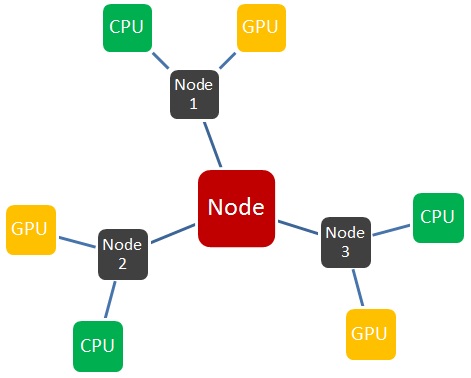

MPI is a well-known programming model for distributed-memory computing. If you have access to GPU resources, MPI can be used to distribute tasks across machines, each of which can use both its CPU and its GPU to process its share of the work.

My toy problem at hand is to use a mix of MPI and CUDA to handle traditional sparse matrix-vector multiplication. The program can be structured as:

- Read a sparse matrix from disk, and split it into sub-matrices.

- Use MPI to distribute the sub-matrices to processes.

- Each process calls a CUDA kernel to handle the multiplication, then copies the result from GPU memory back to its host memory.

- Use MPI to gather results from each of the processes, and assemble the final result vector.
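The split-and-gather idea in the steps above can be sketched in plain C without MPI or CUDA. This is only an illustration under several assumptions that are not in the original: the matrix is stored in CSR format, it is split into contiguous row blocks, and the names `CsrMatrix`, `block_range`, `spmv_rows` and `split_matches_full` are hypothetical. An ordinary loop stands in for the MPI processes, and a CPU loop stands in for the CUDA kernel.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical CSR storage (an assumption, the post does not name a format):
   row_ptr has n_rows + 1 entries, and the nonzeros of row r are
   vals[row_ptr[r]] .. vals[row_ptr[r + 1] - 1]. */
typedef struct {
    size_t n_rows;
    const size_t *row_ptr;
    const size_t *col_idx;
    const double *vals;
} CsrMatrix;

/* Rows [*begin, *end) owned by block `part` out of `n_parts` contiguous
   blocks. The first (n_rows % n_parts) blocks get one extra row. */
void block_range(size_t n_rows, size_t n_parts, size_t part,
                 size_t *begin, size_t *end)
{
    assert(n_parts > 0 && part < n_parts);
    size_t base = n_rows / n_parts;
    size_t extra = n_rows % n_parts;
    *begin = part * base + (part < extra ? part : extra);
    *end = *begin + base + (part < extra ? 1 : 0);
}

/* y[r] = sum over j of A[r][j] * x[j], for rows r in [begin, end).
   CPU stand-in for the work each process would hand to its GPU. */
void spmv_rows(const CsrMatrix *a, const double *x,
               size_t begin, size_t end, double *y)
{
    for (size_t r = begin; r < end; ++r) {
        double sum = 0.0;
        for (size_t k = a->row_ptr[r]; k < a->row_ptr[r + 1]; ++k)
            sum += a->vals[k] * x[a->col_idx[k]];
        y[r] = sum;
    }
}

/* Multiplies a small example matrix block by block into y_out and
   returns 1 if that equals the result of a single pass over all rows. */
int split_matches_full(double y_out[4])
{
    /* 4x4 example matrix:
       [1 0 2 0]
       [0 3 0 0]
       [0 0 4 5]
       [6 0 0 7] */
    static const size_t row_ptr[] = {0, 2, 3, 5, 7};
    static const size_t col_idx[] = {0, 2, 1, 2, 3, 0, 3};
    static const double vals[] = {1, 2, 3, 4, 5, 6, 7};
    const CsrMatrix a = {4, row_ptr, col_idx, vals};
    const double x[4] = {1, 2, 3, 4};
    const size_t n_parts = 3; /* stands in for the number of processes */
    double y_full[4];

    spmv_rows(&a, x, 0, a.n_rows, y_full);

    /* Each iteration plays the role of one process working on its rows. */
    for (size_t part = 0; part < n_parts; ++part) {
        size_t begin, end;
        block_range(a.n_rows, n_parts, part, &begin, &end);
        spmv_rows(&a, x, begin, end, y_out);
    }

    for (size_t r = 0; r < a.n_rows; ++r)
        if (y_out[r] != y_full[r])
            return 0;
    return 1;
}
```

Calling `split_matches_full(y)` from a small `main()` returns 1 and leaves 7, 6, 32, 34 in `y`. With a row-block split like this, each block needs only its own rows of the matrix but the whole input vector, and the gathered pieces can simply be concatenated in block order.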

One option is to put both the MPI and CUDA code in a single file, spaghetti.cu. This program can be compiled with nvcc, which internally uses gcc/g++ to compile your C/C++ code, and linked against your MPI library:

```bash
nvcc -I/usr/mpi/gcc/openmpi-1.4.6/include -L/usr/mpi/gcc/openmpi-1.4.6/lib64 -lmpi spaghetti.cu -o program
```

The downside is that it might end up being a plate of spaghetti if you have a seriously long program.

Another, cleaner option is to keep the MPI and CUDA code in two separate files, main.c and multiply.cu. Compile them into object files (.o) with mpicc and nvcc respectively, then combine the object files into a single executable with mpicc. This is the reverse of the first approach: because mpicc rather than nvcc does the final link, you have to link against the CUDA runtime library yourself.

```bash
module load openmpi cuda   # (optional) load modules on your node
mpicc -c main.c -o main.o
nvcc -arch=sm_20 -c multiply.cu -o multiply.o
mpicc main.o multiply.o -lcudart -L/apps/CUDA/cuda-5.0/lib64/ -o program
```

And finally, you can request two processes and two GPUs to test your program on the cluster using a PBS script like:

```bash
#PBS -l nodes=2:ppn=2:gpus=2
mpiexec -np 2 ./program
```

The main.c, containing the call to the CUDA file, would look like:

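```c
/* main.c */
#include "mpi.h"

/* Defined in multiply.cu; declared here so the C compiler knows its signature. */
void call_me_maybe(void);

int main(int argc, char *argv[])
{
    int myRank, numProcs;

    /* It's important to put this call at the beginning of the program,
       after variable declarations. */
    MPI_Init(&argc, &argv);

    /* Get the rank of this process and the number of MPI processes. */
    MPI_Comm_rank(MPI_COMM_WORLD, &myRank);
    MPI_Comm_size(MPI_COMM_WORLD, &numProcs);

    /* Call function 'call_me_maybe' from CUDA file multiply.cu. */
    call_me_maybe();

    /* ... */

    MPI_Finalize();
    return 0;
}
```

And in multiply.cu, define call_me_maybe() with `extern "C"`. nvcc compiles .cu files as C++, and `extern "C"` keeps the function name unmangled so that the C code in main.c can link to it. main.c then needs only a one-line declaration of the function, without any additional #include: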

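```cuda
/* multiply.cu */
#include <cuda.h>
#include <cuda_runtime.h>

/* Parameter types are placeholders; the original kernel listed none,
   but the launch below passes two arguments. */
__global__ void __multiply__ (const float *x, float *y)
{
}

extern "C" void call_me_maybe()
{
    /* ... Load CPU data into GPU buffers */

    __multiply__ <<< ...block configuration... >>> (x, y);

    /* ... Transfer data from GPU to CPU */
}
```

Rock on \m/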