Innovation Engine: Automated Creativity and Improving Stochastic Optimization via Deep Learning

Anh Nguyen, Jason Yosinski, Jeff Clune

Links: pdf | code | project page

The Achilles Heel of stochastic optimization algorithms is getting trapped on local optima. Novelty Search avoids this problem by encouraging a search in all interesting directions. That occurs by replacing a performance objective with a reward for novel behaviors, as defined by a human-crafted, and often simple, behavioral distance function. While Novelty Search is a major conceptual breakthrough and outperforms traditional stochastic optimization on certain problems, it is not clear how to apply it to challenging, high-dimensional problems where specifying a useful behavioral distance function is difficult. For example, in the space of images, how do you encourage novelty to produce hawks and heroes instead of endless pixel static? Here we propose a new algorithm, the Innovation Engine, that builds on Novelty Search by replacing the human-crafted behavioral distance with a Deep Neural Network (DNN) that can recognize interesting differences between phenotypes. The key insight is that DNNs can recognize similarities and differences between phenotypes at an abstract level, wherein novelty means interesting novelty. For example, a novelty pressure in image space does not explore in the low-level pixel space, but instead creates a pressure to create new types of images (e.g. churches, mosques, obelisks, etc.). Here we describe the long-term vision for the Innovation Engine algorithm, which involves many technical challenges that remain to be solved. We then implement a simplified version of the algorithm that enables us to explore some of the algorithm’s key motivations. Our initial results, in the domain of images, suggest that Innovation Engines could ultimately automate the production of endless streams of interesting solutions in any domain: e.g. producing intelligent software, robot controllers, optimized physical components, and art.

Conference: GECCO 2015. Best Paper Award (3% acceptance rate)

Videos:

- Jeff Clune’s talk at U Wyoming

- Innovation Engine exhibition at Dublin, Ireland

Downloads: High-quality images from the paper | 10,000 CPPN images

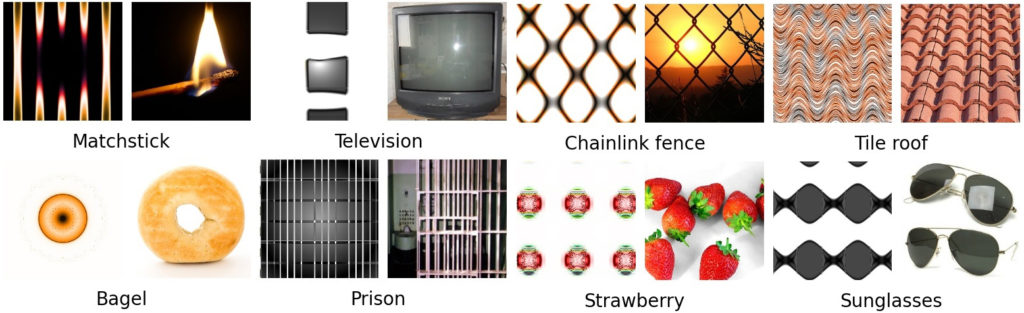

Figure 1: Images produced by an Innovation Engine that look like example target classes. In each pair, an evolved image (left) is shown with a real image (right) from the training set used to train the deep neural network that evaluates evolving images.

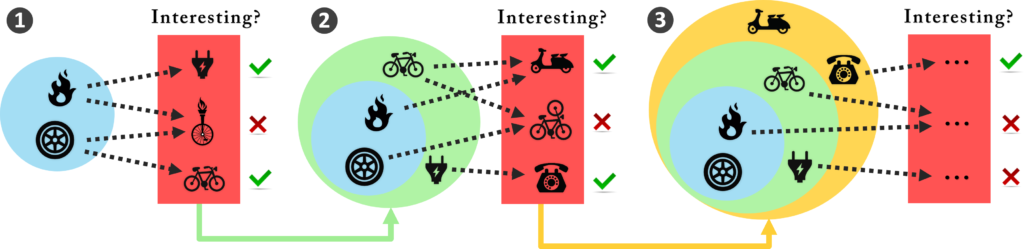

Figure 2: The Innovation Engine: Human culture creates amazing inventions, such as the telephone, by accumulating a multitude of interesting innovations in all directions. These stepping stones are collected, improved and then combined to create new innovations, which in turn, serve as the stepping stones for innovations in later generations. We propose to automate this process by having stochastic optimization (e.g. evolutionary algorithms) generate candidate solutions from the current archive of stepping stones and Deep Neural Networks evaluate whether they are interestingly new and should thus be archived.
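The loop in Figure 2 can be illustrated with a minimal sketch. Everything here is an assumption made for illustration, not the paper's implementation: `features` is a hypothetical stand-in for a trained DNN embedding, genomes are 2-D points rather than images, and "interestingly new" is approximated by the mean distance to the k nearest archived feature vectors, with a hand-picked threshold.

```python
import math
import random


def features(genome):
    """Hypothetical stand-in for a DNN embedding (a fixed nonlinear map)."""
    x, y = genome
    return (math.tanh(x + y), math.tanh(x - y))


def novelty(feat, archive_feats, k=3):
    """Mean distance from feat to its k nearest archived feature vectors."""
    dists = sorted(math.dist(feat, other) for other in archive_feats)
    nearest = dists[:k]
    return sum(nearest) / len(nearest)


def innovation_loop(steps=500, threshold=0.15, sigma=0.3, seed=0):
    """Generate candidates from the archive and keep the novel ones."""
    rng = random.Random(seed)
    archive = [(0.0, 0.0)]  # stepping stones collected so far
    for _ in range(steps):
        # Stochastic optimization step: mutate a randomly chosen stepping stone.
        parent = rng.choice(archive)
        child = tuple(g + rng.gauss(0.0, sigma) for g in parent)
        # Evaluation step: archive the child only if it is novel in feature space.
        archive_feats = [features(g) for g in archive]
        if novelty(features(child), archive_feats) > threshold:
            archive.append(child)
    return archive


archive = innovation_loop()
print(len(archive), "stepping stones archived")
```

Novelty is measured in feature space rather than genome space, so two genomes that differ numerically but map to nearly the same features are not treated as different innovations.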

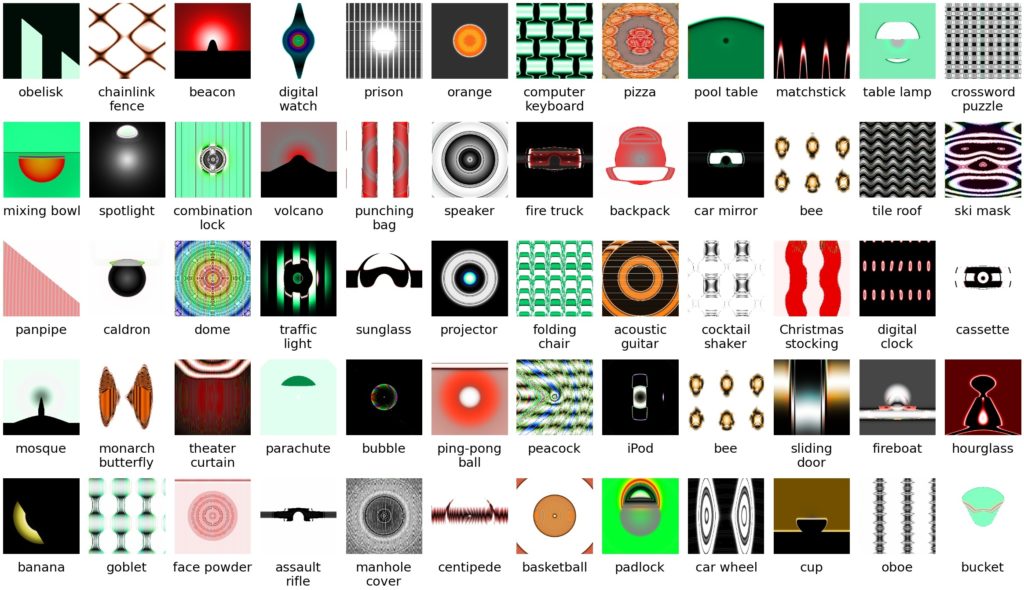

Figure 3: Innovation Engines are capable of producing images that are not only given high confidence scores by a deep neural network, but are also qualitatively interesting and recognizable. To show the most interesting images we observed evolve, we selected images from both the 10 main experiment runs and 10 preliminary experiments with slightly different parameters.

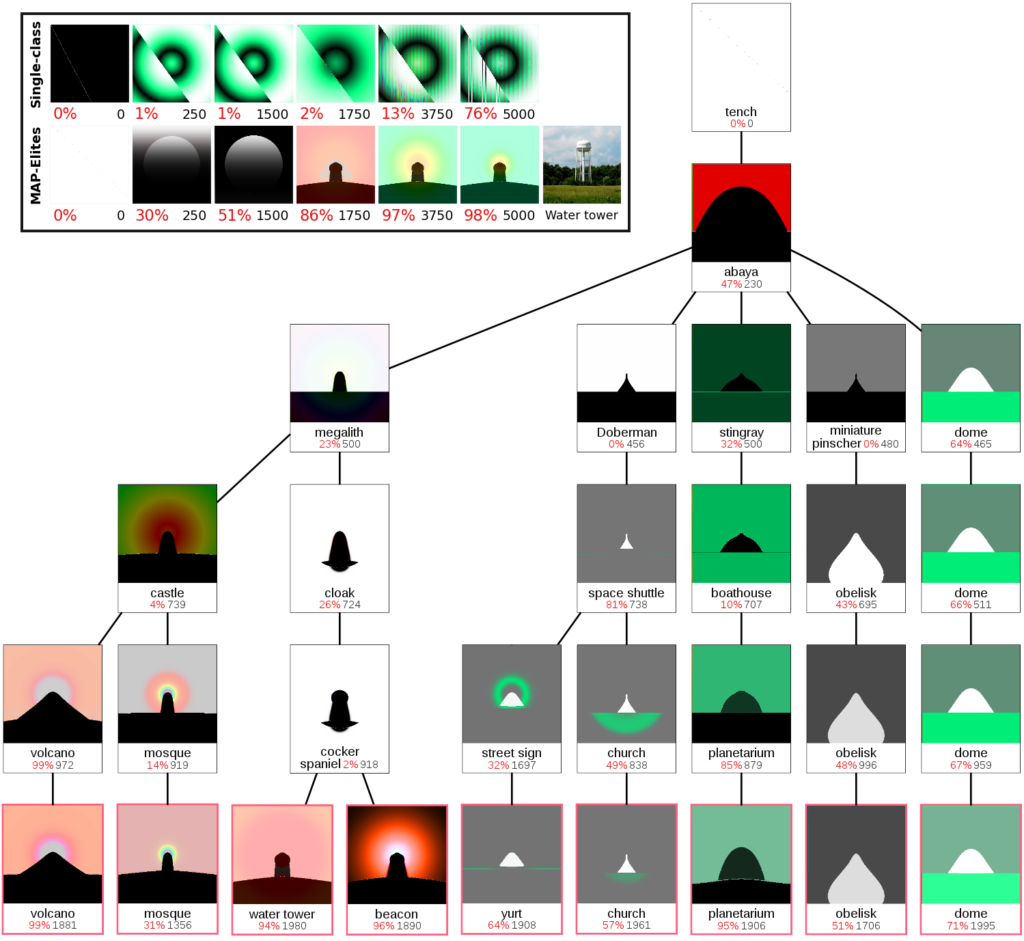

Figure 4: Inset Panel: The champions for the water tower class over evolutionary time for a single-class evolutionary algorithm (top) and the MAP-Elites variant of the Innovation Engine (bottom). Under each evolved image is the percent confidence the DNN has that the image is a water tower (left) and the generation in which the image was created (right). The single-class EA gets trapped on a local optimum and refines the same basic idea, resulting in an unrecognizable image with mediocre performance. However, because MAP-Elites simultaneously evolves towards many objectives, a lineage that is a champion of one class will often produce an offspring that becomes a champion of a different class, a phenomenon we call goal switching. That occurs here at the 1750th generation, when the offspring of a champion of the cocker spaniel (dog) class (see main panel in this figure) becomes the best water tower produced so far. Its descendants are refined to produce a high-confidence, recognizable image. A water tower image from the training set is provided for reference.

Main Panel: A phylogenetic tree depicting how lineages evolve and goal switch from one class to another in an Innovation Engine (here, version 1.0 with MAP-Elites). Each image is displayed with the class the DNN placed it in, the associated DNN confidence score (red), and the generation in which it was created. Connections indicate ancestor-child relationships. One reason Innovation Engines work is that similar types of things (e.g. various building structures) can be produced by phylogenetically related genomes, meaning that the solution to one problem can be repurposed for a similar type of problem. Note the visual similarity between the related solutions. Another reason they work is that the path to a solution often involves a series of things that do not increasingly resemble the final solution (at least, not without the benefit of hindsight). For example, note the many unrelated classes that served as stepping stones to recognizable objects (e.g. the path through cloaks and cocker spaniels to arrive at a beacon).
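The goal-switching dynamic described in the Figure 4 caption can be sketched with a toy multi-class MAP-Elites loop. This is a hedged illustration under stated assumptions, not the authors' code: `PROTOTYPES` and `confidences` are hypothetical stand-ins for a trained DNN's per-class outputs, genomes are 2-D points rather than CPPN-encoded images, and the mutation operator is simple Gaussian noise.

```python
import math
import random

# Hypothetical class "prototypes"; a real system would query a trained DNN.
PROTOTYPES = {
    "water tower": (2.0, 2.0),
    "cocker spaniel": (1.0, 1.5),
    "beacon": (-2.0, 1.0),
}


def confidences(genome):
    """Stand-in for DNN outputs: one score in (0, 1] per class."""
    return {c: math.exp(-math.dist(genome, p) ** 2) for c, p in PROTOTYPES.items()}


def map_elites(generations=2000, sigma=0.2, seed=0):
    """Keep one champion per class; count offspring that win another class."""
    rng = random.Random(seed)
    start = (0.0, 0.0)
    start_scores = confidences(start)
    champions = {c: (start, start_scores[c]) for c in PROTOTYPES}
    classes = list(PROTOTYPES)
    goal_switches = 0
    for _ in range(generations):
        # Pick the champion of a random class and mutate it.
        parent_class = rng.choice(classes)
        parent = champions[parent_class][0]
        child = tuple(g + rng.gauss(0.0, sigma) for g in parent)
        # Score the child against every class, not only its parent's class.
        for cls, score in confidences(child).items():
            if score > champions[cls][1]:
                champions[cls] = (child, score)
                if cls != parent_class:
                    goal_switches += 1  # offspring became champion elsewhere
    return champions, goal_switches


champions, goal_switches = map_elites()
for cls, (genome, score) in champions.items():
    print(f"{cls}: confidence {score:.3f}")
print("goal switches:", goal_switches)
```

Because every child is scored against all classes, progress made while optimizing one class can be inherited by another, which is the mechanism a single-class EA lacks.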

Figure 5: To test the hypothesis that the CPPN fooling images could actually be considered art, we submitted a selection of them to a selective art contest: the “University of Wyoming 40th Annual Juried Student Exhibition”, which only accepted 35.5% of the submissions. Not only were the images accepted, but they were also amongst the 21.3% of submissions to be given an award. The work was then displayed at the University of Wyoming Art Museum.