The reasoning capabilities of Large Language Models (LLMs) have led to their increasing use in several critical applications, particularly education, where they support problem-solving, tutoring, and personalized study. Chain-of-thought (CoT) reasoning [1, 2] is well known to help LLMs decompose a problem into steps and explore the solution space more effectively, leading to impressive performance on mathematical and reasoning benchmarks. As CoT length grows to thousands of tokens per question [1], it remains unclear how users can comprehend LLM reasoning and detect errors or hallucinations. To address this problem and understand how reasoning can improve human-AI interaction, we present three new interactive reasoning interfaces: interactive CoT (iCoT), interactive Program-of-Thought (iPoT), and interactive Graph (iGraph). That is, we ask LLMs themselves to generate an interactive web interface wrapped around the original CoT content, which may be presented as text (iCoT), graphs (iGraph), or code (iPoT). This interface lets users interact with the reasoning chains of LLMs and offers a new way to read and validate them. In a study with 125 participants, the interactive interfaces significantly improve user performance. Specifically, iGraph users achieve the highest error detection rate (85.6%), followed by iPoT (82.5%) and iCoT (80.6%), all outperforming standard CoT (73.5%). Interactive interfaces also lead to faster user validation: iGraph users are faster (57.9 secs per question) than users of iCoT and iPoT (60 secs) and standard CoT (64.7 secs). A post-study questionnaire shows that users prefer iGraph, citing its superior ability to help them follow the LLM's reasoning. We discuss the implications of these results and provide recommendations for the future design of reasoning models.

🌟 Try the interactive demo here

Acknowledgment: This work is supported by the National Science Foundation under Grant No. 2145767, Adobe Research, and the NaphCare Charitable Foundation.

Conference: ACM IUI 2026 (acceptance rate: ~25%)

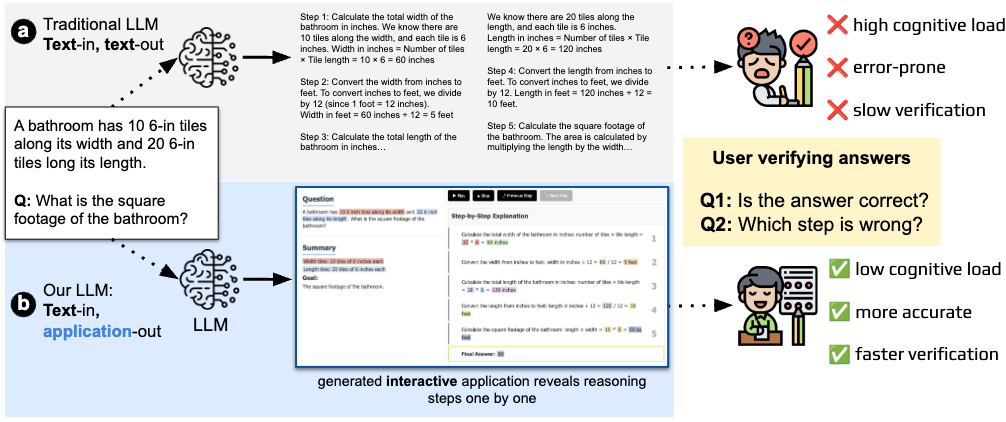

Figure 1: Given a GSM8K question, LLMs typically provide step-by-step reasoning followed by the final answer. However, this output is often static and long, imposing a higher cognitive load on users and leading to slower and more error-prone answer verification. In contrast, we prompt LLMs to generate an interactive HTML/JavaScript application wrapped around the reasoning. This interface enables users to verify the reasoning more efficiently via two tools: (a) navigation buttons (inspired by those in common IDEs) and (b) colored highlights.
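To make the idea of an interface "wrapped around" the reasoning concrete, here is a minimal hand-written sketch. It is not the authors' prompt, nor actual LLM output: the function name `build_step_viewer`, the page layout, and the example question are all hypothetical. It only illustrates the two tools named in the caption, navigation buttons and a colored highlight on the current step.

```python
import html


def build_step_viewer(question: str, steps: list[str]) -> str:
    """Return a self-contained HTML page that shows reasoning steps
    with Prev/Next buttons and a highlight on the active step.

    Hypothetical illustration only; in the paper the LLM itself
    generates the interface.
    """
    # Escape user-visible text so it cannot break the markup.
    items = "\n".join(
        f'<li class="step" id="step-{i}">{html.escape(s)}</li>'
        for i, s in enumerate(steps)
    )
    return f"""<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8">
<style>
  .step {{ opacity: 0.35; padding: 4px; }}
  .step.active {{ opacity: 1; background: #fff3b0; }}
</style>
</head>
<body>
<p id="question">{html.escape(question)}</p>
<ol>
{items}
</ol>
<button id="prev">Prev</button>
<button id="next">Next</button>
<script>
  const total = {len(steps)};
  let current = 0;
  // Clamp the index and highlight only the active step.
  function show(i) {{
    current = Math.max(0, Math.min(total - 1, i));
    document.querySelectorAll(".step").forEach((el, k) =>
      el.classList.toggle("active", k === current));
  }}
  document.getElementById("prev").onclick = () => show(current - 1);
  document.getElementById("next").onclick = () => show(current + 1);
  show(0);
</script>
</body>
</html>"""


# Made-up example problem (not taken from GSM8K).
page = build_step_viewer(
    "A pen costs $2 and a notebook costs $3. "
    "How much do 2 pens and 1 notebook cost?",
    ["Two pens cost 2 * 2 = 4 dollars.",
     "Adding the notebook: 4 + 3 = 7 dollars."],
)
```

Saving `page` to a file and opening it in a browser should show one highlighted step at a time. Stepping through the chain this way, rather than reading a static block of text, is the kind of interaction the caption describes.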

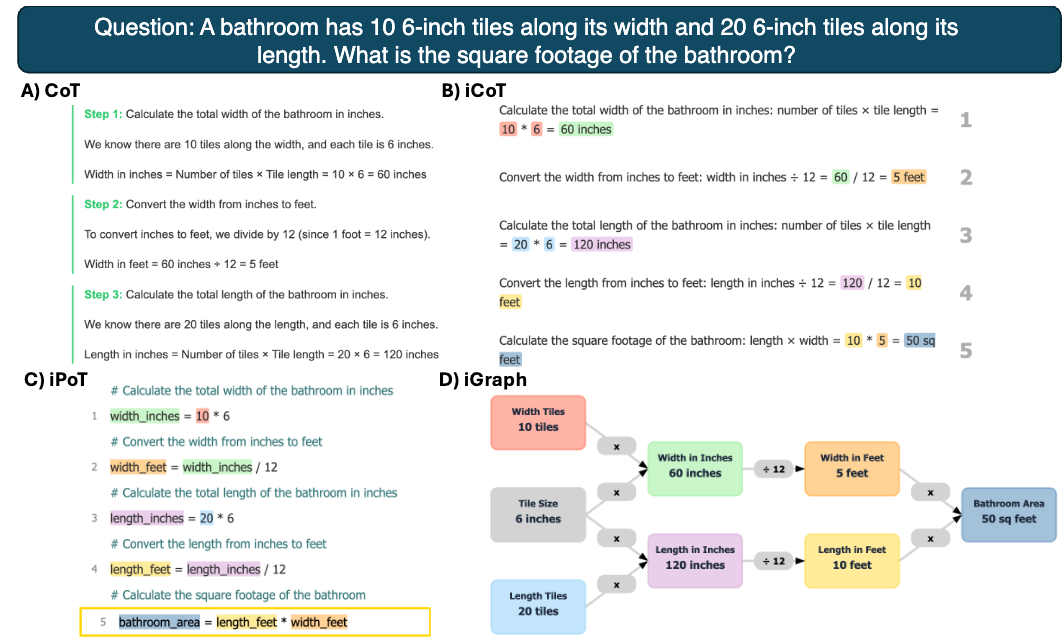

Figure 2: Examples of four explanation formats used in the study: (A) traditional Chain-of-Thought (CoT), (B) interactive Chain-of-Thought (iCoT), (C) interactive Program-of-Thought (iPoT), and (D) interactive Graph (iGraph). Each format presents the same reasoning steps in a different modality (textual, structured, code-like, or visual). For consistency, all four formats present the same mathematical problem.