Explaining artificial intelligence (AI) predictions is increasingly important and even imperative in many high-stakes applications where humans are the ultimate decision-makers. In this work, we propose two novel architectures of self-interpretable image classifiers that first explain, and then predict (as opposed to post-hoc explanations) by harnessing the visual correspondences between a query image and exemplars. Our models consistently improve accuracy (by 1 to 4 points) on out-of-distribution (OOD) datasets while performing marginally worse (by 1 to 2 points) on in-distribution tests than ResNet-50 and a k-nearest neighbor classifier (kNN). In a large-scale human study on ImageNet and CUB, users find our correspondence-based explanations more useful than kNN explanations. Our explanations help users reject the AI’s wrong decisions more accurately than all other tested methods. Interestingly, for the first time, we show that it is possible to achieve complementary human-AI team accuracy (i.e., accuracy higher than that of either the AI alone or humans alone) on ImageNet and CUB image classification tasks.

Acknowledgment: This work is supported by the National Science Foundation under Grant Nos. 1850117 and 2145767, Adobe Research, and donations from the NaphCare foundation.

Conference: NeurIPS 2022 (acceptance rate: 25.3% out of 10,411 submissions)

5-min NeurIPS 2022 conference presentation:

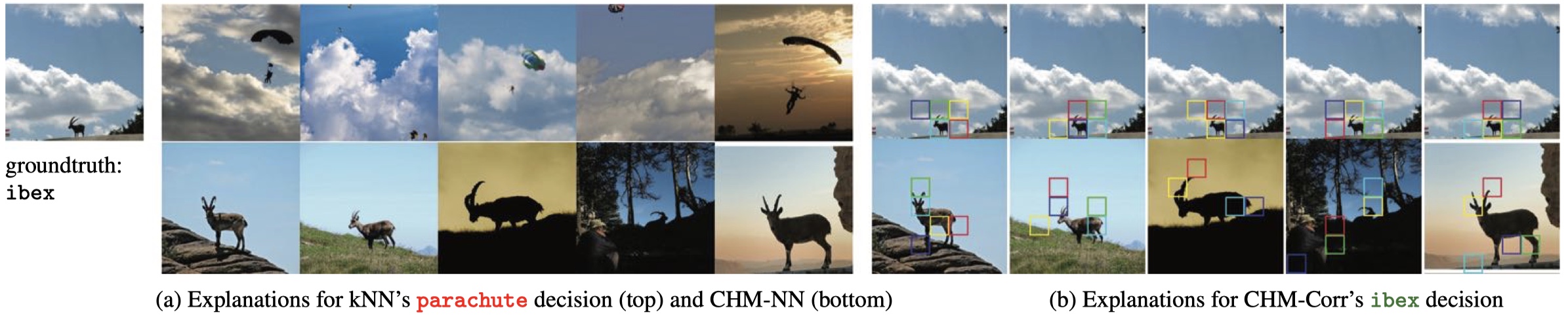

Figure 1: The ibex image is misclassified as parachute due to its similarity (clouds in a blue sky) to parachute scenes (a). In contrast, CHM-Corr correctly labels the input because it matches ibex images mostly using the animal’s features, discarding the background information (b).

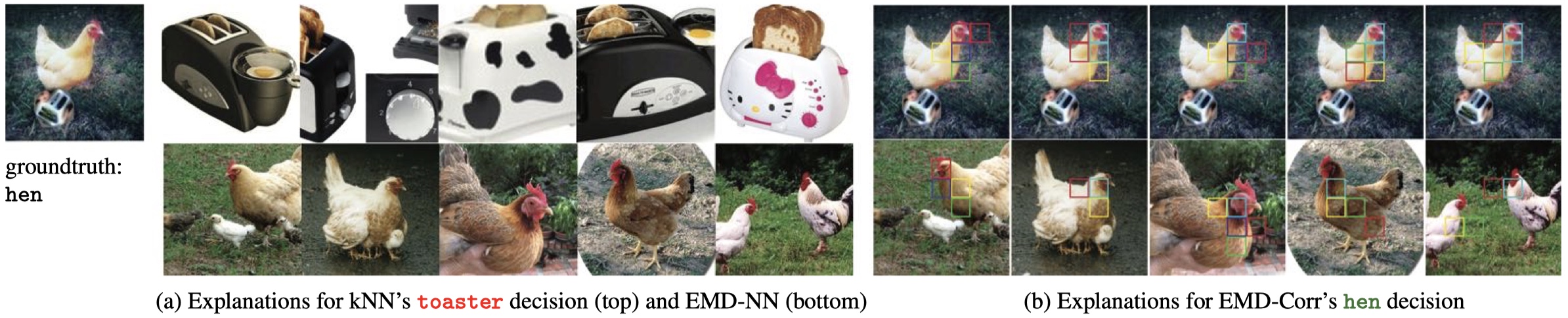

Figure 2: Operating on image-level visual similarity, kNN incorrectly labels the input as toaster due to the adversarial toaster patch (a). EMD-Corr instead ignores the adversarial patch and uses only the head and neck patches of the hen to make decisions (b).

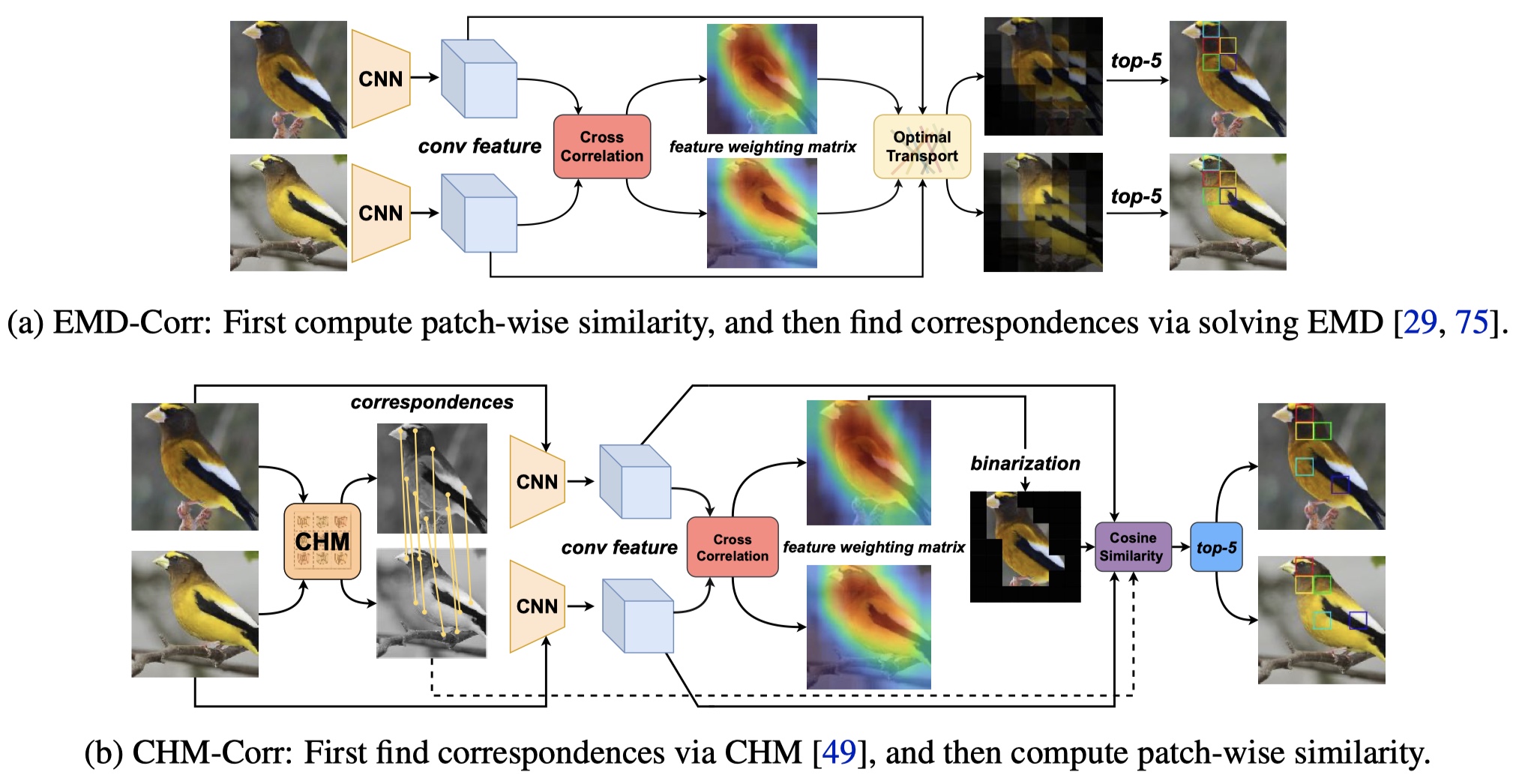

Figure 3: EMD-Corr and CHM-Corr both re-rank kNN’s top-50 candidates using the patch-wise similarity between the query and each candidate, computed over the top-5 pairs of patches that are the most important and the most similar (i.e., highest EMD flows in EMD-Corr and highest cosine similarity in CHM-Corr).
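The re-ranking step described in Figure 3 can be illustrated with a minimal sketch. It is not the authors' implementation: it assumes each image is already represented as a list of patch embedding vectors, scores a query-candidate pair by the mean cosine similarity of its best-matching patch pairs, and ignores the patch-importance weighting and the EMD flows entirely. The names `cosine`, `pair_score`, and `rerank` are hypothetical.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def pair_score(query_patches, cand_patches, top_pairs=5):
    """Mean cosine similarity over the `top_pairs` best-matching patch pairs.

    Assumption for illustration: each query patch is paired with its most
    similar candidate patch, and only the highest-scoring pairs are kept.
    """
    best = [max(cosine(q, c) for c in cand_patches) for q in query_patches]
    top = sorted(best, reverse=True)[:top_pairs]
    return sum(top) / len(top)


def rerank(query_patches, candidates, top_pairs=5):
    """Return candidate labels sorted by descending patch-wise score.

    `candidates` is a list of (label, patches) tuples, e.g. kNN's shortlist.
    """
    scored = [(pair_score(query_patches, patches, top_pairs), label)
              for label, patches in candidates]
    scored.sort(key=lambda s: s[0], reverse=True)
    return [label for _, label in scored]


# Toy example with 2-D "patch embeddings".
query = [[1.0, 0.0], [0.0, 1.0]]
cand_a = [[1.0, 0.0], [0.0, 1.0]]   # patches line up with the query
cand_b = [[1.0, 1.0], [1.0, 1.0]]   # patches only partially similar
order = rerank(query, [("b", cand_b), ("a", cand_a)], top_pairs=2)
```

In this toy case candidate `a` moves ahead of `b` because its patches match the query's patches exactly, even though `b` was listed first.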

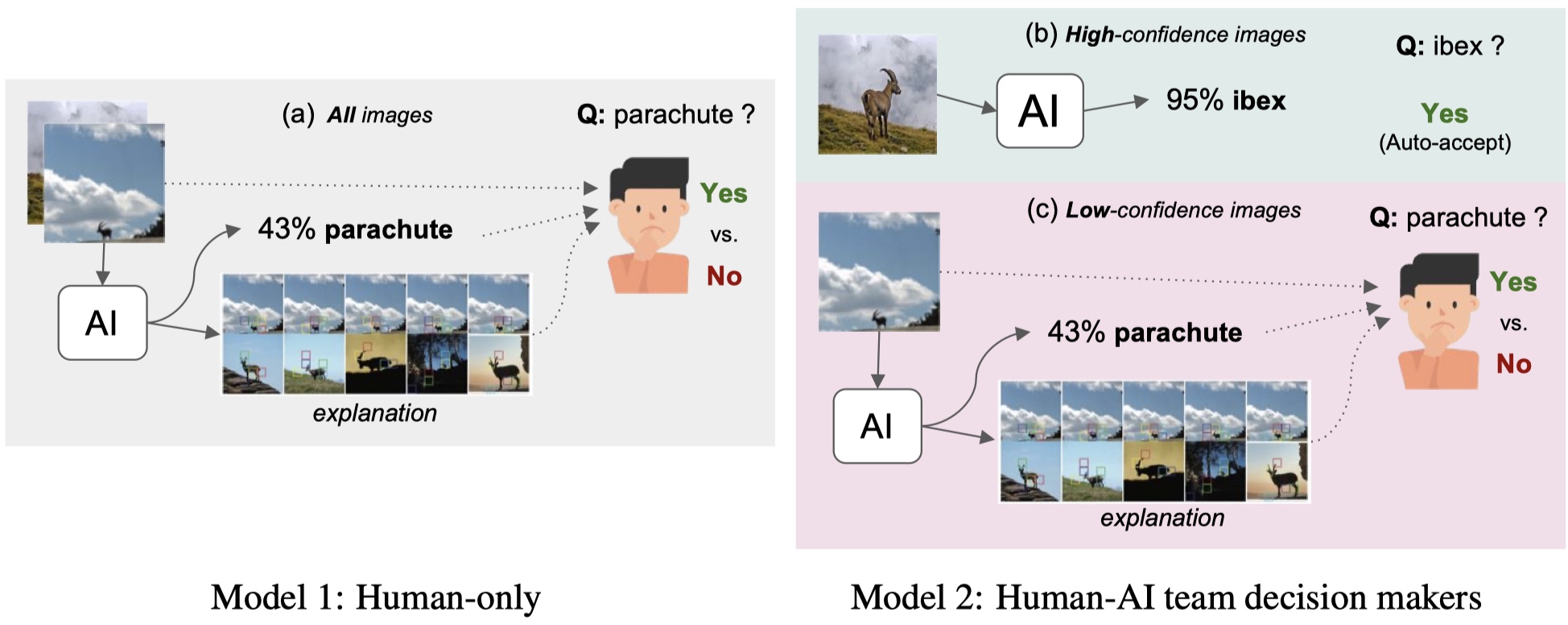

Figure 4: Two human-AI interaction models. In model 1 (a), for all images, users decide (Yes/No) whether the AI’s predicted label is correct given the input image, the AI’s top-1 label and confidence score, and explanations. In model 2, AI decisions are automatically accepted if the AI is highly confident (b). Otherwise, humans make the decisions (c) in the same fashion as in model 1.
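The control flow of model 2 can be sketched as follows. This is an illustrative sketch only: the confidence `threshold` value, the trial format, and the function names `model2_outcome` and `team_accuracy` are assumptions, not taken from the paper. A trial counts as correct for the team when a confident AI label is right, or when the human accepts a right label or rejects a wrong one.

```python
def model2_outcome(ai_correct, ai_confidence, human_says_yes, threshold):
    """Return True if the human-AI team reaches the right outcome on one image."""
    if ai_confidence >= threshold:
        # High confidence: the AI's label is accepted without asking the human.
        return ai_correct
    # Low confidence: the human answers Yes/No on the AI's label.
    # The team is right when "Yes" meets a correct label or "No" meets a wrong one.
    return human_says_yes == ai_correct


def team_accuracy(trials, threshold):
    """Fraction of trials with a correct team outcome.

    `trials` is a list of (ai_correct, ai_confidence, human_says_yes) tuples.
    """
    outcomes = [model2_outcome(c, conf, yes, threshold) for c, conf, yes in trials]
    return sum(outcomes) / len(outcomes)


# Hypothetical trials; the 0.9 threshold is an arbitrary illustrative value.
trials = [
    (True, 0.95, False),   # auto-accepted, AI is right
    (False, 0.40, False),  # human correctly rejects a wrong label
    (False, 0.50, True),   # human wrongly accepts a wrong label
    (True, 0.30, True),    # human correctly accepts a right label
]
accuracy = team_accuracy(trials, threshold=0.9)
```

Setting `threshold` above every possible confidence value sends all images to the human, which reduces this sketch to model 1.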

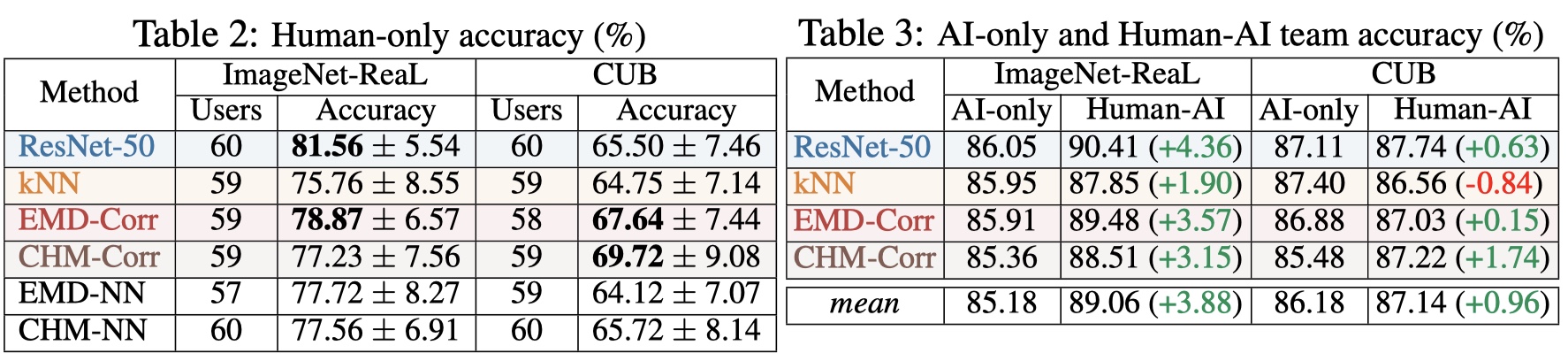

On ImageNet-ReaL, correspondence-based explanations are more useful to users than kNN explanations. On CUB fine-grained bird classification, correspondence-based explanations are the most useful to users, helping them reject AI misclassifications more accurately. Human-AI teams outperform both the AI alone and humans alone.

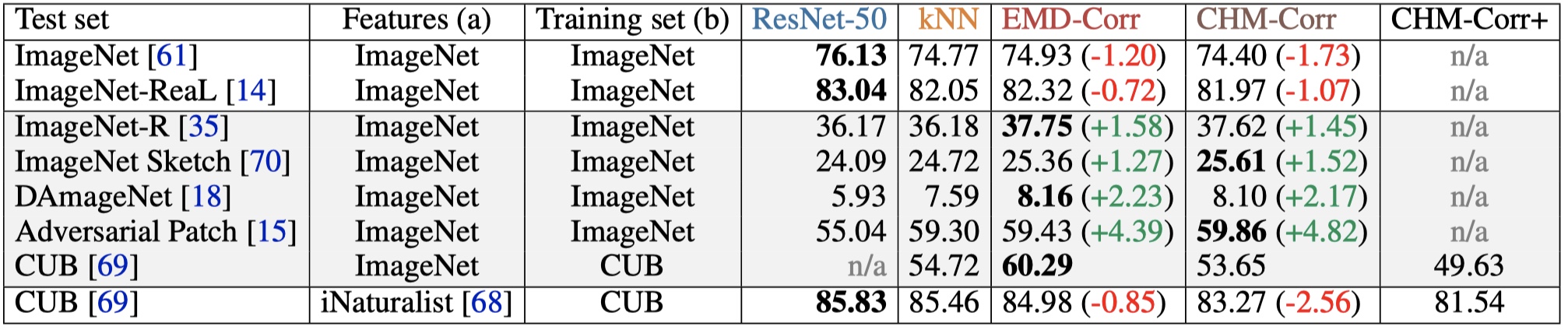

Table 1: Top-1 accuracy (%). ResNet-50 models’ classification layer is finetuned on a specified training set in (b). All other classifiers are non-parametric, nearest-neighbor models based on the pretrained ResNet-50 features (a) and retrieve neighbors from the training set (b) during testing. EMD-Corr and CHM-Corr outperform ResNet-50 models on all out-of-distribution datasets and slightly underperform on in-distribution sets.
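For readers unfamiliar with non-parametric, nearest-neighbor classification over pretrained features, here is a minimal sketch. It assumes the feature vectors have already been extracted, uses cosine similarity and a majority vote, and picks `k=3` arbitrarily; the similarity measure and `k` used for Table 1 are not stated here, and `knn_predict` is a hypothetical name.

```python
import math
from collections import Counter


def cosine(u, v):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def knn_predict(query, train, k=3):
    """Majority label among the k training features most similar to `query`.

    `train` is a list of (feature_vector, label) tuples. On a tied vote the
    label that appears first among the ranked neighbors wins.
    """
    ranked = sorted(train, key=lambda item: cosine(query, item[0]), reverse=True)
    votes = Counter(label for _, label in ranked[:k])
    return votes.most_common(1)[0][0]


# Toy 2-D "features" standing in for pretrained embeddings.
train = [
    ([1.0, 0.0], "hen"),
    ([0.9, 0.1], "hen"),
    ([0.0, 1.0], "toaster"),
    ([0.1, 0.9], "toaster"),
]
label = knn_predict([1.0, 0.2], train, k=3)
```

No weights are trained in this sketch: changing the training set only changes which neighbors can be retrieved at test time.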

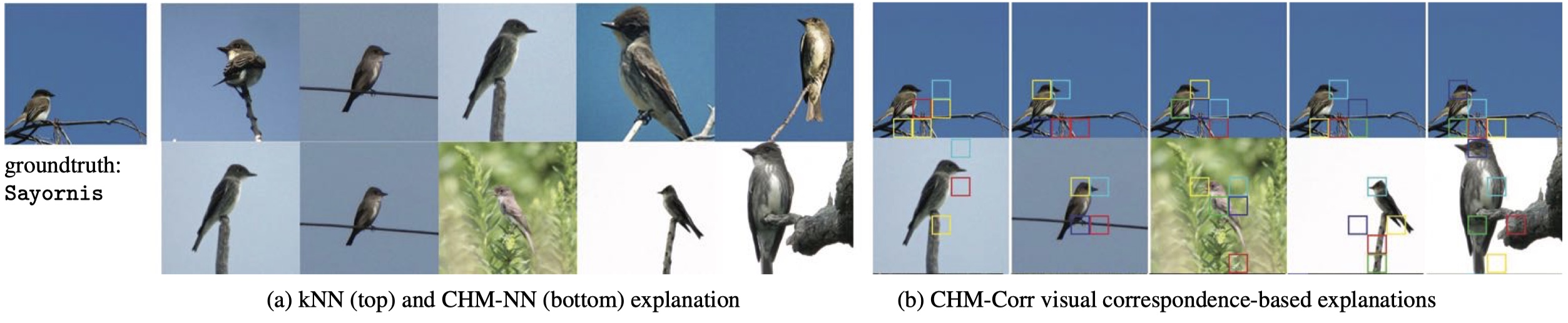

Figure 5: A Sayornis bird image is misclassified as Olive Sided Flycatcher by both the kNN and CHM-Corr models. Yet, 3/3 CHM-Corr users correctly rejected the AI prediction, while 4/4 kNN users wrongly accepted it. CHM-Corr explanations (b) show users more diverse samples and more evidence against the AI’s decision. More similar examples are in the paper.