We can better understand deep neural networks by identifying which features each of their neurons have learned to detect. To do so, researchers have created Deep Visualization techniques including activation maximization, which synthetically generates inputs (e.g. images) that maximally activate each neuron. A limitation of current techniques is that they assume each neuron detects only one type of feature, but we know that neurons can be multifaceted, in that they fire in response to many different types of features: for example, a grocery store class neuron must activate either for rows of produce or for a storefront. Previous activation maximization techniques constructed images without regard for the multiple different facets of a neuron, creating inappropriate mixes of colors, parts of objects, scales, orientations, etc. Here, we introduce an algorithm that explicitly uncovers the multiple facets of each neuron by producing a synthetic visualization of each of the types of images that activate a neuron. We also introduce regularization methods that produce state-of-the-art results in terms of the interpretability of images obtained by activation maximization. By separately synthesizing each type of image a neuron fires in response to, the visualizations have more appropriate colors and coherent global structure. Multifaceted feature visualization thus provides a clearer and more comprehensive description of the role of each neuron.

Conference: Visualization for Deep Learning workshop at ICML 2016. Best Paper Award and Best Student Paper Award.

Videos:

- Talk at ICML 2016

- Two-minute summary by Károly Zsolnai-Fehér’s et al.

Download high-quality visualizations of layer-8 units

Press coverage:

- Nvidia. Harnessing the Caffe Framework for Deep Visualization

- Stanford. ML lecture slides

Figure 1: Visualizing the different facets of a neuron that detects bell peppers. Diverse facets include a single, red bell pepper on a white background (1), multiple red peppers (5), yellow peppers (8), and green peppers on: the plant (4), a cutting board (6), or against a dark background (10). Center: training set Images from the bell pepper class are projected into two dimensions by t-SNE and clustered by k-means. Sides: synthetic images generated by multifaceted feature visualization for the “bell pepper” class neuron for each of the 10 numbered facets. Best viewed electronically, in color, with zoom.

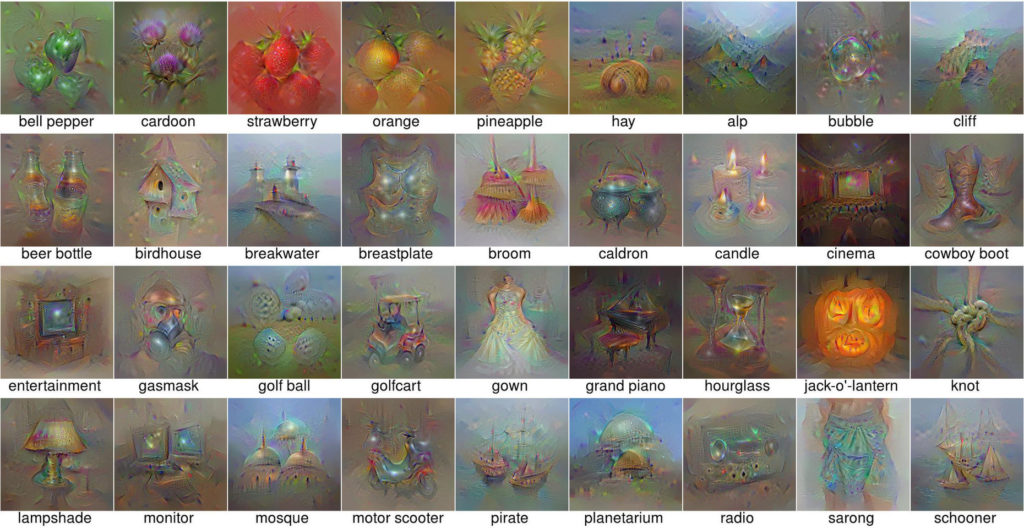

Figure 2: Visualizations of the facet with the most images for many example fc8 class neurons that showcase realistic color distributions and globally consistent objects. While subjective, we believe the improved color, detail, global consistency, and overall recognizability of these images represents the state of the art in visualization using activation maximization. Moreover, the improved quality of the images reveals that even at the highest-level layer, deep neural networks encode much more information about classes than was previously thought, such as the global structure, details, and context of objects. Best viewed in color with zoom.

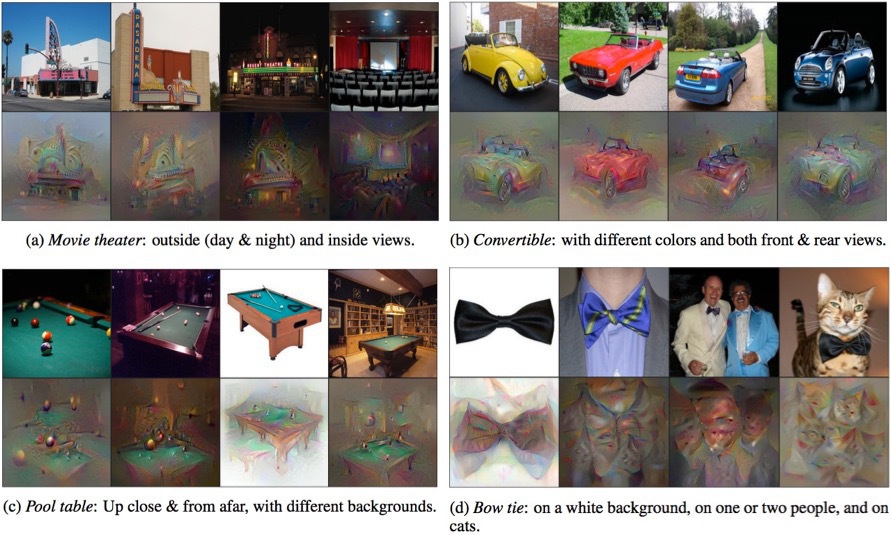

Figure 3: Multifaceted visualization of fc8 units uncovers interesting facets. We show 4 different facets for each neuron. In each pair of images, the bottom is the facet visualization that represents a cluster of images from the training set, and the top is the closest image to the visualization from the same cluster.

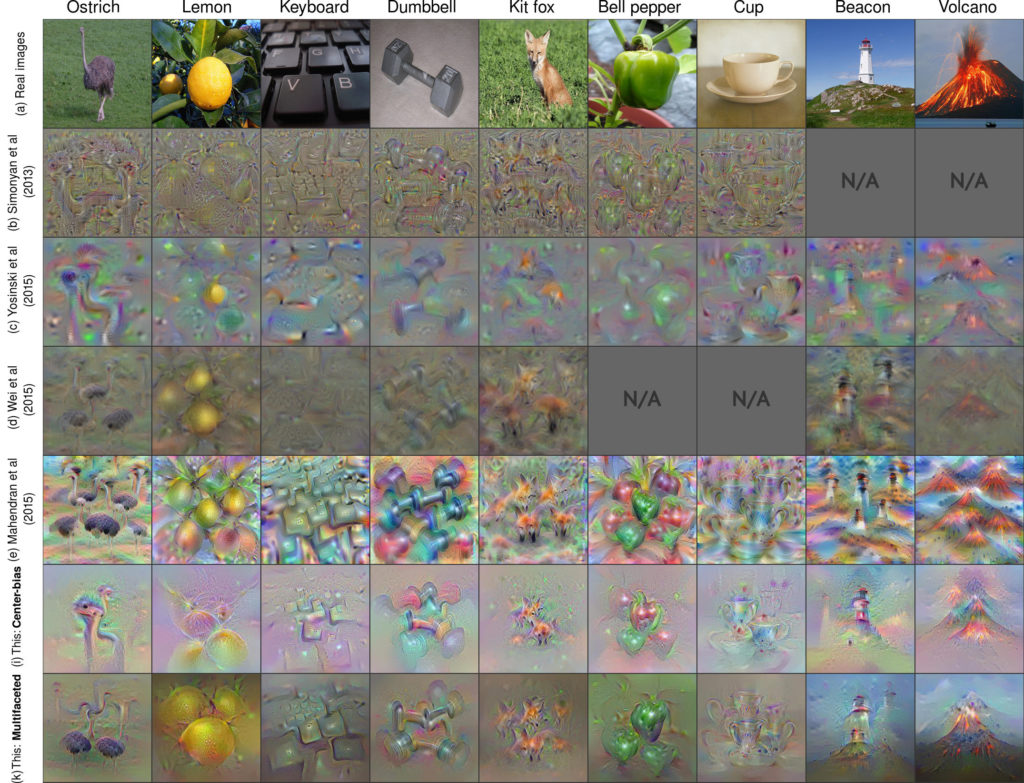

Figure 4: Comparing previous state-of-the-art activation maximization methods to the two new methods: (k) MFV and (i) center-biased regularization. To facilitate comparisons and avoid cherry-picking, we show classes available in previous papers Simonyan et al. 2013, Yosinski et al. 2015 and Wei et al 2015.