Improving zero-shot object-level change detection by incorporating visual correspondence

Hung Huy Nguyen, Pooyan Rahmanzadehgervi, Long Mai, Anh Totti Nguyen

WACV 2025

Links: pdf | code | project page

Detecting object-level changes between two images across possibly different views is a core task in many applications that involve visual inspection or camera surveillance. Existing change-detection approaches suffer from three major limitations: (1) lack of evaluation on image pairs that contain no changes, leading to unreported false positive rates; (2) lack of correspondences (i.e., localizing the regions before and after a change); and (3) poor zero-shot generalization across different domains. To address these issues, we introduce a novel method that leverages change correspondences (a) during training to improve change detection accuracy, and (b) at test time, to minimize false positives. That is, we harness the supervision labels of where an object is added or removed to supervise change detectors, improving their accuracy over previous work by a large margin. Our work is also the first to predict correspondences between pairs of detected changes using estimated homography and the Hungarian algorithm. Our model demonstrates superior performance over existing methods, achieving state-of-the-art results in change detection and change correspondence accuracy across both in-distribution and zero-shot benchmarks.

Acknowledgment: This work is supported by the National Science Foundation under Grant No. 2145767, Adobe Research, and the NaphCare Charitable Foundation.

![At an optimal confidence threshold, CYWS [25] (top row) sometimes still produces false positives—□ in (a) & (c)—and fails to detect changes (a). Dashed - - - boxes show groundtruth changes. First, we encourage detectors to be more aware of changes via a novel contrastive loss. Second, our Hungarian-based post-processing reduces false positives (a), improves change-detection accuracy (b), and estimates correspondences (c–d), i.e., paired changes such as (□, □) and (□, □). Our work (bottom row) is the first to estimate change correspondences compared to prior works [25, 26, 40] (top row).](http://anhnguyen.me/wp-content/uploads/2025/05/change-detection-teaser.jpg)

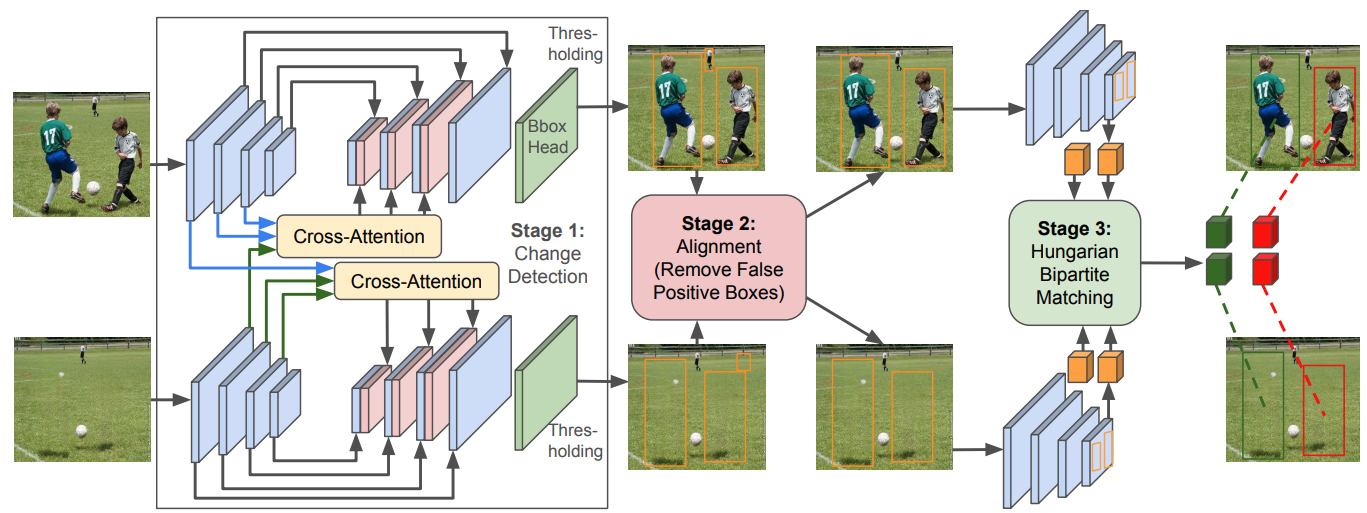

Figure 3. Our approach to detecting changes and predicting their correspondences comprises three stages. First, a change detector, adopted from CYWS [25], identifies changes between the two images. Second, in the alignment step, a detection confidence threshold is chosen and the predicted boxes that pass it are forwarded to the alignment process, which helps remove false-positive boxes. Third, a matching algorithm takes the output of the alignment step and determines the correspondence between each pair of changes across the two images.
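The alignment and matching stages can be illustrated with a minimal sketch. It assumes a homography `H` between the two views has already been estimated, that boxes are given as `(x1, y1, x2, y2)`, and that the matching cost is the distance between warped box centers. The function names, the center-distance cost, and the `max_dist` gate are illustrative assumptions, not the released implementation. The Hungarian step uses SciPy's `linear_sum_assignment`.

```python
import numpy as np
from scipy.optimize import linear_sum_assignment


def box_centers(boxes):
    """Return (N, 2) centers of boxes given as (x1, y1, x2, y2)."""
    boxes = np.asarray(boxes, dtype=float).reshape(-1, 4)
    return np.stack([(boxes[:, 0] + boxes[:, 2]) / 2,
                     (boxes[:, 1] + boxes[:, 3]) / 2], axis=1)


def warp_points(points, H):
    """Apply a 3x3 homography H to (N, 2) points."""
    homog = np.hstack([points, np.ones((len(points), 1))])
    warped = homog @ np.asarray(H, dtype=float).T
    return warped[:, :2] / warped[:, 2:3]


def match_changes(boxes_a, boxes_b, H, max_dist=50.0):
    """Pair boxes in image A with boxes in image B.

    max_dist is a hypothetical gate in pixels: assigned pairs whose
    warped centers are farther apart than this are discarded, so the
    boxes involved are left unmatched.
    """
    centers_a = warp_points(box_centers(boxes_a), H)  # A projected into B
    centers_b = box_centers(boxes_b)
    if len(centers_a) == 0 or len(centers_b) == 0:
        return []
    # Pairwise Euclidean distances form the assignment cost matrix.
    cost = np.linalg.norm(centers_a[:, None, :] - centers_b[None, :, :], axis=2)
    rows, cols = linear_sum_assignment(cost)  # minimum-cost one-to-one matching
    return [(int(r), int(c)) for r, c in zip(rows, cols) if cost[r, c] <= max_dist]


# Toy example: image B is image A shifted 10 px to the right.
H = np.array([[1.0, 0.0, 10.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]])
boxes_a = [[0, 0, 20, 20], [100, 100, 140, 140], [300, 300, 320, 320]]
boxes_b = [[110, 100, 150, 140], [10, 0, 30, 20]]
pairs = match_changes(boxes_a, boxes_b, H)
print(pairs)
```

In this toy case the third box in image A has no counterpart in image B and stays unmatched, which is how a matching step of this kind can flag likely false positives.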

Table 1: Change correspondence F1 score. We compare the matching score of our model and the CYWS model in two scenarios, with and without alignment. Our model outperforms CYWS in both scenarios.
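For reference, an F1 score over predicted correspondences could be computed along the following lines. This sketch assumes each correspondence is an `(index_in_image_1, index_in_image_2)` pair already expressed in terms of groundtruth box indices, so mapping predicted boxes to groundtruth boxes (for example by IoU) is taken as done. The function name and the convention for empty inputs are assumptions, and the paper's exact evaluation protocol may differ.

```python
def correspondence_f1(predicted_pairs, groundtruth_pairs):
    """F1 score between two collections of (idx_in_img1, idx_in_img2) pairs."""
    pred = set(predicted_pairs)
    gt = set(groundtruth_pairs)
    if not pred and not gt:
        return 1.0  # assumed convention: nothing to match, nothing predicted
    true_pos = len(pred & gt)  # pairs that are both predicted and correct
    precision = true_pos / len(pred) if pred else 0.0
    recall = true_pos / len(gt) if gt else 0.0
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Two correct pairs plus one spurious pair: precision 2/3, recall 1.
score = correspondence_f1([(0, 1), (1, 0), (2, 2)], [(0, 1), (1, 0)])
```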
