Explaining how important each input feature is to a classifier’s decision is critical in high-stakes applications. Dozens of explanation methods share one principle: a feature's (here, a token's) attribution is the difference between the classifier's predictions before and after that feature is removed. In causal inference, this difference is the individual treatment effect. A recent method called Input Marginalization (IM) (Kim et al., 2020; Harbecke and Alt, 2020) uses BERT to replace a token instead of deleting it, simulating the do(.) operator and yielding more plausible counterfactuals. However, our rigorous evaluation using five metrics on three datasets found IM explanations to be consistently more biased, less accurate, and less plausible than those derived from simply deleting a word.
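The two attribution rules contrasted above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' code. `toy_classifier` and `toy_mlm` are hypothetical stand-ins for a trained text classifier and for BERT. Attributions are computed as plain probability differences, whereas IM as described by Kim et al. (2020) may instead score the difference in log-odds.

```python
from typing import Callable, Dict, List

# A classifier maps a token list to P(target class | tokens).
Classifier = Callable[[List[str]], float]
# A masked LM maps (tokens, position) to {candidate word: probability}.
MaskedLM = Callable[[List[str], int], Dict[str, float]]


def loo_empty(f: Classifier, tokens: List[str], i: int) -> float:
    """Leave-One-Out: prediction drop when token i is deleted outright."""
    return f(tokens) - f(tokens[:i] + tokens[i + 1:])


def input_marginalization(
    f: Classifier, mlm: MaskedLM, tokens: List[str], i: int, k: int = 10
) -> float:
    """IM-style attribution: prediction drop relative to the expected prediction
    over the top-k masked-LM candidates for position i (weights renormalized)."""
    top = sorted(mlm(tokens, i).items(), key=lambda kv: kv[1], reverse=True)[:k]
    total = sum(p for _, p in top)
    expected = sum(
        (p / total) * f(tokens[:i] + [w] + tokens[i + 1:]) for w, p in top
    )
    return f(tokens) - expected


# Hypothetical toy models, for illustration only.
def toy_classifier(tokens: List[str]) -> float:
    return 0.95 if "stepping" in tokens else 0.2


def toy_mlm(tokens: List[str], i: int) -> Dict[str, float]:
    # Pretend BERT is almost certain that the masked word is "stepping".
    return {"stepping": 0.9793, "rolling": 0.0107, "casting": 0.0100}


sentence = ["a", "stepping", "stone", "to", "greatness"]
loo = loo_empty(toy_classifier, sentence, 1)
im = input_marginalization(toy_classifier, toy_mlm, sentence, 1)
print(f"LOO = {loo:.4f}, IM = {im:.4f}")
```

Deleting "stepping" gives LOO = 0.75. IM gives only about 0.0155 (that is, 0.0207 × 0.75), because the top BERT candidate reproduces the original sentence.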

Acknowledgment: This work is supported by the National Science Foundation under Grant No. 1850117, Adobe Research, and the NaphCare Charitable Foundation.

Conference: AACL-IJCNLP 2022 (Asia-Pacific Chapter of the Association for Computational Linguistics). Oral presentation (acceptance rate: 63/554 = 11.4%).

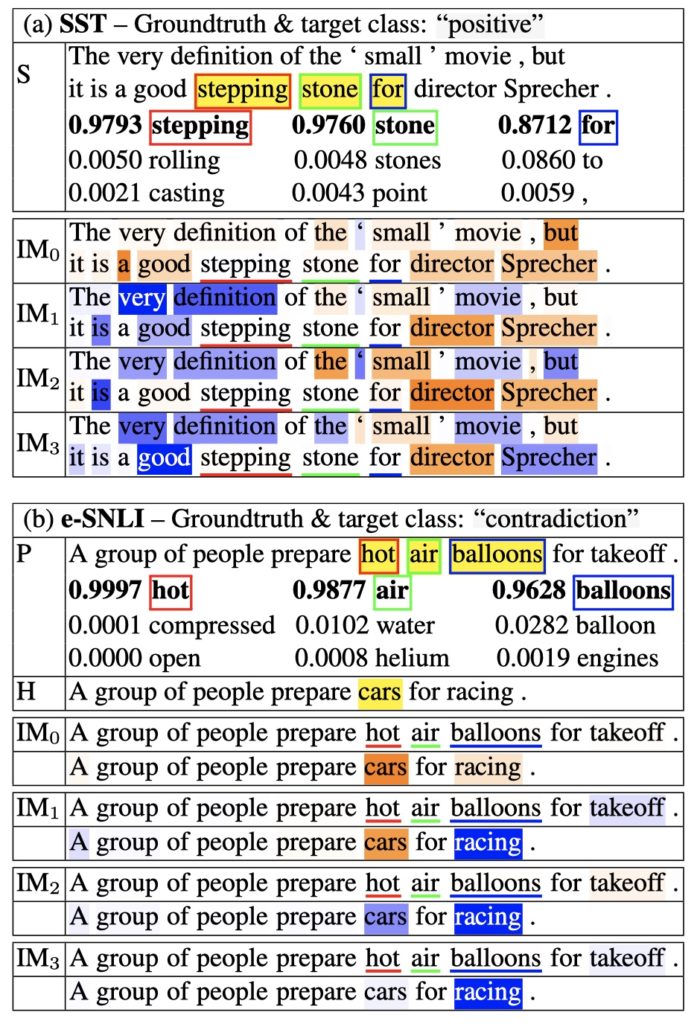

Figure 1: Many words labeled as important by humans, such as “stepping” and “stone” (in SST) or “hot” and “air” (in e-SNLI), are deemed unimportant by Input Marginalization (IM$_0$). Color map: −1 (negative), 0 (neutral), +1 (positive). Even when the classifier’s weights are randomized three times, the IM attributions of these words remain essentially unchanged and near zero (IM$_1$ to IM$_3$). The reason is that these words are highly predictable by BERT (e.g. 0.9793 for “stepping”). Therefore, when marginalizing over the top-$k$ BERT candidates (e.g. “stepping”, “rolling”, “casting”), the IM attributions of these low-entropy words tend to zero. The resulting heatmaps are (1) biased, (2) less accurate, and (3) less plausible than those of Leave-One-Out (LOO$_\text{empty}$).
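The near-zero IM scores above follow from a short bound. As a sketch, assume IM takes the probability-difference form $a_i = f(x) - \sum_{j=1}^{k} q_j\, f(x^{(j)})$. Here $f$ is the classifier's probability for the target class, and $x^{(j)}$ is $x$ with token $x_i$ replaced by the $j$-th BERT candidate. The weights $q_j$ are BERT's likelihoods renormalized over the top-$k$ candidates, so $\sum_j q_j = 1$. These symbols are introduced here for illustration. If the top candidate is the original word, then $x^{(1)} = x$ and:

$$
a_i = \sum_{j=1}^{k} q_j\,\bigl[f(x) - f(x^{(j)})\bigr]
    = \sum_{j=2}^{k} q_j\,\bigl[f(x) - f(x^{(j)})\bigr],
\qquad
|a_i| \le (1 - q_1)\,\max_{j \ge 2}\,\bigl|f(x) - f(x^{(j)})\bigr| \le 1 - q_1 .
$$

The last step uses $f \in [0, 1]$. Renormalizing over the top-$k$ can only raise $q_1$ above BERT's raw likelihood. For “stepping” (0.9793), the attribution therefore satisfies $|a_i| \le 0.0207$ whatever the classifier's weights are. This is consistent with IM$_1$ to IM$_3$ staying near zero after randomization.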

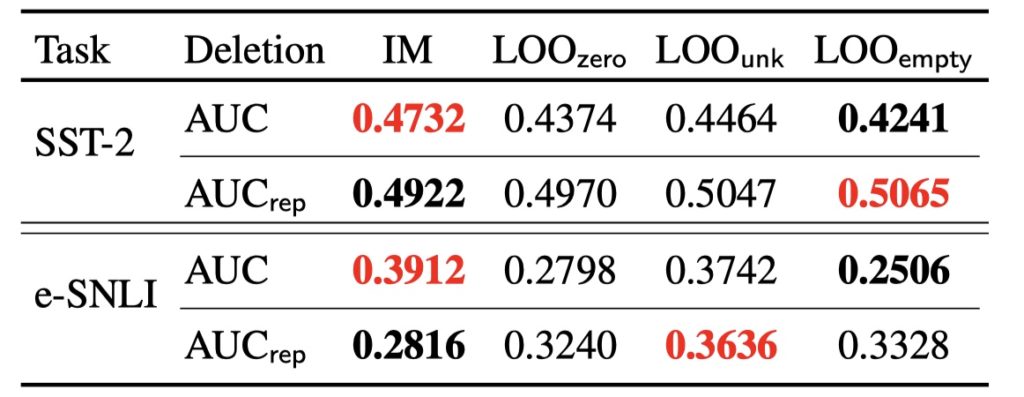

Figure 2: Consistent with Kim et al. (2020), IM is the best method under AUC$_\text{rep}$, outperforming the LOO baselines. Under AUC, however, IM is the worst method. For both metrics, lower is better.
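A deletion-style AUC metric can be sketched as follows, under several assumptions:

- Tokens are removed one at a time, in order of decreasing attribution.
- The classifier's confidence is recorded after each step.
- The area under that curve is computed with the trapezoidal rule over a [0, 1] axis.

Lower is better because a faithful attribution map makes confidence drop fastest. Passing a `replace` function gives an AUC$_\text{rep}$-style variant. `toy_replace` is a hypothetical stand-in for BERT, and the paper's exact step size and normalization may differ.

```python
from typing import Callable, List, Optional

Classifier = Callable[[List[str]], float]
# Hypothetical: returns a replacement word for position j (e.g. BERT's top guess).
Replacer = Callable[[List[str], int], str]


def deletion_auc(
    f: Classifier,
    tokens: List[str],
    attributions: List[float],
    replace: Optional[Replacer] = None,
) -> float:
    """Area under the confidence curve as top-attributed tokens are deleted
    (replace=None) or substituted (replace given). Lower is better."""
    order = sorted(range(len(tokens)), key=lambda j: attributions[j], reverse=True)
    current = list(tokens)
    deleted = set()

    def visible() -> List[str]:
        return [t for j, t in enumerate(current) if j not in deleted]

    scores = [f(visible())]
    for j in order:
        if replace is None:
            deleted.add(j)
        else:
            current[j] = replace(current, j)
        scores.append(f(visible()))
    n = len(order)
    if n == 0:
        return scores[0]
    # Trapezoidal rule with step 1/n on the x-axis (fraction of tokens removed).
    return sum((scores[t] + scores[t + 1]) / 2 for t in range(n)) / n


def toy_classifier(tokens: List[str]) -> float:
    return 0.9 if "great" in tokens else 0.1


def toy_replace(tokens: List[str], j: int) -> str:
    return "fine"


tokens = ["a", "great", "movie"]
faithful = [0.0, 0.8, 0.1]    # ranks "great" first
unfaithful = [0.8, 0.0, 0.1]  # ranks "great" last
auc_good = deletion_auc(toy_classifier, tokens, faithful)
auc_bad = deletion_auc(toy_classifier, tokens, unfaithful)
auc_rep = deletion_auc(toy_classifier, tokens, faithful, toy_replace)
```

The faithful map scores about 0.233 and the unfaithful one about 0.767. Note that the replacement variant depends entirely on what the replacer inserts. If BERT tends to restore the original word, confidence barely drops, and the metric then rewards different methods than the deletion variant does.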

Figure 3: Both LOO and IM substantially outperform the random baseline (c). However, on average, LOO outperforms IM under both ROAR and the ROAR-BERT variant, which uses BERT to replace the top-N tokens instead of deleting them.

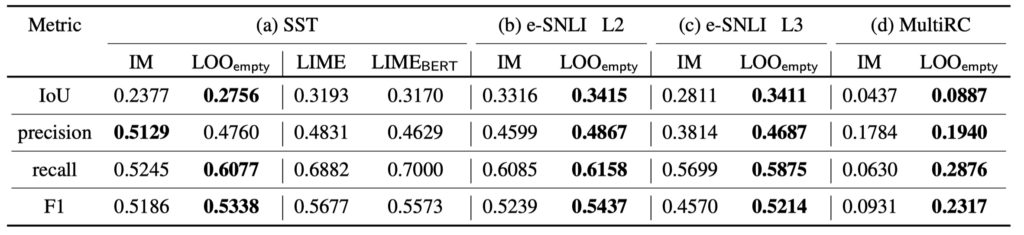
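ROAR (RemOve And Retrain) scores an attribution method in three steps:

1. Remove the top-N attributed tokens from each example.
2. Retrain the classifier on the modified data.
3. Measure the accuracy drop; a larger drop suggests the removed tokens really mattered.

The sketch below covers only the data-transformation step, under stated assumptions. Retraining is omitted, and `replace` is a hypothetical hook standing in for BERT in the ROAR-BERT variant.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical: returns a replacement word for position j (e.g. BERT's top guess).
Replacer = Callable[[List[str], int], str]


def roar_transform(
    tokens: List[str],
    attributions: Sequence[float],
    n: int,
    replace: Optional[Replacer] = None,
) -> List[str]:
    """Delete (ROAR) or substitute (ROAR-BERT-style) the n highest-attributed tokens."""
    ranked = sorted(range(len(tokens)), key=lambda j: attributions[j], reverse=True)
    top = set(ranked[:n])
    if replace is None:
        return [t for j, t in enumerate(tokens) if j not in top]
    out = list(tokens)
    for j in sorted(top):
        out[j] = replace(out, j)
    return out


def roar_dataset(
    examples: List[Tuple[List[str], int]],
    attribution_maps: List[Sequence[float]],
    n: int,
    replace: Optional[Replacer] = None,
) -> List[Tuple[List[str], int]]:
    """Apply roar_transform to every (tokens, label) pair; labels are kept.
    The classifier would then be retrained on the result (not shown)."""
    return [
        (roar_transform(toks, attr, n, replace), label)
        for (toks, label), attr in zip(examples, attribution_maps)
    ]


data = [(["the", "plot", "is", "dull"], 0)]
attrs = [[0.0, 0.2, 0.0, 0.9]]
deleted = roar_dataset(data, attrs, n=2)
replaced = roar_dataset(data, attrs, n=2, replace=lambda toks, j: "[REP]")
print(deleted, replaced)
```

Deletion shortens each example, while replacement keeps its length. This is the only difference between the two ROAR variants at this step.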

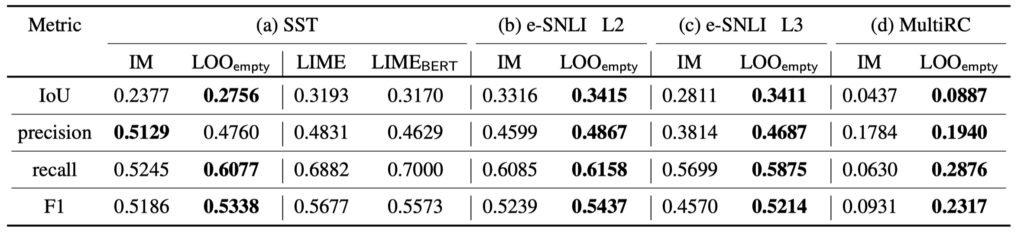

Figure 4: Compared to IM, LOO is substantially more consistent with human annotations on all three datasets (higher is better). The gap between LOO$_\text{empty}$ and IM is ∼3× wider when attribution maps (AMs) are compared with the e-SNLI tokens that at least three annotators label “important” (L3) than when they are compared with L2. LIME$_\text{BERT}$ explanations are slightly less consistent with human highlights than those of LIME, despite their counterfactuals being more realistic.
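A plausibility check of this kind can be sketched as follows. The details are assumptions for illustration, not necessarily the paper's protocol:

- The human mask Ln is the set of tokens labeled “important” by at least n annotators.
- The top-|Ln| attributed tokens are compared with that mask.
- Agreement is measured by intersection over union (IoU).

The attribution values in the example are made up.

```python
from typing import Dict, List, Set, Tuple


def human_mask(annotator_counts: List[int], min_annotators: int) -> Set[int]:
    """Positions labeled important by at least `min_annotators` annotators (e.g. L2, L3)."""
    return {j for j, c in enumerate(annotator_counts) if c >= min_annotators}


def top_k_positions(attributions: List[float], k: int) -> Set[int]:
    """Positions of the k highest attribution scores."""
    ranked = sorted(range(len(attributions)), key=lambda j: attributions[j], reverse=True)
    return set(ranked[:k])


def iou(a: Set[int], b: Set[int]) -> float:
    """Intersection over union; defined as 1.0 when both sets are empty."""
    union = a | b
    return 1.0 if not union else len(a & b) / len(union)


def plausibility(
    attributions: List[float], annotator_counts: List[int], min_annotators: int
) -> float:
    mask = human_mask(annotator_counts, min_annotators)
    return iou(top_k_positions(attributions, len(mask)), mask)


# Toy e-SNLI-style example with made-up numbers: "hot", "air", "balloon", "rises".
counts = [3, 3, 2, 0]
loo_like = [0.7, 0.6, 0.1, 0.0]
im_like = [0.01, 0.02, 0.5, 0.0]
scores: Dict[str, Tuple[float, float]] = {
    name: (plausibility(attr, counts, 2), plausibility(attr, counts, 3))
    for name, attr in [("LOO", loo_like), ("IM", im_like)]
}
print(scores)
```

In this toy case, both maps match L2 perfectly. Against the stricter L3 mask, however, the IM-like map scores only 1/3 because it misses the highly predictable word “hot”.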