The effectiveness of feature attribution methods and its correlation with automatic evaluation scores

Giang Nguyen, Daeyoung Kim, Anh Nguyen


Explaining the decisions of an Artificial Intelligence (AI) model is increasingly critical in many real-world, high-stakes applications. Hundreds of papers have either proposed new feature attribution methods or discussed or harnessed these tools in their work. However, despite humans being the target end-users, most attribution methods were only evaluated on proxy automatic-evaluation metrics (Zhang et al. 2018; Zhou et al. 2016; Petsiuk et al. 2018). In this paper, we conduct the first user study to measure how effectively attribution maps assist humans in ImageNet classification and Stanford Dogs fine-grained classification, on both natural and adversarial images (i.e., images containing adversarial perturbations). Overall, feature attribution is, surprisingly, no more effective than showing humans nearest training-set examples. On the harder task of fine-grained dog categorization, presenting attribution maps to humans does not help but instead hurts the performance of human-AI teams compared to AI alone. Importantly, we found that automatic attribution-map evaluation measures correlate poorly with actual human-AI team performance. Our findings encourage the community to rigorously test their methods on downstream human-in-the-loop applications and to rethink the existing evaluation metrics.

Acknowledgment: This research was supported by the MSIT Grant No. IITP-2021-2020-0-01489, the Technology Innovation Program of MOTIE Grant No. 2000682, NSF Grant No. 1850117, and a donation from NaphCare Foundation.

Conference: NeurIPS 2021 (acceptance rate: 26% out of 9,122 submissions)

7-min NeurIPS 2021 conference presentation:

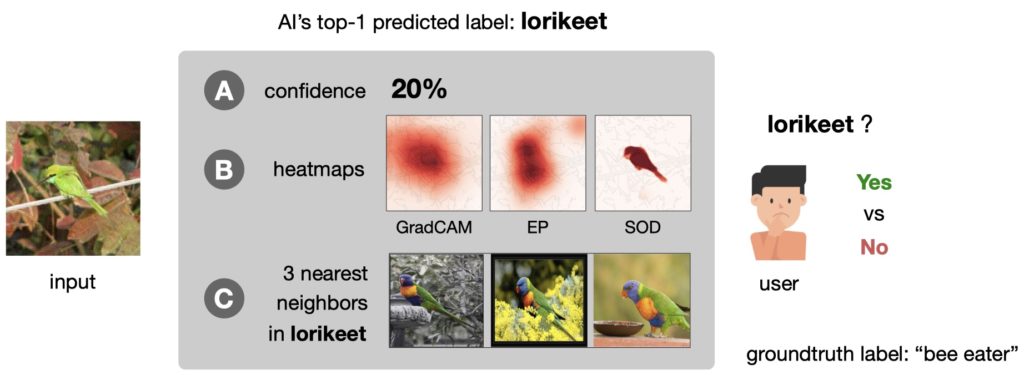

Figure 1: Given an input image, its top-1 predicted label (here, lorikeet), and the confidence score (A), we asked users to decide (Yes or No) whether the predicted label is correct (here, the correct answer is No). User accuracy in this setting is the performance of the human-AI team without visual explanations. We compared this baseline with treatments in which either one attribution map (B) or a set of three nearest neighbors (C) is provided to the user in addition to the confidence score.
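Under the Yes/No protocol of Figure 1, a human-AI team decision is correct when the user accepts a correct AI label or rejects a wrong one. The minimal sketch below scores trials that way; the `Trial` record, the function names, and the toy labels are hypothetical illustrations, not the authors' code or study data.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Trial:
    """One user decision on one image (hypothetical record format)."""
    ai_label: str       # top-1 label predicted by the classifier
    true_label: str     # ground-truth label of the image
    user_accepts: bool  # True if the user answered "Yes" (label is accurate)


def is_team_correct(trial: Trial) -> bool:
    # The team is right when the user accepts a correct AI label
    # or rejects a wrong one.
    ai_correct = trial.ai_label == trial.true_label
    return trial.user_accepts == ai_correct


def team_accuracy(trials: List[Trial]) -> float:
    """Percentage of trials in which the human-AI team decision was correct."""
    if not trials:
        raise ValueError("need at least one trial")
    return 100.0 * sum(is_team_correct(t) for t in trials) / len(trials)


# Toy, made-up trials (not data from the study).
trials = [
    Trial("lorikeet", "bird_x", user_accepts=False),    # correct rejection
    Trial("beagle", "beagle", user_accepts=True),       # correct acceptance
    Trial("tabby", "tiger_cat", user_accepts=True),     # wrong acceptance
    Trial("goldfish", "goldfish", user_accepts=False),  # wrong rejection
]
print(team_accuracy(trials))  # 50.0
```

Scoring against AI correctness (rather than against the label itself) is what lets the same metric cover the no-explanation baseline and every explanation treatment.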

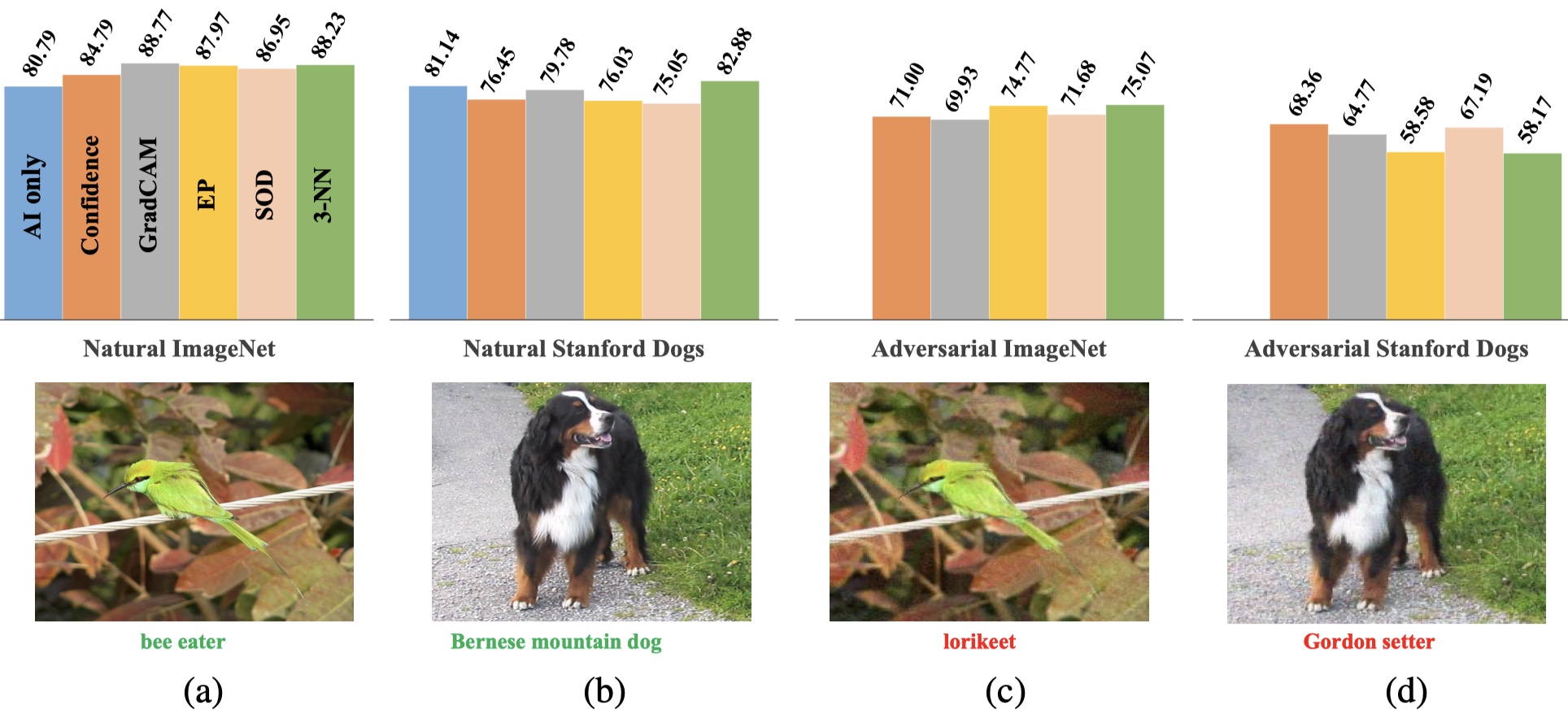

Figure 2: 3-NN is consistently among the most effective explanations for improving human-AI team accuracy (%) on natural ImageNet images (a), natural Dogs images (b), and adversarial ImageNet images (c). On the challenging adversarial Dogs images (d), showing confidence scores alone helps humans detect the AI's mistakes better than showing confidence scores together with one visual explanation. Below each sample image is the top-1 label predicted by the classifier (which may be correct or wrong).

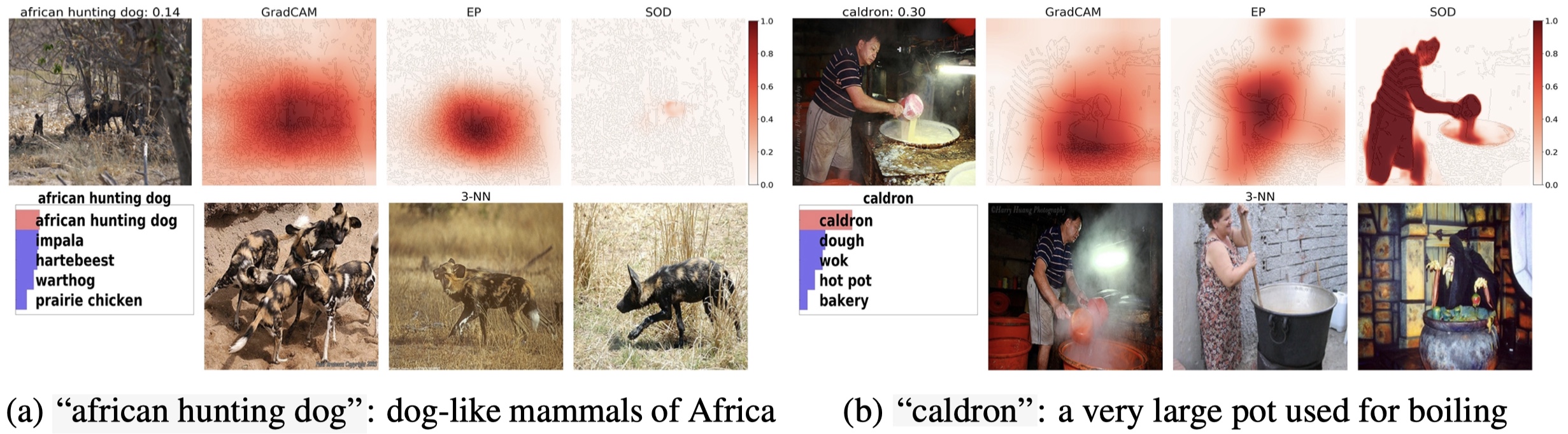

Figure 3: Hard images that were correctly accepted only by 3-NN users, not by users of GradCAM, EP, or SOD. We show the input image, its top-5 classification outputs, the three heatmaps (GradCAM, EP, SOD), and the 3-NN images. Although the animals in the input image are partially occluded, 3-NN provided close-up examples of African hunting dogs, enabling users to correctly decide on the label (a). Choosing a single label for a scene with multiple objects is challenging; however, 3-NN retrieved a nearest example showing a very similar scene, enabling users to accept the AI's correct decision (b).
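The 3-NN treatment shows users the training-set images closest to the input. A minimal sketch of such retrieval follows, assuming each image is already represented by a feature vector (for example, an embedding from the classifier) and that closeness is measured by cosine similarity. The feature space, the similarity measure, and all names here are hypothetical and not necessarily the paper's setup.

```python
import math
from typing import List, Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (0.0 if either is zero)."""
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return 0.0 if na == 0 or nb == 0 else dot / (na * nb)


def k_nearest_neighbors(query, train_feats, train_ids, k=3) -> List[str]:
    """Ids of the k training examples most similar to the query (brute force)."""
    scored = sorted(
        zip(train_feats, train_ids),
        key=lambda pair: cosine_similarity(query, pair[0]),
        reverse=True,  # most similar first
    )
    return [img_id for _, img_id in scored[:k]]


# Toy 2-D "features"; real embeddings would be high-dimensional.
train_feats = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.7, 0.7]]
train_ids = ["a", "b", "c", "d"]
print(k_nearest_neighbors([1.0, 0.05], train_feats, train_ids))  # ['a', 'b', 'd']
```

This brute-force scan costs one similarity per training image per query; over a training set the size of ImageNet's, an approximate nearest-neighbor index would likely be more practical.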

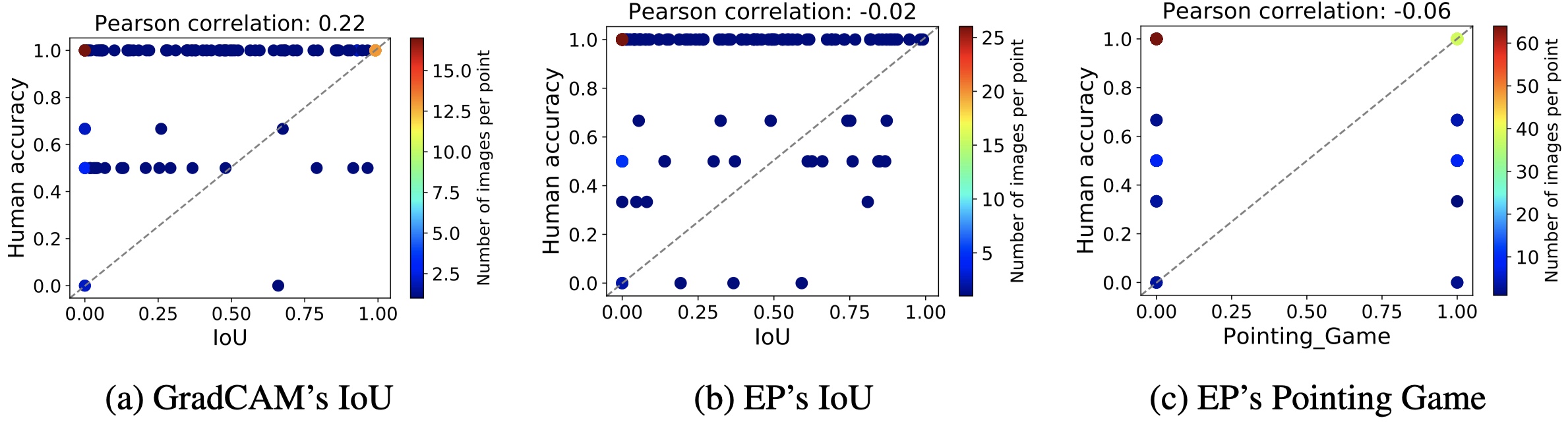

Figure 4: The ImageNet localization performance (here, measured by IoU against human-annotated bounding boxes) of GradCAM (Selvaraju et al. 2017) and EP (Fong et al. 2019) attribution maps correlates poorly with human-AI team accuracy (y-axis) when users rely on these heatmaps for image classification. Humans can often make correct decisions even when the heatmaps poorly localize the object (see the range 0.0–0.2 on the x-axis).
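Figure 4 relates a localization score (IoU) to team accuracy. The sketch below derives a box from a heatmap, computes its IoU against a ground-truth box, and offers a Pearson correlation that could be applied to per-image IoU and per-image team accuracy. The box-extraction rule (keep pixels at or above 50% of the peak value) and the choice of Pearson correlation are assumptions for illustration; the exact procedure used in the paper is not stated here.

```python
import math
from typing import List, Sequence, Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), inclusive pixel coordinates


def heatmap_to_box(heatmap: Sequence[Sequence[float]], threshold: float = 0.5) -> Box:
    """Tightest box around pixels >= threshold * peak (hypothetical rule).

    Assumes a non-negative heatmap with at least one positive value.
    """
    peak = max(max(row) for row in heatmap)
    xs: List[int] = []
    ys: List[int] = []
    for y, row in enumerate(heatmap):
        for x, value in enumerate(row):
            if value >= threshold * peak:
                xs.append(x)
                ys.append(y)
    return (min(xs), min(ys), max(xs), max(ys))


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two inclusive pixel boxes."""
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0 + 1) * max(0, iy1 - iy0 + 1)

    def area(r: Box) -> int:
        return (r[2] - r[0] + 1) * (r[3] - r[1] + 1)

    return inter / (area(a) + area(b) - inter)


def pearson(xs: Sequence[float], ys: Sequence[float]) -> float:
    """Pearson correlation, e.g. per-image IoU vs. per-image team accuracy."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)


heatmap = [
    [0.0, 0.1, 0.0, 0.0],
    [0.0, 0.9, 1.0, 0.0],
    [0.0, 0.6, 0.8, 0.0],
    [0.0, 0.0, 0.0, 0.0],
]
pred = heatmap_to_box(heatmap)   # (1, 1, 2, 2)
print(iou(pred, (1, 1, 3, 3)))   # 4 / 9, about 0.444
```

A weak correlation between such IoU values and team accuracy is the pattern Figure 4 reports; the toy heatmap above only illustrates the computation and is not study data.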

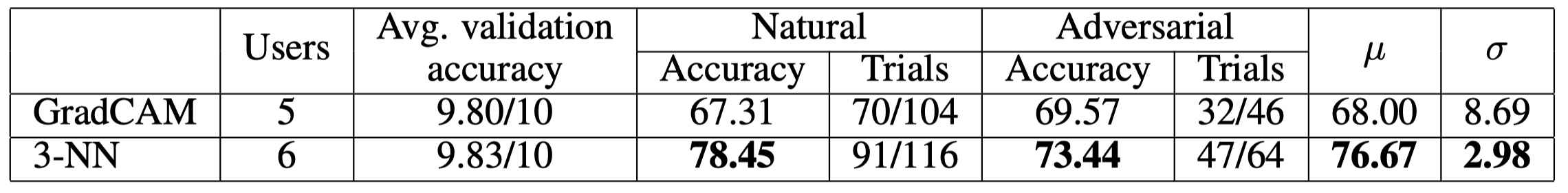

Table 1: 3-NN is far more effective than GradCAM in human-AI team image classification of both natural and adversarial images.

Shown are the mean ($\mu$) and standard deviation ($\sigma$) of per-user accuracy over all images.
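Table 1 summarizes each treatment by the mean and standard deviation of per-user accuracy. A minimal sketch of that aggregation follows, assuming each record is a hypothetical `(user_id, team_correct)` pair. Whether the paper uses the sample or the population standard deviation is not stated here; this sketch uses the sample version (`statistics.stdev`).

```python
import statistics
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_user_accuracy(records: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Accuracy (%) of each user over the images they answered."""
    hits: Dict[str, int] = defaultdict(int)
    totals: Dict[str, int] = defaultdict(int)
    for user, correct in records:
        totals[user] += 1
        hits[user] += int(correct)
    return {u: 100.0 * hits[u] / totals[u] for u in totals}


def summarize(records: Iterable[Tuple[str, bool]]) -> Tuple[float, float]:
    """Mean and sample standard deviation of per-user accuracy."""
    accs = list(per_user_accuracy(records).values())
    mu = statistics.mean(accs)
    sigma = statistics.stdev(accs) if len(accs) > 1 else 0.0
    return mu, sigma


# Made-up records for two hypothetical users (not study data).
records = [
    ("u1", True), ("u1", True), ("u1", False), ("u1", True),
    ("u2", True), ("u2", False), ("u2", False), ("u2", True),
]
print(summarize(records))
```

Averaging per user first, rather than pooling all answers, gives each participant equal weight regardless of how many images they labeled.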