Deep Neural Networks are Easily Fooled: High Confidence Predictions for Unrecognizable Images

Anh Nguyen, Jason Yosinski, Jeff Clune

Links: pdf | code | project page

Deep neural networks (DNNs) have recently been achieving state-of-the-art performance on a variety of pattern-recognition tasks, most notably visual classification problems. Given that DNNs are now able to classify objects in images with near-human-level performance, questions naturally arise as to what differences remain between computer and human vision. A recent study (Szegedy et al., 2014) revealed that changing an image (e.g. of a lion) in a way imperceptible to humans can cause a DNN to label the image as something else entirely (e.g. mislabeling a lion as a library). Here we show a related result: it is easy to produce images that are completely unrecognizable to humans, but that state-of-the-art DNNs believe to be recognizable objects with 99.99% confidence (e.g. labeling with certainty that white noise static is a lion). Specifically, we take convolutional neural networks trained to perform well on either the ImageNet or MNIST datasets and then use evolutionary algorithms or gradient ascent to find images that the DNNs label with high confidence as belonging to each dataset class. We call the resulting images, which are totally unrecognizable to human eyes yet classified with near certainty as familiar objects, “fooling images” (more generally, fooling examples). Our results shed light on interesting differences between human vision and current DNNs, and raise questions about the generality of DNN computer vision.
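The gradient-ascent route mentioned in the abstract can be illustrated with a minimal sketch. It is not the paper's code: the classifier here is a hypothetical linear softmax model with random weights standing in for a trained DNN, and the names `make_toy_classifier`, `predict`, and `gradient_ascent_fooling_image`, along with the step size and iteration count, are assumptions chosen for illustration. The idea it shows is only that, starting from noise, one can follow the gradient of the target class's log-probability with respect to the pixels.

```python
import math
import random


def make_toy_classifier(n_classes, n_pixels, seed=0):
    """Random linear weights; a hypothetical stand-in for a trained DNN."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_pixels)] for _ in range(n_classes)]


def predict(weights, image):
    """Softmax class probabilities for a flat list of pixel values."""
    logits = [sum(w * x for w, x in zip(row, image)) for row in weights]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def gradient_ascent_fooling_image(weights, target, steps=300, lr=0.05, seed=1):
    """Start from noise and climb the gradient of log p(target | image)."""
    n_pixels = len(weights[0])
    rng = random.Random(seed)
    image = [rng.random() for _ in range(n_pixels)]  # uniform noise in [0, 1)
    for _ in range(steps):
        probs = predict(weights, image)
        for j in range(n_pixels):
            # For a linear softmax model:
            # d log p_target / d x_j = W[target][j] - sum_k p_k * W[k][j]
            expected = sum(p * row[j] for p, row in zip(probs, weights))
            step = lr * (weights[target][j] - expected)
            image[j] = min(1.0, max(0.0, image[j] + step))  # keep pixels in [0, 1]
    return image, predict(weights, image)[target]


# Usage: search for an input that the toy model assigns to class 3.
weights = make_toy_classifier(n_classes=10, n_pixels=64)
image, conf = gradient_ascent_fooling_image(weights, target=3)
print(f"confidence for class 3: {conf:.4f}")
```

With a real network the gradient would come from backpropagation through the model rather than from the closed-form expression used here, which holds only for this linear toy.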

Conference: CVPR 2015. Oral presentation (3.3% acceptance rate)

Awards:

- Community Top Paper Award (one of 3 papers awarded, out of 2103)

- 63rd most influential paper worldwide in 2015 via AltMetric.

- Best Long Research video & Most Educational Research video at IJCAI 2015

- Best Research video at AAAI 2016

Press coverage:

- Nature. Can we open the black box of AI?

- American Scientist. Computer Vision and Computer Hallucinations

- The Economist. Rise of the machines

- MIT Technology Review. “Smart” Software Can Be Tricked into Seeing What Isn’t There

- Scientific American Mind. Teaching machines to see

- The Atlantic. How to Fool a Computer With Optical Illusions

Videos: 5-min summary | Talk at CVPR

Downloads: High-quality images from the paper | 10,000 fooling CPPN images


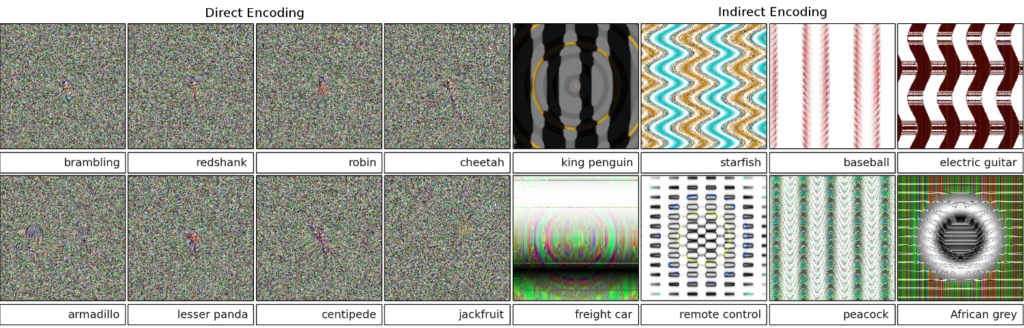

Figure 1: Evolved images that are unrecognizable to humans, but that state-of-the-art DNNs trained on ImageNet believe with >= 99.6% certainty to be a familiar object. This result highlights differences between how DNNs and humans recognize objects.

Left: Directly encoded images. Right: Indirectly encoded images.

Figure 2: Evolving images to match DNN classes produces a tremendous diversity of images. The mean DNN confidence score for these images is 99.12% for the listed class, meaning that the DNN believes with near-certainty that the image is that type of thing. Shown are images selected to showcase diversity from 5 independent evolutionary runs. The images shed light on what the DNN cares about, and what it does not, when classifying an image. For example, to the DNN a school bus is alternating yellow and black lines; it does not need to have a windshield or wheels.
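To give a feel for evolving a directly encoded image (one gene per pixel), here is a minimal sketch under loudly stated assumptions. It uses a simple (1+1) hill climber, which is a much simpler scheme than the evolutionary algorithm used in the paper, and the "DNN" is again a hypothetical random linear softmax classifier. The names `target_confidence` and `evolve_fooling_image`, the mutation rate, and the generation count are all illustrative choices, not values from the paper.

```python
import math
import random


def target_confidence(weights, image, target):
    """Softmax probability that a toy linear classifier assigns to `target`."""
    logits = [sum(w * x for w, x in zip(row, image)) for row in weights]
    top = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in logits]
    return exps[target] / sum(exps)


def evolve_fooling_image(weights, target, generations=3000, mutation_rate=0.05, seed=1):
    """(1+1) hill climber: keep a mutated child if its confidence is no worse."""
    n_pixels = len(weights[0])
    rng = random.Random(seed)
    parent = [rng.random() for _ in range(n_pixels)]  # start from noise
    best = target_confidence(weights, parent, target)
    for _ in range(generations):
        # Direct encoding: each pixel is its own gene and is resampled
        # uniformly in [0, 1) with a small, independent probability.
        child = [rng.random() if rng.random() < mutation_rate else p for p in parent]
        score = target_confidence(weights, child, target)
        if score >= best:  # fitness is the classifier's confidence in the target class
            parent, best = child, score
    return parent, best


# Usage: a hypothetical 5-class toy model over 100 "pixels".
toy_rng = random.Random(0)
toy_weights = [[toy_rng.gauss(0.0, 1.0) for _ in range(100)] for _ in range(5)]
fooling_image, fooling_conf = evolve_fooling_image(toy_weights, target=2)
print(f"confidence for class 2: {fooling_conf:.4f}")
```

The only signal the search uses is the classifier's confidence score, so nothing pushes the result toward looking like the target object to a human. An indirect encoding would replace the per-pixel genome with a compact generator of the image; that is not shown here.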

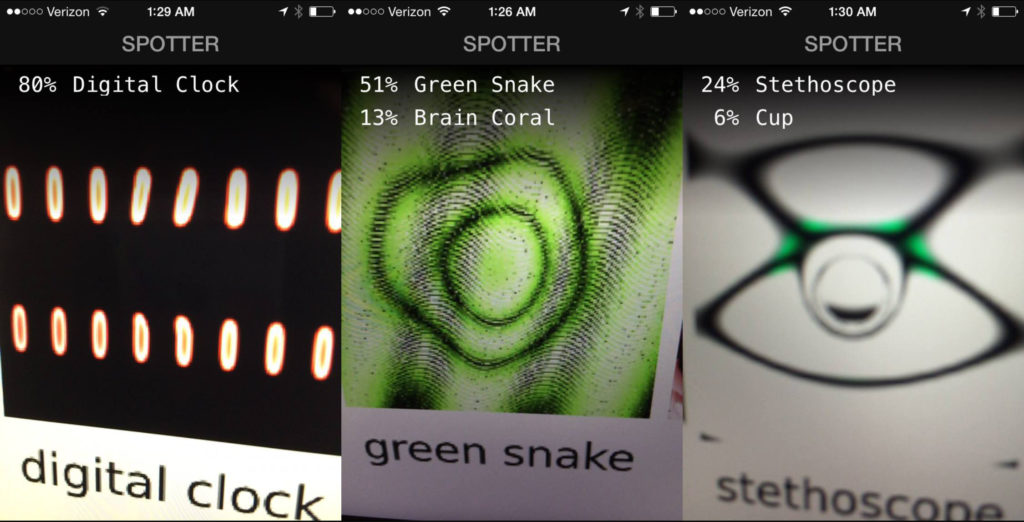

Figure 3: Dileep George told us (via Alexander Terekhov) that he pointed an image recognition iPhone app powered by Deep Learning at our “fooling images” displayed on a computer screen and the iPhone/app was equally fooled! That’s very interesting given the different lighting, angle, camera lens, etc. It shows how robustly the DNN feels these images are the genuine articles.

More info, including FAQs: here