Understanding Generative AI Capabilities in Everyday Image Editing Tasks

Mohammad Reza Taesiri, Brandon Collins, Logan Bolton, Viet Dac Lai, Franck Dernoncourt, Trung Bui, Anh Totti Nguyen

2025


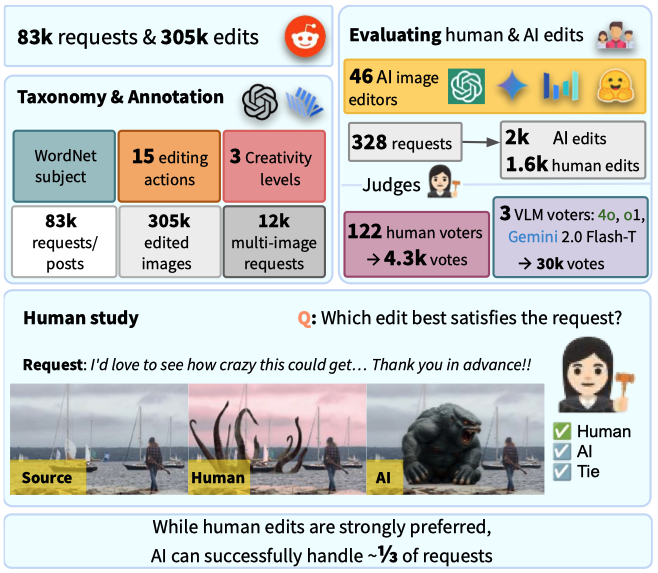

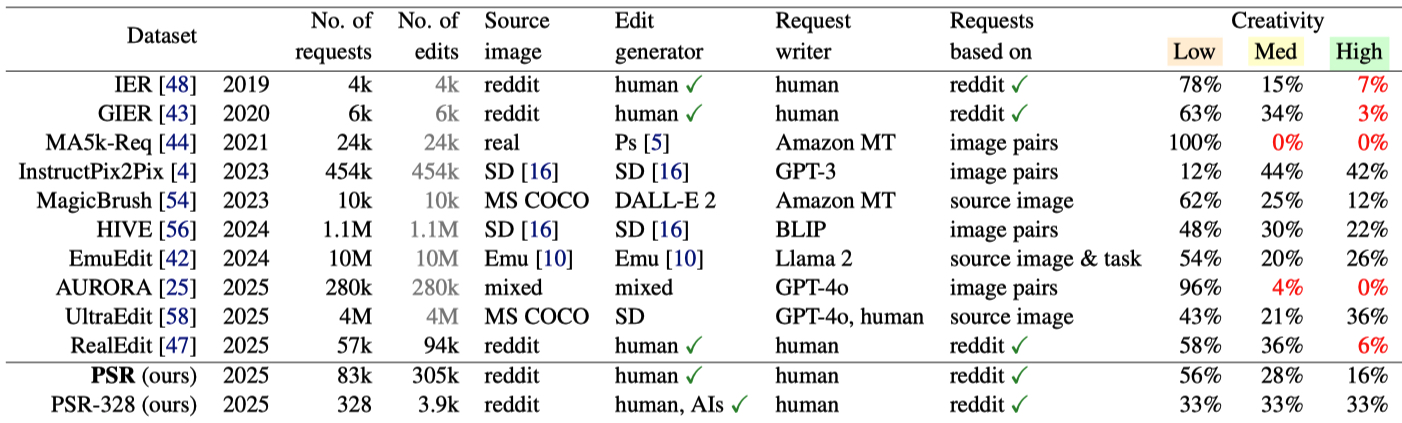

Generative AI (GenAI) holds significant promise for automating everyday image editing tasks, especially following the release of GPT-4o on March 25, 2025. However, what subjects do people most often want edited? What kinds of editing actions do they want to perform (e.g., removing or stylizing the subject)? Do people prefer precise edits with predictable outcomes or highly creative ones? By understanding the characteristics of real-world requests and the corresponding edits made by freelance photo-editing wizards, can we draw lessons for improving AI-based editors and determine which types of requests AI editors can currently handle successfully? In this paper, we present a unique study addressing these questions by analyzing 83k requests from the past 12 years (2013-2025) on the /r/photoshoprequest Reddit community, which collected 305k PSR-wizard edits. According to human ratings, only about 33% of requests can be fulfilled by the best AI editors (including GPT-4o, Gemini-2.0-Flash, and SeedEdit). Interestingly, AI editors perform worse on low-creativity requests that require precise editing than on more open-ended tasks. They often struggle to preserve the identity of people and animals, and frequently make touch-ups that were not requested. In contrast, VLM judges (e.g., o1) rate edits differently from human judges and may prefer AI edits over human edits. Code and qualitative examples are available at: https://psrdataset.github.io.

Acknowledgment: This work is supported by the National Science Foundation under Grant No. 2145767, Adobe Research, and the NaphCare Charitable Foundation.

Links:

- PSR-328 results: All rated pairs, including source images, textual requests, Photoshop-wizard edits, AI edits, and ratings.

- PSR dataset (83k requests from /r/photoshoprequest)

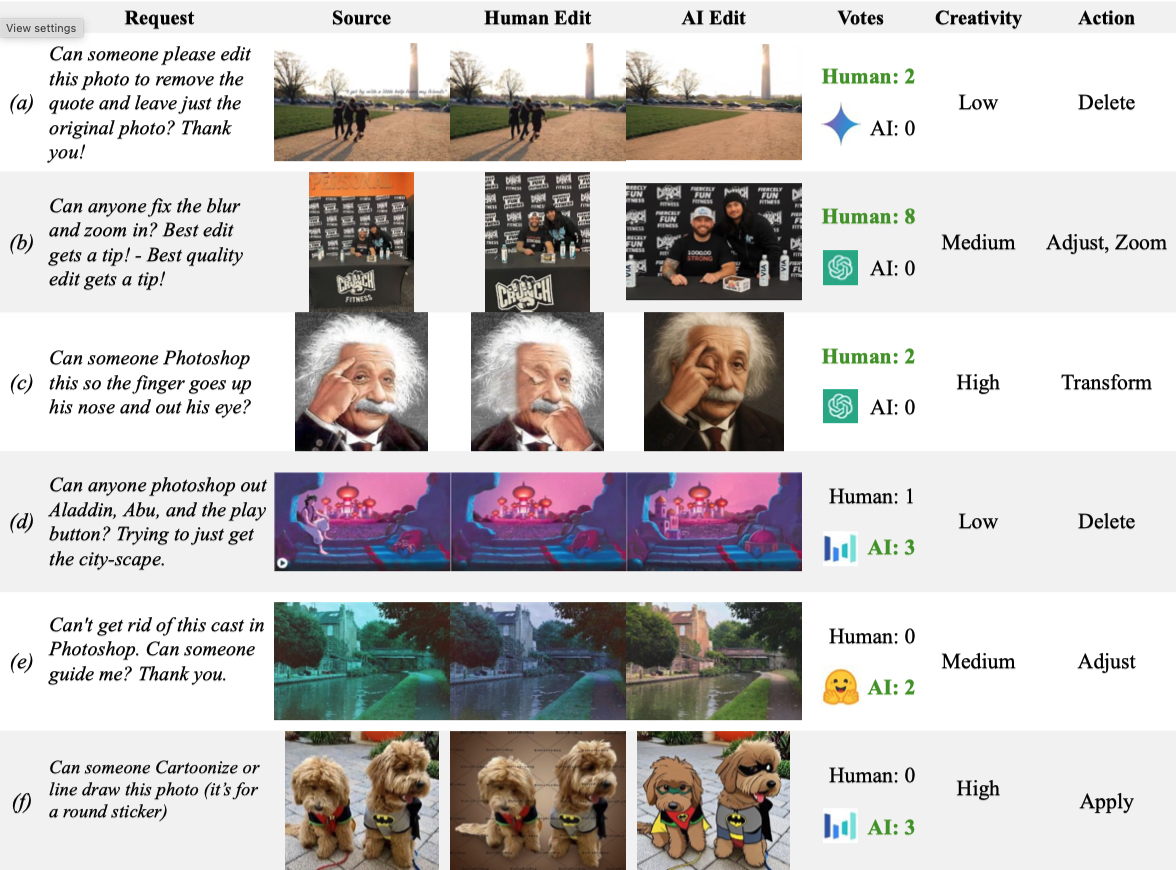

Figure 1: We propose PSR, the largest dataset of real-world image-editing requests and human-made edits. PSR enables the community (and our work) to identify types of requests that can be automated using existing AIs and those that need improvement. PSR is the first dataset to tag all requests with WordNet subjects, real-world editing actions, and creativity levels.

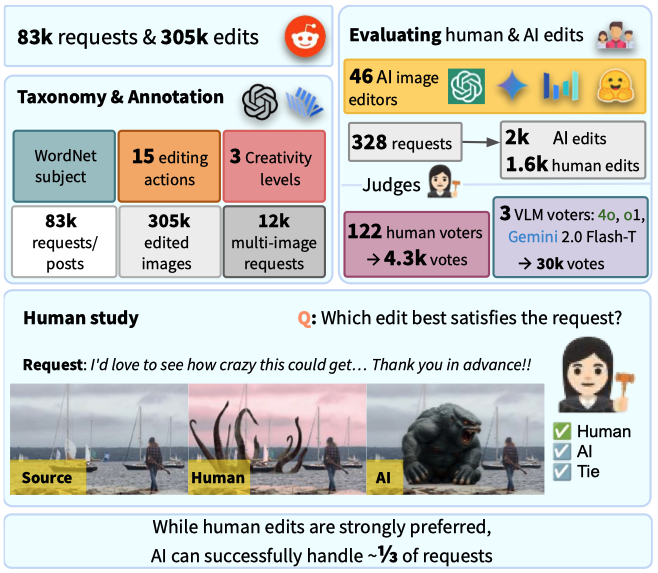

Figure 2: Human reviewers are asked to choose one edit (created by either a Photoshop wizard or an AI editor) or to select Tie.
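
As a rough illustration of how votes from a protocol like the one in Figure 2 could be summarized, the sketch below tallies three-way votes into shares. It is a minimal sketch under stated assumptions, not the authors' evaluation code: the function name `tally_votes`, the labels `"human"`, `"ai"`, and `"tie"`, and the example votes are all hypothetical.

```python
from collections import Counter

# Hypothetical label set; the paper's actual rating schema may differ.
ALLOWED_LABELS = ("ai", "human", "tie")


def tally_votes(votes):
    """Return the fraction of votes for each label in ALLOWED_LABELS."""
    counts = Counter(votes)
    unknown = set(counts) - set(ALLOWED_LABELS)
    if unknown:
        raise ValueError(f"unexpected vote labels: {sorted(unknown)}")
    total = sum(counts.values())
    if total == 0:
        # No votes yet: report zero shares rather than dividing by zero.
        return {label: 0.0 for label in ALLOWED_LABELS}
    return {label: counts[label] / total for label in ALLOWED_LABELS}


# Made-up votes for illustration only; not data from the paper.
example_votes = ["human", "ai", "tie", "human"]
shares = tally_votes(example_votes)
print(shares)
```

Treating ties as their own category, rather than splitting them between the two sides, keeps the human and AI shares directly interpretable; whether the paper aggregates ties this way is not stated in this section.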

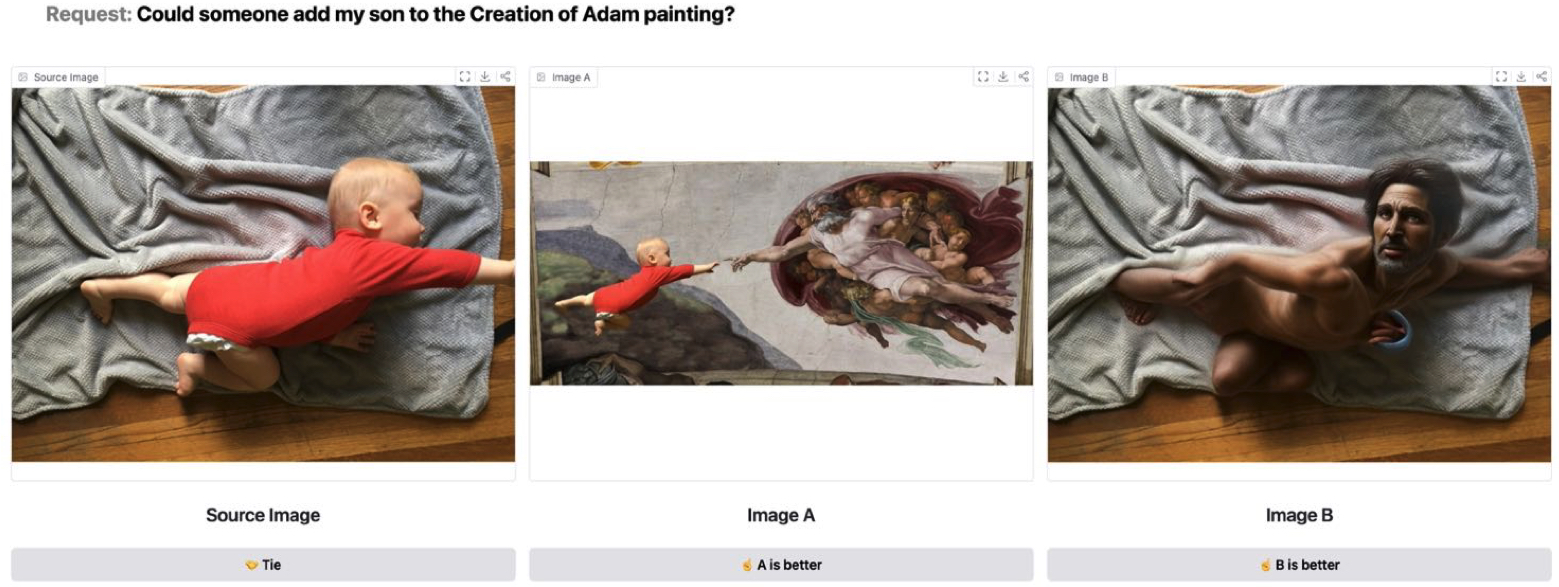

Figure 3: PSR is the largest-scale dataset of real-world requests and PSR-wizard edits.

Figure 4: Example cases from the PSR dataset where human raters preferred Photoshop-wizard edits over AI edits (a-c) and cases where AI edits were preferred (d-f). (a) The human edit completes the request, but the AI edit removes the people, which was not requested. (b) The human edit completes the request, but the AI edit generates a similar image with people resembling those in the source image, although with different identities. (c) The human edit completes the request, but the AI edit does not because the finger does not go through the nose and out the eye. (d) Both edits make the requested removals, but the AI edit makes the image sharper and adds a house in the background (which was not requested). (e) The human edit reduces the color cast but leaves behind a bluish tint and muted tones, while the AI edit successfully restores realistic colors and contrast. (f) The human edit removes the background and applies a soft photo filter that lacks stylization, while the AI edit transforms the dogs into bold, clean cartoons. More results are available at: https://huggingface.co/spaces/PSRDataset/PSR-Battle-Results