PiC: A Phrase-in-Context Dataset for Phrase Understanding and Semantic Search

Thang M. Pham, Seunghyun Yoon, Trung Bui, Anh Nguyen


Since BERT (Devlin et al., 2018), learning contextualized word embeddings has been a de facto standard in NLP. However, progress in learning contextualized phrase embeddings is hindered by the lack of a human-annotated, phrase-in-context benchmark. To fill this gap, we propose PiC, a dataset of ~28K noun phrases accompanied by their contextual Wikipedia pages, together with a suite of three tasks of increasing difficulty for evaluating the quality of phrase embeddings. We find that training on our dataset improves ranking models' accuracy and, remarkably, pushes Question Answering (QA) models to near-human accuracy: 95% Exact Match (EM) on semantic search given a query phrase and a passage. Interestingly, we find evidence that this impressive performance arises because the QA models learn to better capture the common meaning of a phrase regardless of its actual context. That is, on our Phrase Sense Disambiguation (PSD) task, SotA model accuracy drops substantially (to 60% EM), as the models fail to differentiate between two different senses of the same phrase in two different contexts. Further results on our 3-task PiC benchmark reveal that learning contextualized phrase embeddings remains an interesting, open challenge.
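The results above are reported in Exact Match (EM). The following is a minimal sketch of EM scoring. It assumes SQuAD-style answer normalization (lowercasing, stripping punctuation and English articles, collapsing whitespace). The function names are hypothetical, and the official PiC evaluation code may normalize answers differently.

```python
import re
import string

# Assumption: SQuAD-style normalization; PiC's own evaluation code may differ.
_PUNCT = set(string.punctuation)


def normalize_answer(text: str) -> str:
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in _PUNCT)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())


def exact_match(prediction: str, gold_answers: list[str]) -> float:
    """1.0 if the normalized prediction equals any normalized gold answer, else 0.0."""
    pred = normalize_answer(prediction)
    return float(any(pred == normalize_answer(g) for g in gold_answers))


def em_score(predictions: list[str], golds: list[list[str]]) -> float:
    """Corpus-level EM in percent, averaged over examples."""
    if len(predictions) != len(golds):
        raise ValueError("predictions and golds must have the same length")
    if not predictions:
        return 0.0
    total = sum(exact_match(p, g) for p, g in zip(predictions, golds))
    return 100.0 * total / len(predictions)


# Usage: one exact hit (after normalization) and one miss -> 50.0
score = em_score(["the Big Apple", "gold coin"], [["big apple"], ["golden coin"]])
```

EM is deliberately strict: a predicted span that overlaps the gold phrase but adds or drops a single content word scores 0.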

Acknowledgment: This work is supported by Adobe Research, the National Science Foundation under Grant Nos. 1850117 and 2145767, and donations from the NaphCare foundation.

Conference: 17th Conference of the European Chapter of the Association for Computational Linguistics (EACL 2023). Acceptance rate: 24.1% (281/1166).

⬇️ Download dataset, evaluation code, and dataset construction code: phrase-in-context.github.io

🌟 Interactive Demo 🌟 https://aub.ie/phrase-search

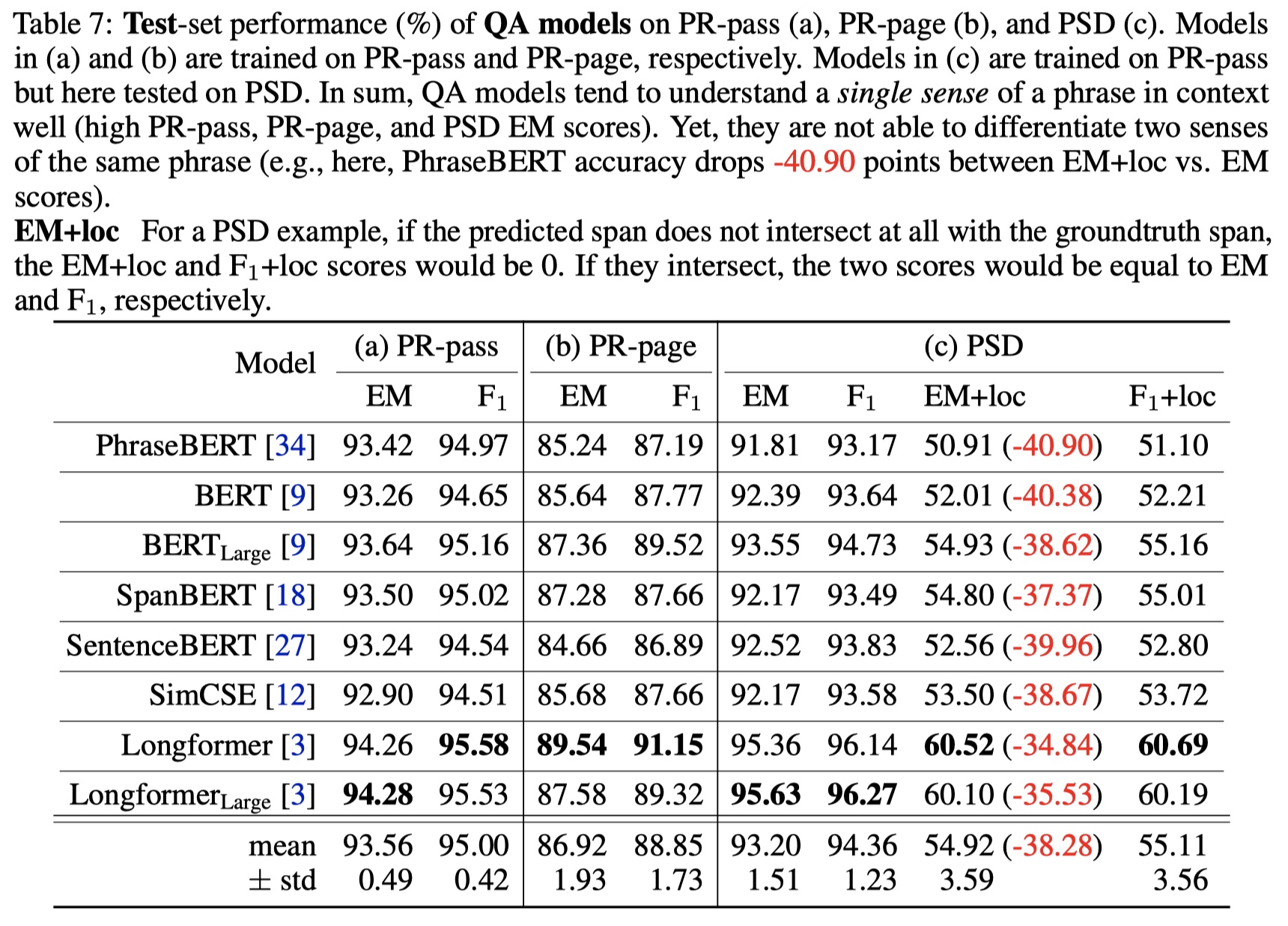

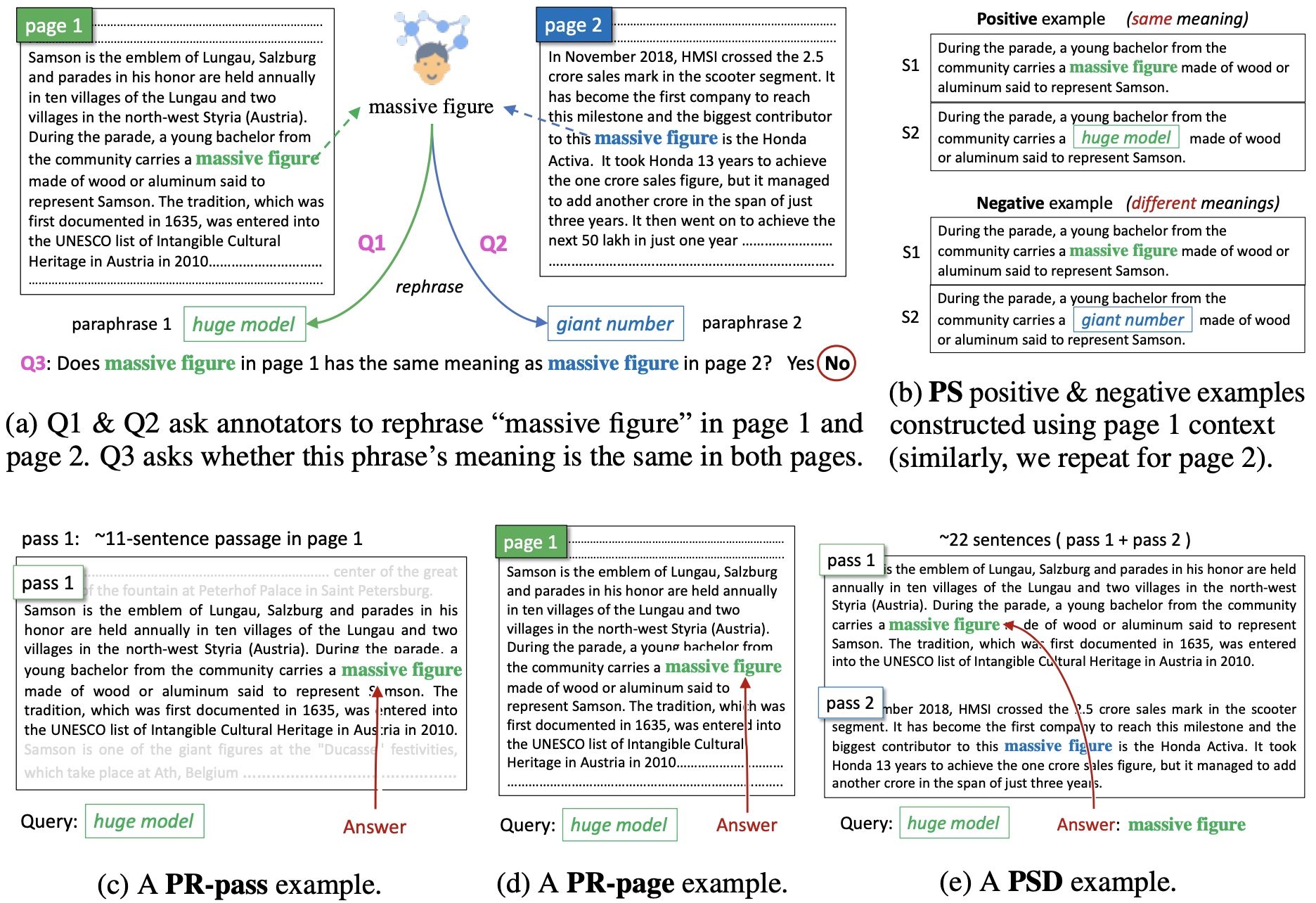

Figure 1: Given a phrase, two associated Wikipedia pages, and expert annotations, i.e., answers to Q1, Q2, and Q3 (a), we construct two pairs of positive and negative examples for Phrase Similarity (PS) (b), a Phrase Retrieval example at the passage level (PR-pass) (c), a Phrase Retrieval example at the page level (PR-page) (d), and, only if the answer to Q3 is No, a PSD example (e).
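The conditional rule in Figure 1, where a PSD example is produced only when the answer to Q3 is No, can be sketched as a simple filter. The record fields and function names below are hypothetical. We also assume that Q3 asks whether the phrase carries the same meaning in both contexts, which is consistent with PSD targeting two different senses of one phrase. The released dataset construction code may be organized differently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Annotation:
    """Hypothetical record for one phrase annotated in two Wikipedia contexts."""
    phrase: str
    context_1: str       # passage from the first Wikipedia page
    context_2: str       # passage from the second Wikipedia page
    same_meaning: bool   # assumed to be the answer to Q3 (True = "Yes")


@dataclass
class PSDExample:
    """Hypothetical PSD example: one phrase used with two different senses."""
    phrase: str
    context_1: str
    context_2: str


def build_psd_example(ann: Annotation) -> Optional[PSDExample]:
    """Emit a PSD example only when annotators say the senses differ (Q3 = No)."""
    if ann.same_meaning:
        return None
    return PSDExample(ann.phrase, ann.context_1, ann.context_2)


def build_psd_examples(annotations: list[Annotation]) -> list[PSDExample]:
    """Keep only the phrases whose two occurrences have different senses."""
    return [ex for ex in map(build_psd_example, annotations) if ex is not None]


# Usage with placeholder contexts: only the second phrase yields a PSD example.
anns = [
    Annotation("phrase A", "context 1 text", "context 2 text", same_meaning=True),
    Annotation("phrase B", "context 1 text", "context 2 text", same_meaning=False),
]
psd = build_psd_examples(anns)
```

Filtering this way means every PSD example, by construction, requires telling two senses of the same surface phrase apart.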

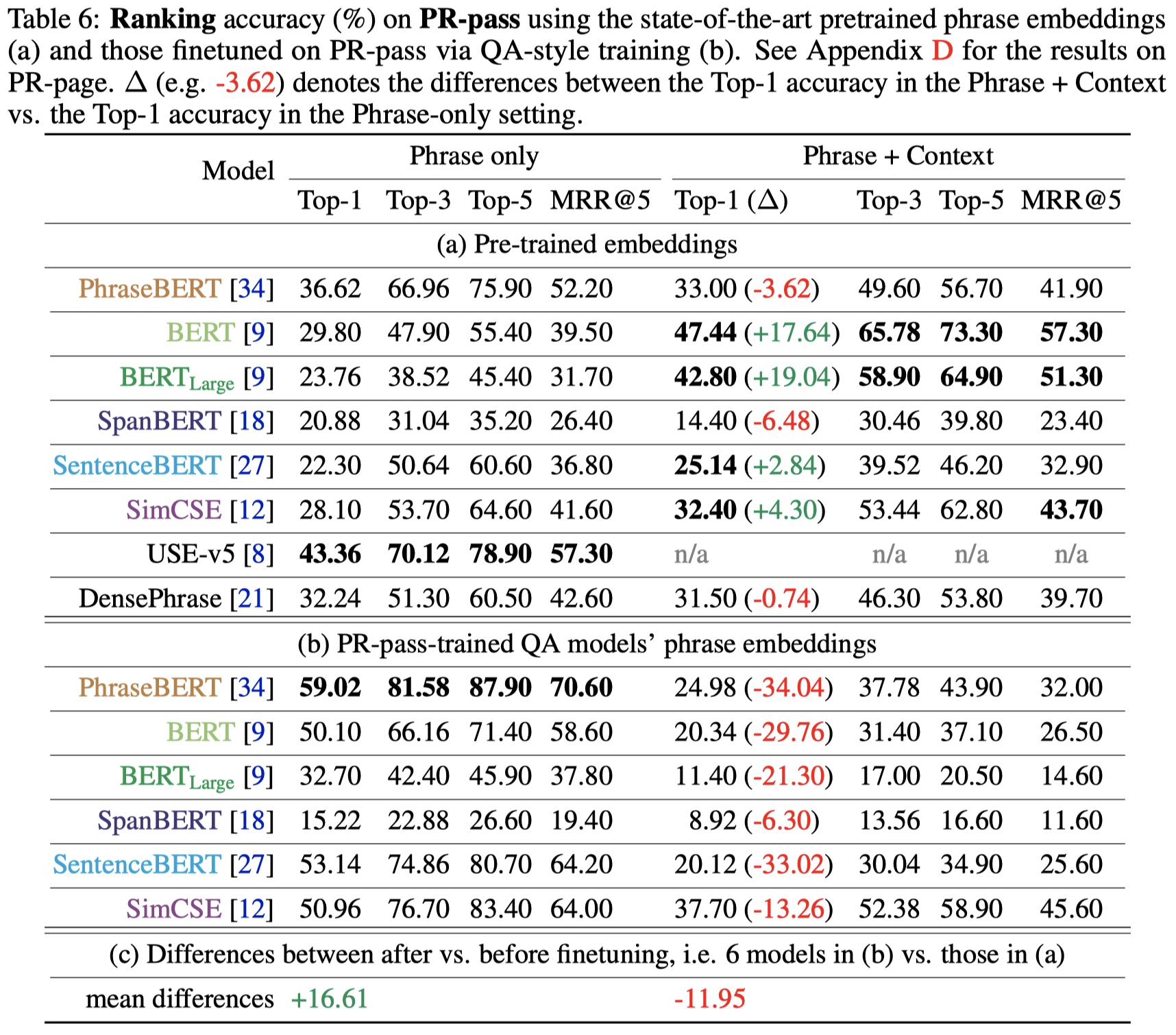

Figure 2: Some state-of-the-art BERT-based embeddings perform substantially better in phrase retrieval when context information is used in computing phrase embeddings than when no context words are included (a). Interestingly, after fine-tuning on PR-pass, the Question Answering (QA) models' embeddings perform substantially better when no context is used (+16.61 points on average). However, these fine-tuned BERT-based models appear to lose the ability to leverage context information, dropping by 11.95 points on average when the context information is used (b). In sum, QA-style training improves non-contextualized but not contextualized phrase embeddings.
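Figure 2 contrasts phrase embeddings computed with and without context. The following is a toy sketch of that distinction. It assumes a phrase embedding is the mean of the token vectors covering the phrase span, a common pooling choice that the caption does not specify. `toy_encode` is a hypothetical stand-in for a BERT-based encoder, not BERT itself, and the 3-d word vectors are made up. Without context, the same phrase always receives the same vector, so it cannot separate two senses.

```python
import math


def find_span(tokens, phrase):
    """Start index of the first occurrence of `phrase` (a token list) in `tokens`."""
    n = len(phrase)
    for i in range(len(tokens) - n + 1):
        if tokens[i:i + n] == phrase:
            return i
    raise ValueError(f"phrase {phrase!r} not found in context")


def toy_encode(tokens, base, window=2, alpha=0.5):
    """Hypothetical contextual encoder: each output vector is the token's base
    vector plus `alpha` times the mean base vector of its neighbours within
    `window` positions (a crude stand-in for self-attention)."""
    out = []
    for i, tok in enumerate(tokens):
        lo, hi = max(0, i - window), min(len(tokens), i + window + 1)
        neigh = [base[tokens[j]] for j in range(lo, hi) if j != i]
        ctx = [sum(col) / len(neigh) for col in zip(*neigh)] if neigh else [0.0] * len(base[tok])
        out.append([b + alpha * c for b, c in zip(base[tok], ctx)])
    return out


def mean_pool(vectors):
    """Component-wise average of equal-length vectors."""
    return [sum(col) / len(vectors) for col in zip(*vectors)]


def phrase_embedding(phrase, base, context=None):
    """Non-contextualized: encode the phrase alone. Contextualized: encode the
    whole context, then mean-pool the vectors covering the phrase span."""
    if context is None:
        return mean_pool(toy_encode(phrase, base))
    start = find_span(context, phrase)
    return mean_pool(toy_encode(context, base)[start:start + len(phrase)])


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


# Hypothetical 3-d word vectors and two contexts for the same phrase.
base = {
    "big": [1.0, 0.0, 0.0], "apple": [0.0, 1.0, 0.0],
    "new": [0.0, 0.0, 1.0], "york": [0.0, 0.0, 1.0],
    "visit": [0.0, 0.0, 1.0], "pie": [0.0, 1.0, 1.0],
    "bake": [0.0, 1.0, 0.0], "the": [0.1, 0.1, 0.1], "a": [0.1, 0.1, 0.1],
}
city = "visit the big apple new york".split()
food = "bake a big apple pie".split()
phrase = ["big", "apple"]

# Without context both occurrences share one vector; with context they diverge.
no_ctx = cosine(phrase_embedding(phrase, base), phrase_embedding(phrase, base))
with_ctx = cosine(phrase_embedding(phrase, base, city), phrase_embedding(phrase, base, food))
```

Under these assumptions, `no_ctx` is 1 by construction, while `with_ctx` falls below 1 because the surrounding words shift the pooled span vectors. A model whose contextualized embeddings ignore the context would behave like the non-contextualized case, which is the failure mode the PSD results point to.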