Three important criteria of existing convolutional neural networks (CNNs) are (1) test-set accuracy, (2) out-of-distribution accuracy, and (3) explainability. While these criteria have been studied independently, their relationship is unknown. For example, do CNNs with stronger out-of-distribution performance also have stronger explainability? Furthermore, most prior feature-importance studies evaluate methods on only 2–3 common vanilla ImageNet-trained CNNs, leaving it unknown how these methods generalize to CNNs of other architectures and training algorithms. Here, we perform the first large-scale evaluation of the relations among the three criteria, using 9 feature-importance methods and 12 ImageNet-trained CNNs spanning 3 training algorithms and 5 CNN architectures. We report several insights and recommendations for ML practitioners. First, adversarially robust CNNs obtain higher explainability scores under gradient-based attribution methods (but not under CAM-based or perturbation-based methods). Second, AdvProp models, despite being more accurate than both vanilla and robust models, are not superior in explainability. Third, among the 9 feature attribution methods tested, GradCAM and RISE are consistently the best. Fourth, Insertion and Deletion are biased towards vanilla and robust models, respectively, due to their strong correlation with the confidence score distributions of a CNN. Fifth, we did not find a single CNN that is the best on all three criteria, which interestingly suggests that CNNs become harder to interpret as they become more accurate.
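The fourth finding hinges on how Insertion and Deletion are computed: both are areas under a curve of the target-class probability, so a CNN's overall confidence level enters the score directly. Below is a minimal sketch under stated assumptions, not the evaluation code used in this work: `deletion_insertion` and the two toy `predict_prob` functions are hypothetical, pixels are changed in fixed-size chunks ranked by the AM, and a single constant (zero) baseline is used for both metrics, whereas Insertion is commonly started from a blurred copy of the image.

```python
import numpy as np
from typing import Callable, Optional, Tuple


def _auc(scores: np.ndarray) -> float:
    # Trapezoidal area under the curve, with the x-axis (fraction of pixels changed) in [0, 1].
    return float(np.sum((scores[1:] + scores[:-1]) / 2.0) / (len(scores) - 1))


def deletion_insertion(
    image: np.ndarray,
    attribution: np.ndarray,
    predict_prob: Callable[[np.ndarray], float],
    steps: int = 10,
    baseline: Optional[np.ndarray] = None,
) -> Tuple[float, float]:
    """Return (insertion_auc, deletion_auc) for one image and one attribution map.

    Hypothetical helper: `image` is (H, W) or (H, W, C), `attribution` is (H, W),
    and `predict_prob` returns the target-class probability of an image.
    """
    h, w = attribution.shape
    if baseline is None:
        baseline = np.zeros_like(image)  # simplification: same baseline for both metrics
    order = np.argsort(attribution.ravel())[::-1]  # most important pixels first
    per_step = int(np.ceil(order.size / steps))

    deleted, inserted = image.copy(), baseline.copy()
    del_scores, ins_scores = [predict_prob(deleted)], [predict_prob(inserted)]
    for i in range(steps):
        rows, cols = np.unravel_index(order[i * per_step:(i + 1) * per_step], (h, w))
        deleted[rows, cols] = baseline[rows, cols]  # Deletion: remove the top pixels
        inserted[rows, cols] = image[rows, cols]    # Insertion: reveal the top pixels
        del_scores.append(predict_prob(deleted))
        ins_scores.append(predict_prob(inserted))
    return _auc(np.array(ins_scores)), _auc(np.array(del_scores))


if __name__ == "__main__":
    img = np.ones((8, 8))
    am = np.arange(64, dtype=float).reshape(8, 8)
    low_conf = lambda x: float(x.mean())           # toy "model"
    high_conf = lambda x: float(x.mean()) ** 0.25  # same ranking, higher confidence
    print(deletion_insertion(img, am, low_conf, steps=8))  # → (0.5, 0.5)
    print(deletion_insertion(img, am, high_conf, steps=8))
```

Both toy models rank images identically (the second is a monotone transform of the first), yet with the same AM the more confident one scores higher on Insertion (better) and also higher on Deletion (worse), which mirrors the kind of confidence-driven bias described in the fourth finding.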

Acknowledgment: This work is supported by the National Science Foundation under Grant Nos. 1850117 and 2145767, and by donations from the NaphCare foundation.

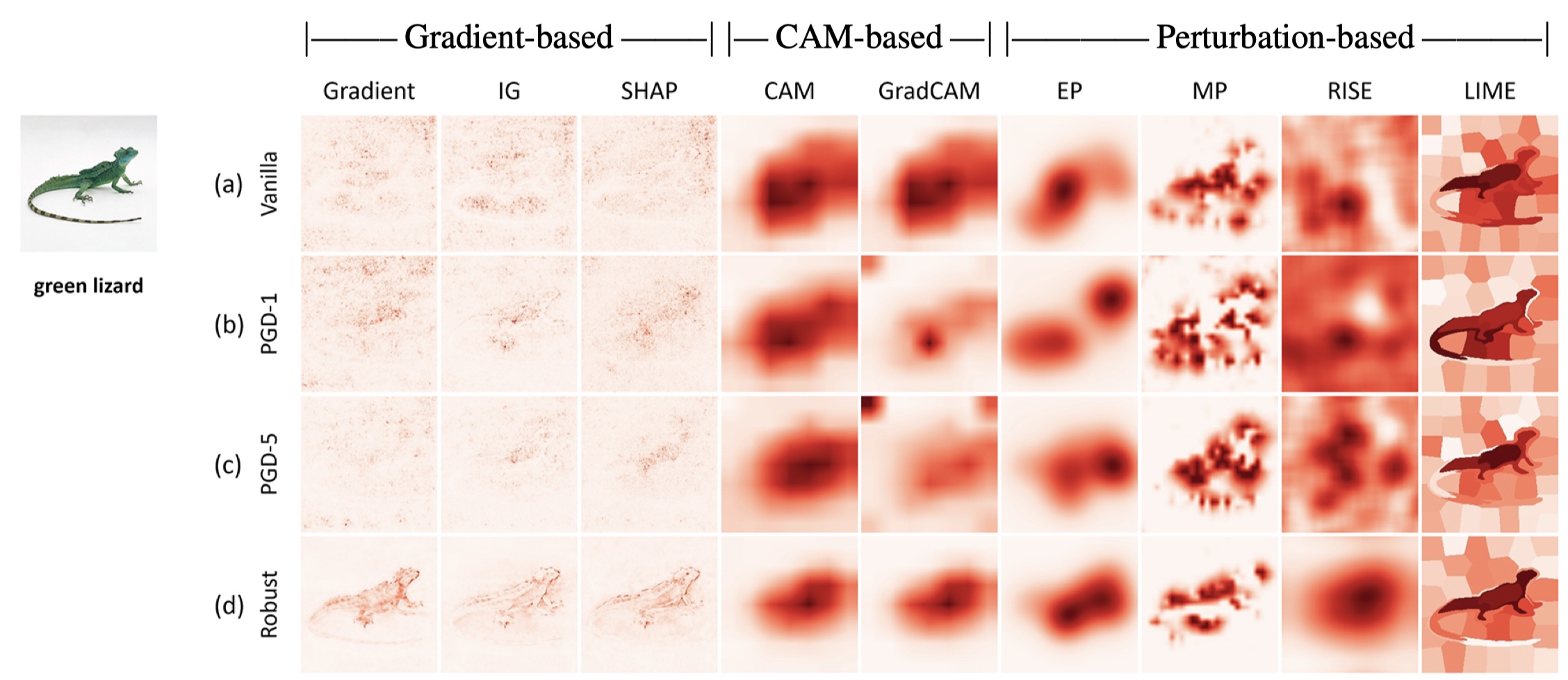

Figure 1: A comparison of attribution maps (AMs) generated by 9 AM methods for four different ResNet-50 models, given the same input image and the target label “green lizard”. From top down: (a) a vanilla ImageNet-trained ResNet-50; (b–c) the same architecture trained with AdvProp, where adversarial images are generated using PGD-1 and PGD-5 (i.e., 1 or 5 PGD attack steps per adversarial image); and (d) a robust model trained exclusively on adversarial data via the PGD framework. As CNNs are trained on more adversarial perturbations (from top down), the AMs produced by gradient-based methods tend to be less noisy and more interpretable.

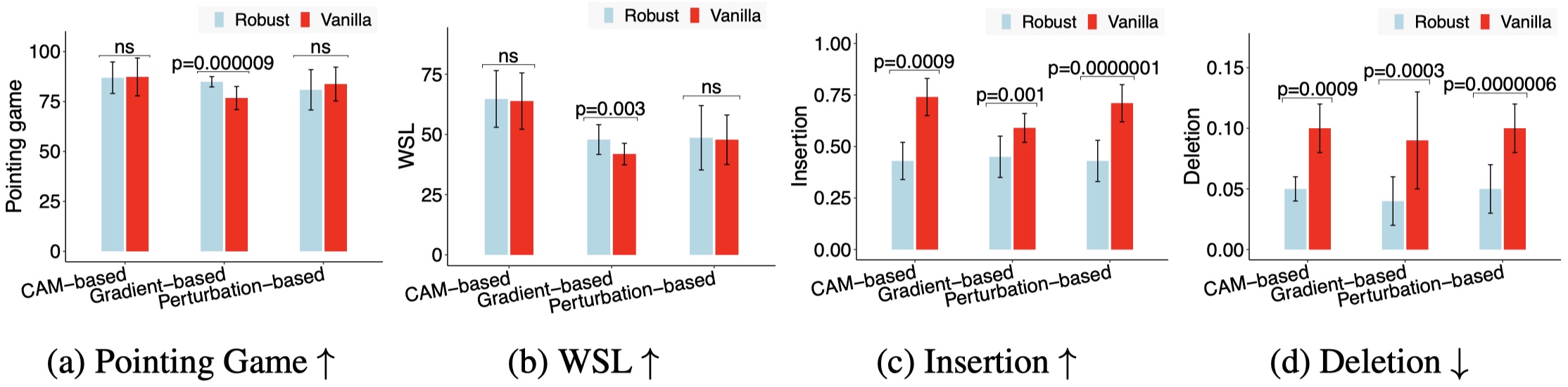

Figure 2: The AMs of robust models consistently score higher than those of vanilla models on the Pointing Game (a) and weakly-supervised localization (WSL) (b). Under Insertion (c), vanilla models always score better. In contrast, under Deletion (d) (↓ lower is better), robust models consistently outperform vanilla models for all three groups of AM methods.
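For readers unfamiliar with the two localization metrics in Fig. 2 (a–b), the sketch below shows one common way to score a single AM against a ground-truth bounding box. It is an illustration under assumptions, not the exact protocol used in this work: the helper names, the inclusive `(x0, y0, x1, y1)` box format, the threshold of 0.5 times the AM's maximum, drawing one box around all above-threshold pixels (rather than, e.g., around the largest connected component), and the IoU ≥ 0.5 criterion are all hypothetical choices, and the AM is assumed to be non-negative.

```python
import numpy as np
from typing import Tuple

Box = Tuple[int, int, int, int]  # (x0, y0, x1, y1), inclusive pixel coordinates


def pointing_game_hit(attribution: np.ndarray, box: Box) -> bool:
    # Pointing Game: a "hit" if the single most important pixel lies inside the box.
    y, x = np.unravel_index(np.argmax(attribution), attribution.shape)
    x0, y0, x1, y1 = box
    return bool(x0 <= x <= x1 and y0 <= y <= y1)


def box_from_attribution(attribution: np.ndarray, rel_threshold: float = 0.5) -> Box:
    # Keep pixels at or above a fraction of the AM's maximum and box them tightly.
    ys, xs = np.nonzero(attribution >= rel_threshold * attribution.max())
    return int(xs.min()), int(ys.min()), int(xs.max()), int(ys.max())


def iou(a: Box, b: Box) -> float:
    # Intersection-over-union of two inclusive pixel boxes.
    ix0, iy0 = max(a[0], b[0]), max(a[1], b[1])
    ix1, iy1 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0, ix1 - ix0 + 1) * max(0, iy1 - iy0 + 1)

    def area(r: Box) -> int:
        return (r[2] - r[0] + 1) * (r[3] - r[1] + 1)

    return inter / (area(a) + area(b) - inter)


def wsl_correct(attribution: np.ndarray, box: Box,
                rel_threshold: float = 0.5, iou_threshold: float = 0.5) -> bool:
    # WSL: the box derived from the AM must overlap the ground truth sufficiently.
    return iou(box_from_attribution(attribution, rel_threshold), box) >= iou_threshold


if __name__ == "__main__":
    am = np.zeros((10, 10))
    am[2:5, 3:7] = 1.0
    am[3, 4] = 2.0
    print(pointing_game_hit(am, (3, 2, 6, 4)), wsl_correct(am, (3, 2, 6, 4)))
```

A dataset-level score is then the fraction of images for which each function returns `True`.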

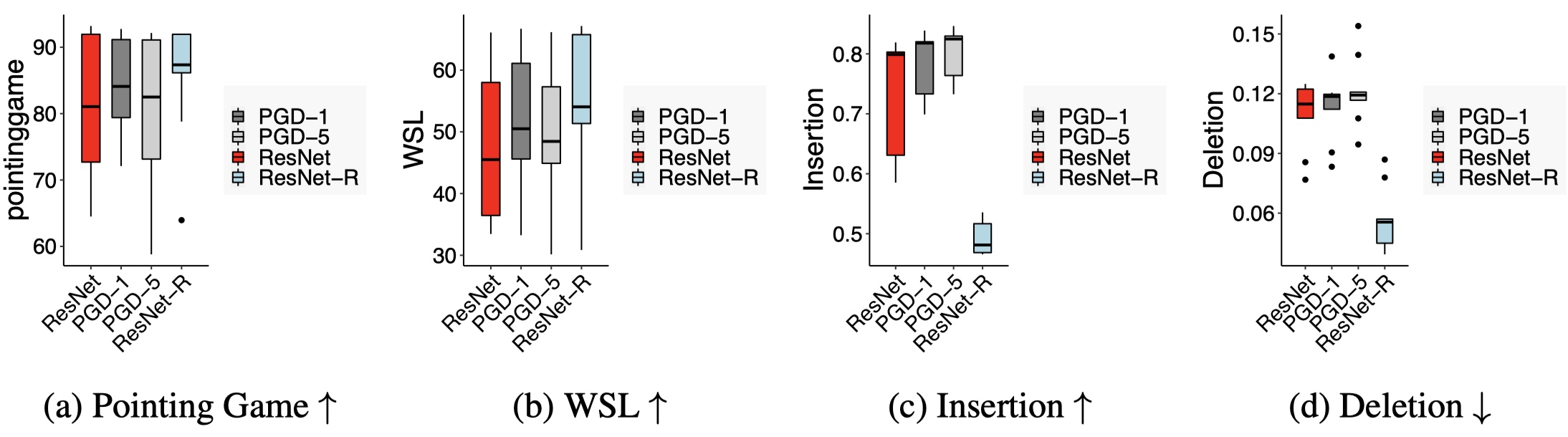

Figure 3: Averaged across 9 AM methods, AdvProp models (PGD-1 and PGD-5) outperform vanilla models (ResNet) but are worse than robust models (ResNet-R) on two object localization metrics (a & b). See Fig. 1 for qualitative results that support the localization ability of AdvProp models. Under Insertion, AdvProp models also score better than vanilla models. We find the same conclusions for ImageNet images.

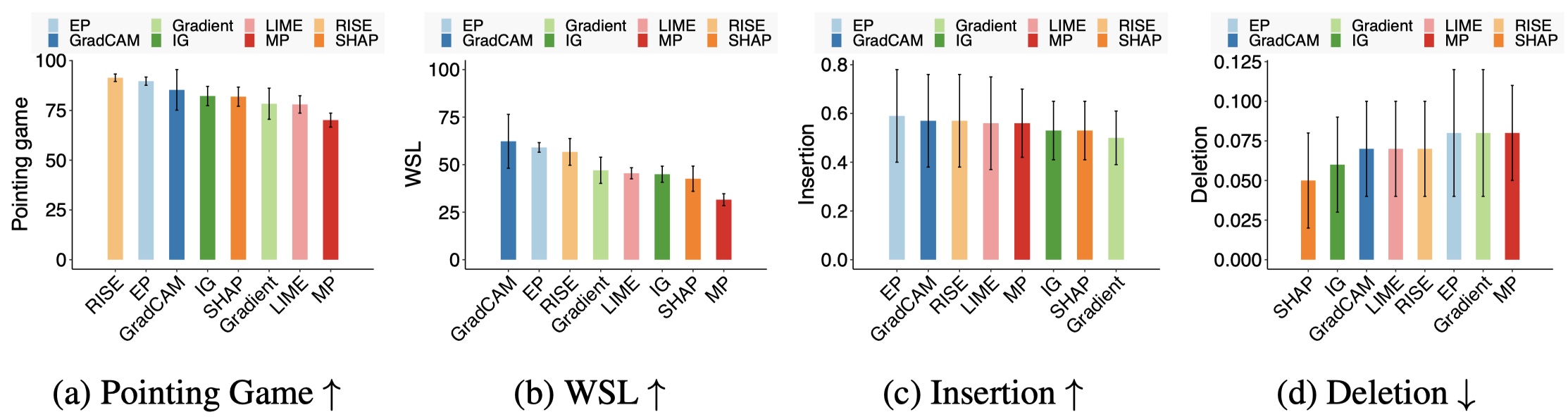

Figure 4: The average performance of 8 attribution methods across 10 CNNs shows that GradCAM and RISE are among the top 3 on the Pointing Game (a), WSL (b), Insertion (c), and Deletion (d) (↓ lower is better), while MP is the worst on average across all four metrics (see paper).

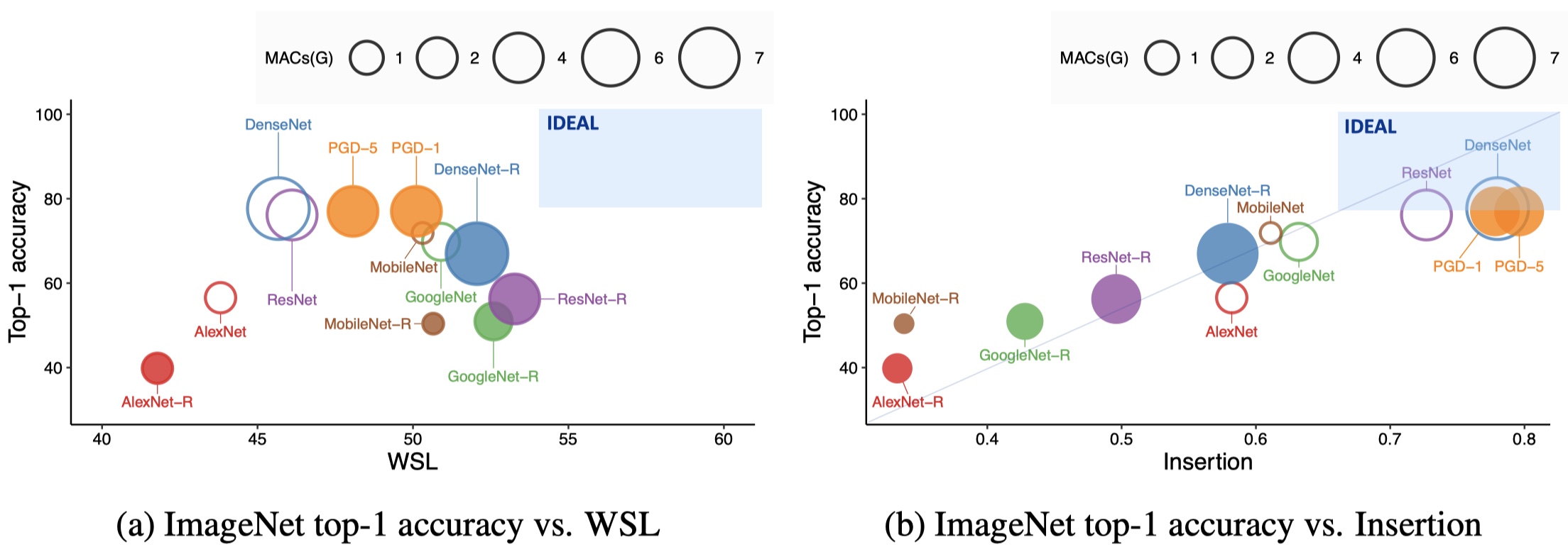

Figure 5: The average performance of each of the 12 CNNs across eight attribution methods under WSL and Insertion, compared against ImageNet top-1 accuracy and MACs. No network is consistently the best across all metrics and criteria. Fig. A2 shows the results for the Pointing Game and Deletion metrics. The IDEAL region marks where an ideal CNN, with both the highest classification accuracy and the highest explanation quality, would lie.
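The observation that no network is best across all criteria can be checked mechanically with Pareto dominance: a model sits on the front if no other model is at least as good on every criterion and strictly better on at least one. The sketch below assumes every criterion is oriented so that higher is better (a lower-is-better metric such as Deletion would be negated first); `dominates` and `pareto_front` are hypothetical helpers, and the scores in the usage lines are made-up toy values, not results from Fig. 5.

```python
from typing import Dict, List, Sequence


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    # `a` dominates `b` if it is no worse on every criterion and better on at least one.
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(scores: Dict[str, Sequence[float]]) -> List[str]:
    """Names of models that no other model dominates (higher is better on every axis)."""
    return [
        name
        for name, s in scores.items()
        if not any(dominates(t, s) for other, t in scores.items() if other != name)
    ]


if __name__ == "__main__":
    # Toy (accuracy, explanation score) pairs; purely illustrative, not measured values.
    toy = {"A": (0.80, 0.40), "B": (0.75, 0.55), "C": (0.70, 0.35)}
    print(pareto_front(toy))  # → ['A', 'B']
```

In the toy case, A is the most accurate and B explains best, so the front has two members; a CNN inside the IDEAL region would be expected to dominate all others and be the front's only member.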