Plug & Play Generative Networks: Conditional Iterative Generation of Images in Latent Space

Anh Nguyen, Jeff Clune, Yoshua Bengio, Alexey Dosovitskiy, Jason Yosinski

Links: pdf | code | project page

Generating high-resolution, photo-realistic images has been a long-standing goal in machine learning. Recently, Nguyen et al. (2016) showed one interesting way to synthesize novel images by performing gradient ascent in the latent space of a generator network to maximize the activations of one or multiple neurons in a separate classifier network. In this paper we extend this method by introducing an additional prior on the latent code, improving both sample quality and sample diversity, leading to a state-of-the-art generative model that produces high quality images at higher resolutions (227×227) than previous generative models, and does so for all 1000 ImageNet categories. In addition, we provide a unified probabilistic interpretation of related activation maximization methods and call the general class of models “Plug and Play Generative Networks”. PPGNs are composed of (1) a generator network G that is capable of drawing a wide range of image types and (2) a replaceable “condition” network C that tells the generator what to draw. We demonstrate the generation of images conditioned on a class (when C is an ImageNet or MIT Places classification network) and also conditioned on a caption (when C is an image captioning network). Our method also improves the state of the art of Multifaceted Feature Visualization, which generates the set of synthetic inputs that activate a neuron in order to better understand how deep neural networks operate. Finally, we show that our model performs reasonably well at the task of image inpainting. While image models are used in this paper, the approach is modality-agnostic and can be applied to many types of data.

Conference: CVPR 2017. Spotlight Oral presentation (8% acceptance rate) — see video.

Download: CVPR poster

Press coverage:

- Nature. Astronomers explore uses for AI-generated images

- Science. How AI detectives are cracking open the black box of deep learning

- The Verge. Artificial intelligence is going to make it easier than ever to fake images and video

4-min Spotlight oral presentation at CVPR 2017

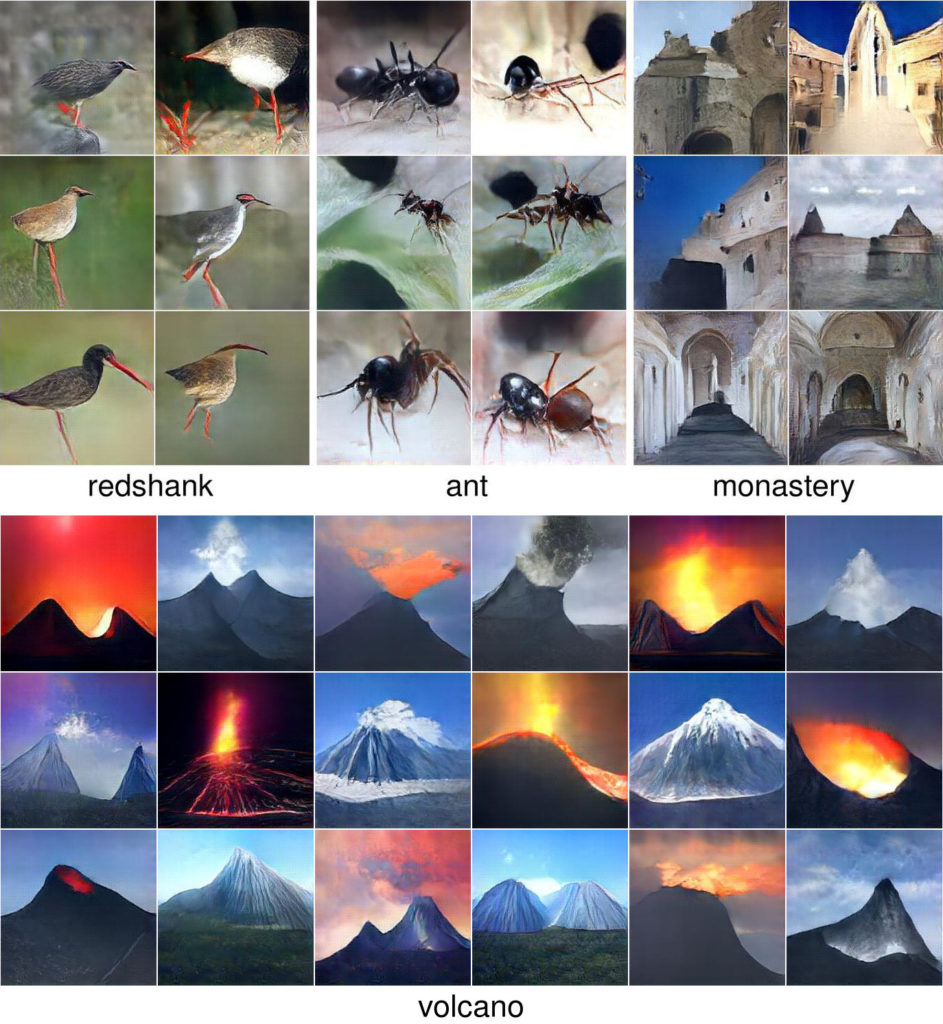

Figure 1: Images synthetically generated by Plug and Play Generative Networks at high-resolution (227×227) for four ImageNet classes. Not only are many images nearly photo-realistic, but samples within a class are diverse. An example t-SNE visualization of 400 samples generated for volcano class is here.

Videos of sampling chains (with one sample per frame; no samples filtered out) from PPGN model between 10 different classes and within single classes (Triumph Arch and Junco).

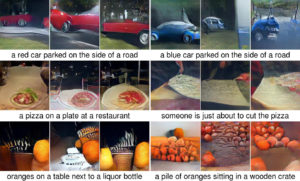

Figure 2: Images synthesized to match a user text description. A PPGN containing the image captioning model from LRCN model by Donahue et al. (2015) can generate reasonable images that differ based on user-provided captions (e.g. red car vs. blue car, oranges vs. a pile of oranges). For each caption, we show 3 images synthesized starting from random initializations.

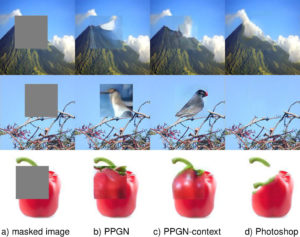

Figure 3: We perform class-conditional image sampling to fill in 100×100 missing pixels in a real 227×227 image. While PPGN only conditions on a specific class (top to bottom: volcano, junco, and bell pepper), PPGN-context also constrains the code to produce an image that matches the context region. PPGN-context (c) matches the pixels surrounding the masked region better than PPGN (b), and semantically fills in better than the Context-Aware Fill feature in Photoshop (d) in many cases. The result shows that the class-conditional PPGN does understand the semantics of images. More PPGN-context results are in the paper.

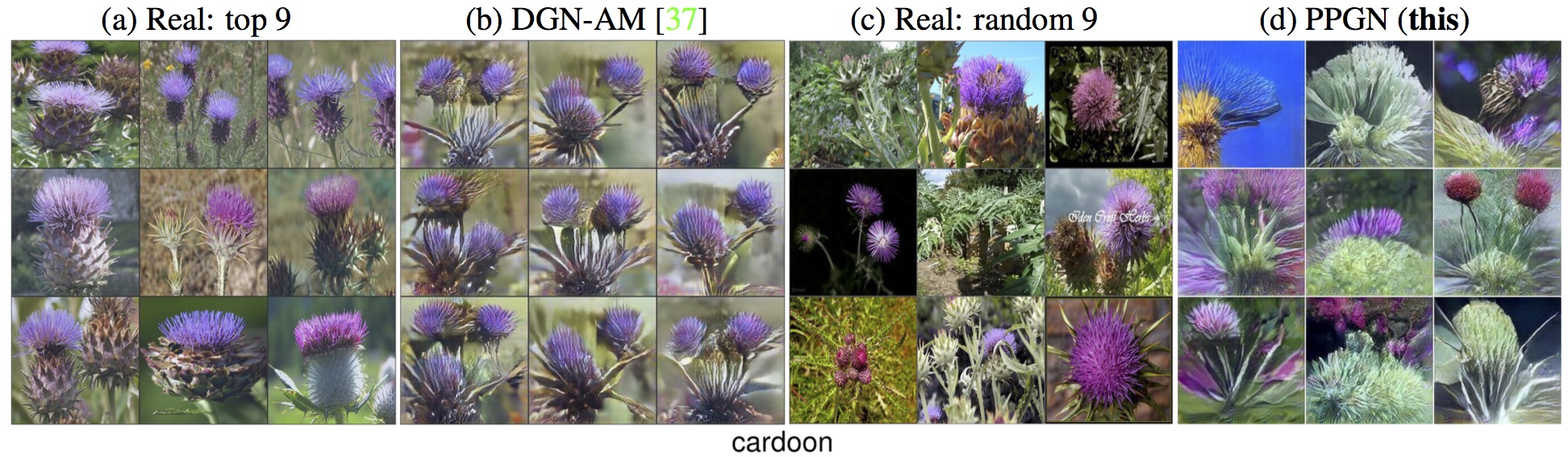

Figure 4: For the “cardoon” class neuron in a pre-trained ImageNet classifier, we show: a) the 9 real training set images that most highly activate that neuron; b) images synthesized by DGN-AM, which are of similar type and diversity to the real top-9 images; c) random real training set images in the cardoon class; and d) images synthesized by PPGN, which better represent the diversity of random images from the class. PPGNs produce larger image diversity than DGN-AM, which if viewed as a generative model often only captures only the highest activating mode of the data distribution.