VectorDefense: Vectorization as a Defense to Adversarial Examples

Vishaal M. Kabilan, Brandon Morris, Anh Nguyen

Training deep neural networks on images represented as grids of pixels has brought to light an interesting phenomenon known as adversarial examples. Inspired by how humans reconstruct abstract concepts, we attempt to codify the input bitmap image into a set of compact, interpretable elements to avoid being fooled by adversarial structures. We take a first step in this direction by experimenting with image vectorization as an input transformation that maps adversarial examples back onto the natural manifold of MNIST handwritten digits. On MNIST, our simple method is surprisingly effective at removing the minute, imperceptible adversarial changes and pulling the input image back to the true manifold.

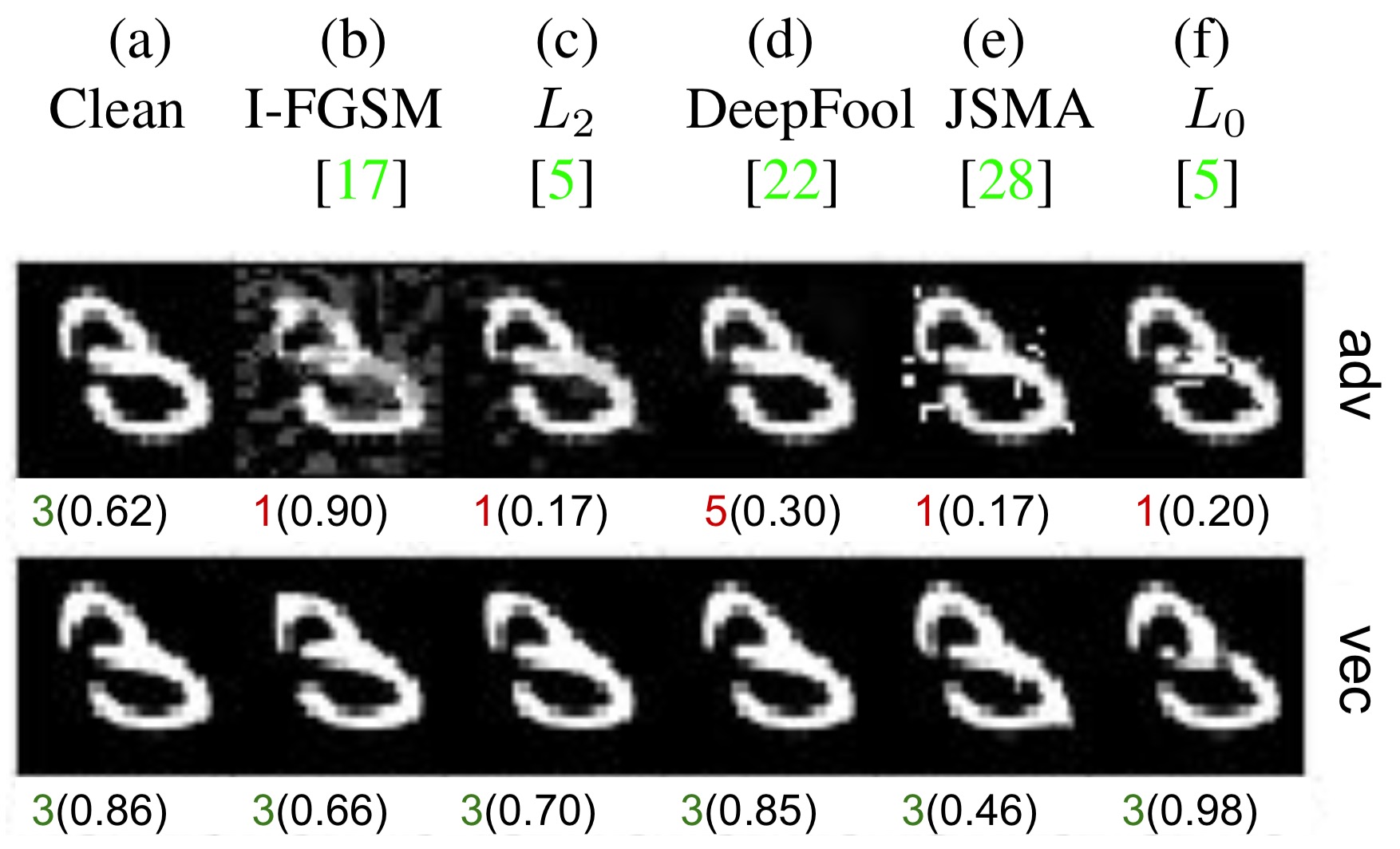

Figure 1: The top row shows the adversarial examples (b-f) crafted for a real test image, here “3” (a) via state-of-the-art crafting methods (top labels). The bottom row shows the results of vectorizing the respective images in the row above. Below each image is the predicted label and confidence score from the classifier. All the attacks are targeted to label “1” except for DeepFool (which is an untargeted attack). Vectorization substantially washes out the adversarial artifacts and pulls the images back to the correct labels. See the paper for more examples.

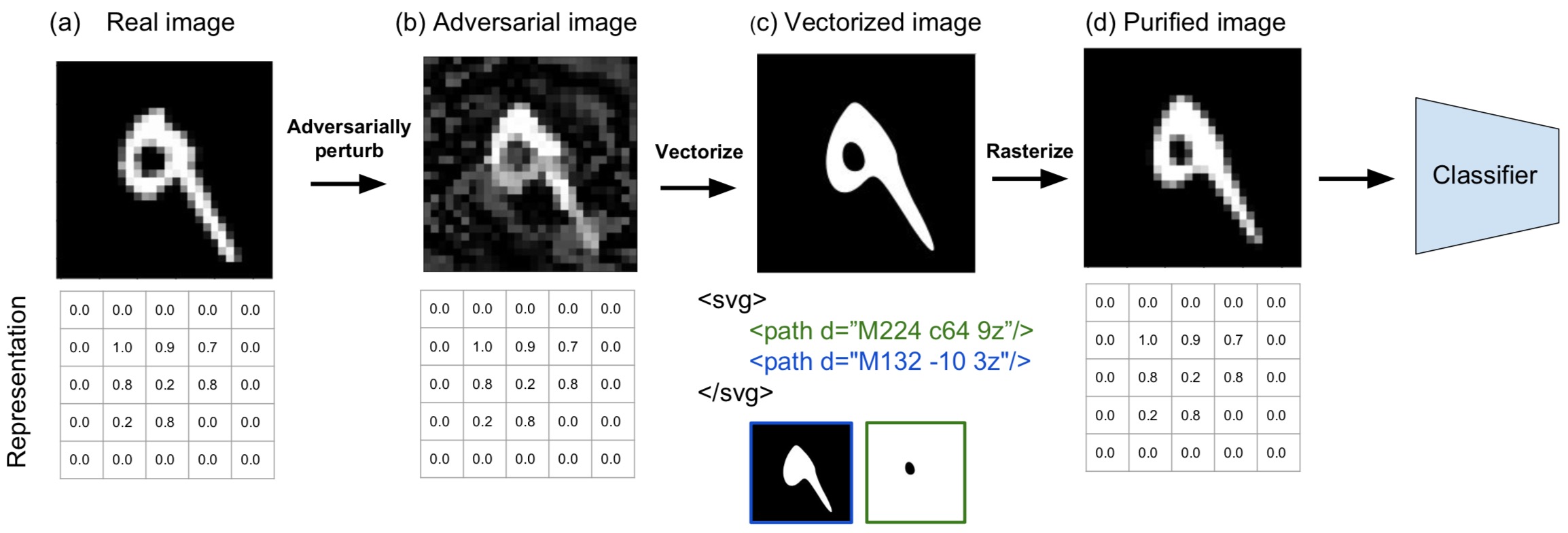

Figure 2: Given a real image in the bitmap space (a), an attacker crafts an adversarial example (b). Via vectorization (i.e. image tracing), we transform the input image (b) into a vector graphic (c) in SVG format, which is an XML tree with geometric primitives such as contours and ovals. The vector graphic is then rasterized back to bitmap (d) before being fed to a classifier for prediction. VectorDefense effectively washes out the adversarial artifacts and pulls the perturbed image (b) back closer to the natural manifold (d).
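The pipeline in Figure 2 (bitmap, then SVG, then bitmap, then classifier) can be illustrated with a minimal sketch. This is a toy stand-in, not the tracer used in the paper: it assumes a simple fixed threshold and turns each horizontal run of bright pixels into one SVG `<rect>`, whereas a real image tracer fits contours and curves. The names `vectorize`, `rasterize`, `vector_defense`, `classify`, and `threshold` are hypothetical and chosen only for illustration.

```python
import xml.etree.ElementTree as ET

SVG_NS = "http://www.w3.org/2000/svg"


def vectorize(bitmap, threshold=0.5):
    """Trace a grayscale bitmap (rows of floats in [0, 1]) into an SVG string.

    Toy tracer (an assumption, not the paper's method): every horizontal run
    of pixels at or above `threshold` becomes a single <rect> primitive.
    """
    height, width = len(bitmap), len(bitmap[0])
    svg = ET.Element("svg", xmlns=SVG_NS, width=str(width), height=str(height))
    for y, row in enumerate(bitmap):
        x = 0
        while x < width:
            if row[x] >= threshold:
                start = x
                while x < width and row[x] >= threshold:
                    x += 1
                ET.SubElement(svg, "rect", x=str(start), y=str(y),
                              width=str(x - start), height="1")
            else:
                x += 1
    return ET.tostring(svg, encoding="unicode")


def rasterize(svg_text):
    """Render the <rect> primitives of the toy SVG back onto a pixel grid."""
    root = ET.fromstring(svg_text)
    width, height = int(root.get("width")), int(root.get("height"))
    bitmap = [[0.0] * width for _ in range(height)]
    for rect in root.iter(f"{{{SVG_NS}}}rect"):
        x0, y0 = int(rect.get("x")), int(rect.get("y"))
        w, h = int(rect.get("width")), int(rect.get("height"))
        for y in range(y0, y0 + h):
            for x in range(x0, x0 + w):
                bitmap[y][x] = 1.0
    return bitmap


def vector_defense(bitmap, classify, threshold=0.5):
    """Vectorize, rasterize, then classify; `classify` is any callable."""
    purified = rasterize(vectorize(bitmap, threshold))
    return classify(purified)
```

In this sketch, low-amplitude perturbations that do not push a pixel across the threshold simply vanish in the round trip, which is a crude analogue of how vectorization washes out adversarial artifacts. A perturbation large enough to cross the threshold would survive, so the toy says nothing about the robustness of the actual method.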

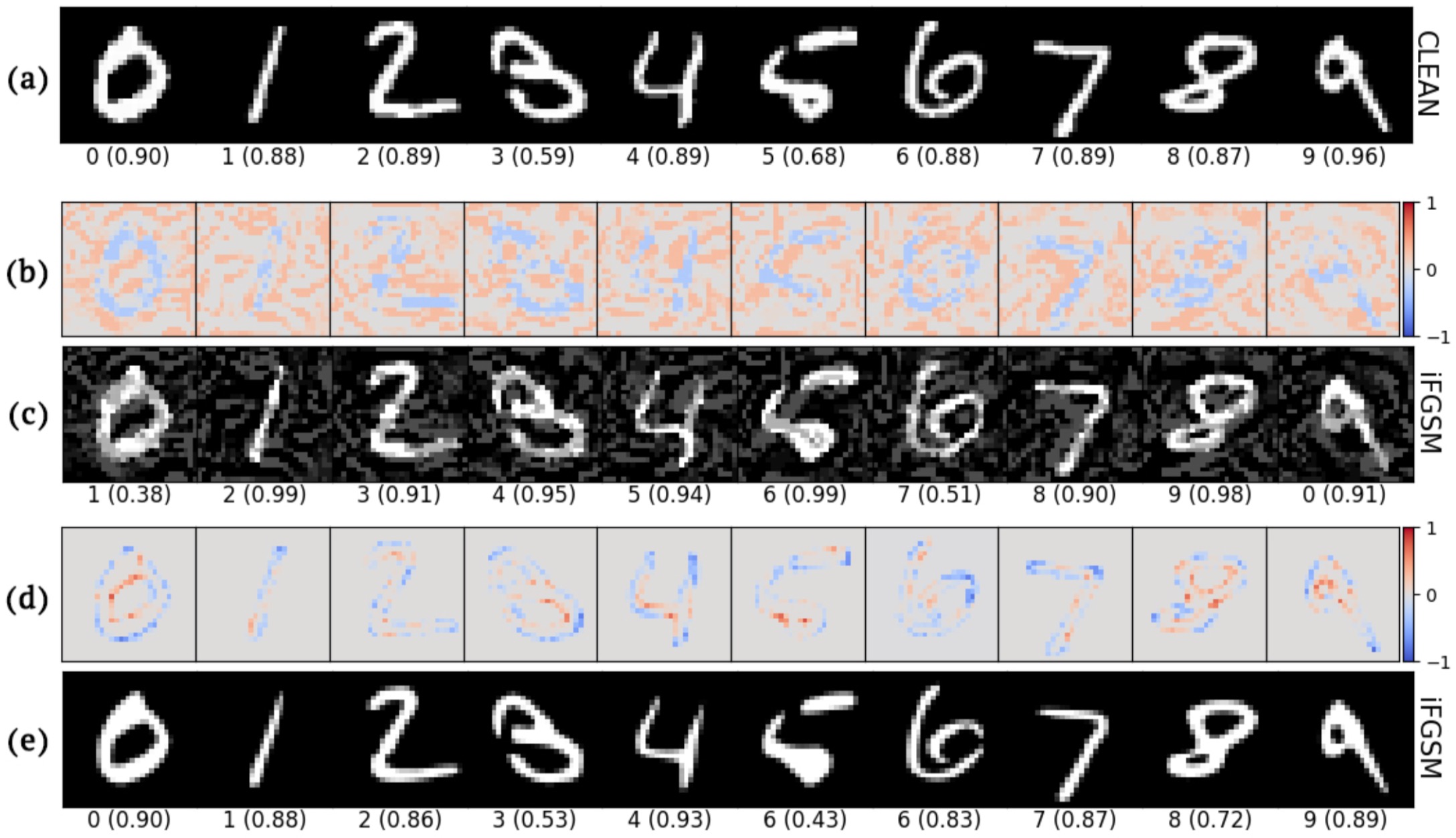

Figure 3: Given clean MNIST images (a) that are correctly classified with a label $l$ (here, 0–9 respectively), we add perturbations (b) via I-FGSM [17] to produce adversarial examples (c) that are misclassified as $l + 1$ (i.e. digit 0 is misclassified as 1, etc.). VectorDefense effectively removes the adversarial perturbations, yielding final results (e) that are correctly classified. Row (d) shows the difference between the original (a) and purified (e) examples. Below each image is its predicted label and confidence score.
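For reference, the targeted I-FGSM attack used in Figure 3 is commonly written as the iterative update below. The symbols are assumptions introduced here, not notation from this page: $x$ is the clean image, $y_{\text{target}}$ is the target label (here $l + 1$), $J$ is the classifier's loss, $\alpha$ is the step size, and $\epsilon$ bounds the total perturbation.

$$
x^{\text{adv}}_{0} = x, \qquad
x^{\text{adv}}_{t+1} = \operatorname{Clip}_{x,\epsilon}\!\left\{ x^{\text{adv}}_{t} - \alpha \, \operatorname{sign}\!\left( \nabla_{x} J\!\left(x^{\text{adv}}_{t},\, y_{\text{target}}\right) \right) \right\}
$$

Each step moves the image in the direction that lowers the loss for the target label, and $\operatorname{Clip}_{x,\epsilon}$ keeps every pixel within $\epsilon$ of the original and inside the valid intensity range. The exact $\alpha$, $\epsilon$, and iteration count used for the figure are not stated here.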