Despite excellent performance on stationary test sets, deep neural networks (DNNs) can fail to generalize to out-of-distribution (OoD) inputs, including natural, non-adversarial ones, which are common in real-world settings. In this paper, we present a framework for discovering DNN failures that harnesses 3D renderers and 3D models. That is, we estimate the parameters of a 3D renderer that cause a target DNN to misbehave in response to the rendered image. Using our framework and a self-assembled dataset of 3D objects, we investigate the vulnerability of DNNs to OoD poses of well-known objects in ImageNet. For objects that are readily recognized by DNNs in their canonical poses, DNNs incorrectly classify 97% of their poses. In addition, DNNs are highly sensitive to slight pose perturbations (e.g., 8 degrees of rotation). Importantly, adversarial poses transfer across models and datasets. We find that 99.9% and 99.4% of the poses misclassified by Inception-v3 also transfer to the AlexNet and ResNet-50 image classifiers trained on the same ImageNet dataset, respectively, and 75.5% transfer to the YOLO-v3 object detector trained on MS COCO.

Conference: CVPR 2019 (acceptance rate: 25.2%).

Acknowledgment: This material is based upon work supported by the National Science Foundation under Grant No. 1850117 and a donation from Adobe Inc.

Press coverage:

- Nature. Why deep-learning AIs are so easy to fool

- ZDNet. Google’s image recognition AI fooled by new tricks

- Nautilus. Why Robot Brains Need Symbols

- Gizmodo. Google’s ‘Inception’ Neural Network Tricked By Images Resembling Bad Video Games

- New Scientist. The best image-recognition AIs are fooled by slightly rotated images (pdf)

- Gary Marcus. The deepest problem with deep learning

- Communications of the ACM. March 2019 news.

- Manifold.ai. We need to build interactive computer vision systems

- Medium. AI is about to get bigger, better, and more boring

- Adobe. Neural Networks Easily Fooled by Common Objects Seen from New Angles

- Gizmodo. Thousands of Reasons That We Shouldn’t Trust a Neural Network to Analyze Images

- Binary District Journal. AI Applications and “Black Boxes”

- Facebook AI blog. Building AI that can understand variation in the world around us

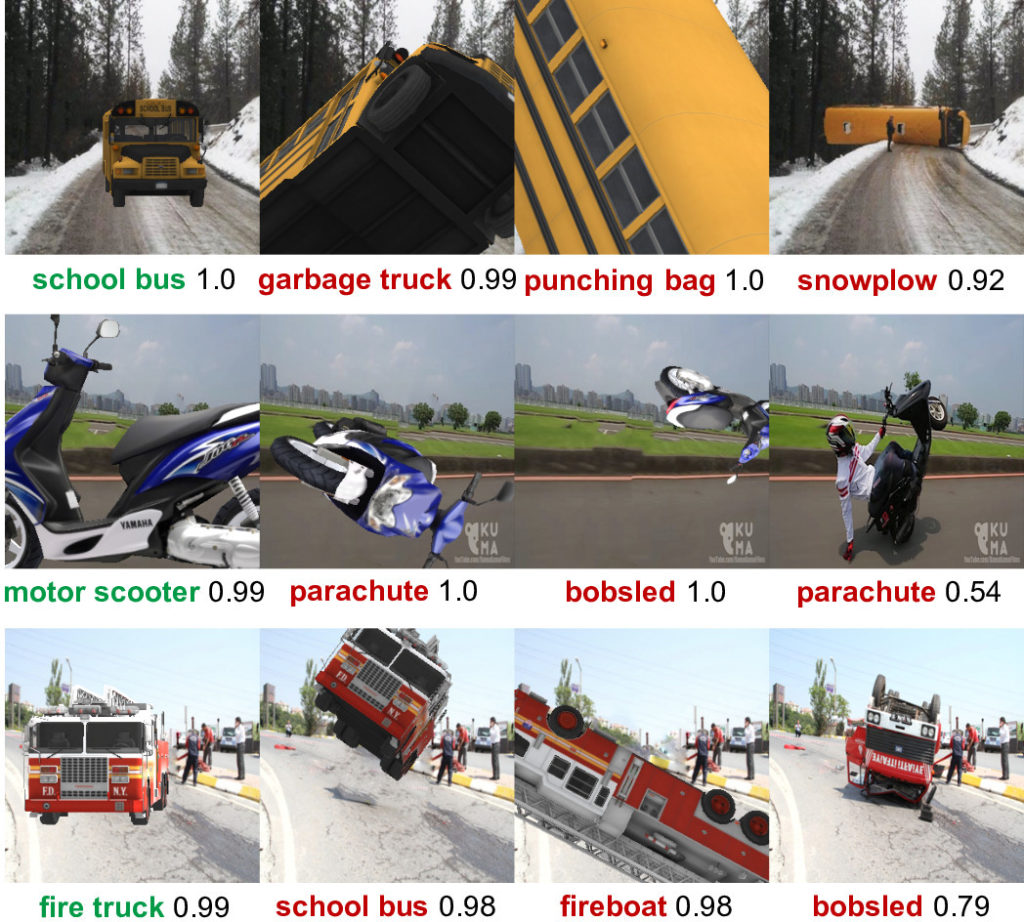

Figure 1: The Google Inception-v3 classifier correctly labels the canonical poses of objects (a), but fails to recognize out-of-distribution images of objects in unusual poses (b–d), including real photographs retrieved from the Internet (d). The left 3×3 images (a–c) are found by our framework and rendered via a 3D renderer. Below each image are its top-1 predicted label and confidence score.

Figure 2: 30 random adversarial examples misclassified by Inception-v3 with high confidence (p ≥ 0.9), generated from 3 objects: ambulance, taxi, and cell phone.

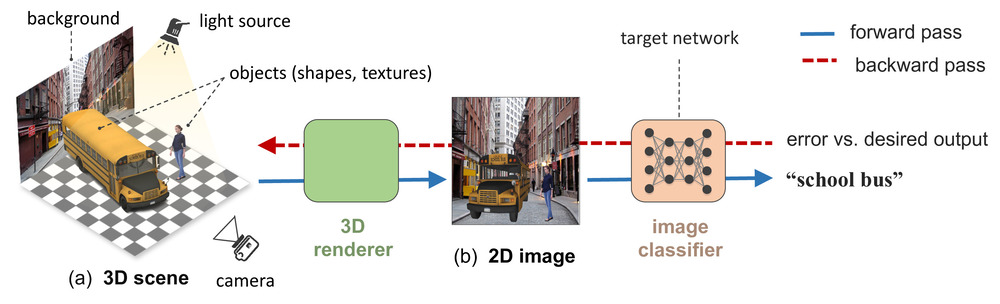

Figure 3: To test a target DNN, we build a 3D scene (a) that consists of 3D objects (here, a school bus and a pedestrian), lighting, a background scene, and camera parameters. Our 3D renderer renders the scene into a 2D image, which the image classifier labels “school bus”. We can estimate the pose changes of the school bus that cause the classifier to misclassify by (1) approximating gradients via finite differences; or (2) backpropagating (dashed line) through a differentiable renderer.
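Option (1) in the caption above can be illustrated with a minimal sketch. It is not the released implementation: `toy_loss` is a hypothetical stand-in for the full "render the scene, run the classifier, compute a loss" pipeline, and the three pose parameters (yaw, pitch, roll) and the step size are assumptions chosen only for illustration.

```python
import math


def central_difference_gradient(loss_fn, params, step=1e-3):
    """Estimate d(loss)/d(param_i) with central finite differences.

    loss_fn is treated as a black box, so this also works when the
    renderer is not differentiable.
    """
    grad = []
    for i in range(len(params)):
        plus = list(params)
        minus = list(params)
        plus[i] += step
        minus[i] -= step
        grad.append((loss_fn(plus) - loss_fn(minus)) / (2.0 * step))
    return grad


def toy_loss(pose):
    # Hypothetical smooth function standing in for render + classify + loss.
    yaw, pitch, roll = pose
    return math.sin(yaw) + pitch ** 2 + 0.5 * roll


# Gradient of the toy loss at an assumed pose (yaw=0, pitch=1, roll=2).
grad = central_difference_gradient(toy_loss, [0.0, 1.0, 2.0])
```

Each gradient estimate costs two loss evaluations per pose parameter, which is why a differentiable renderer (option 2) can be attractive when there are many parameters.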

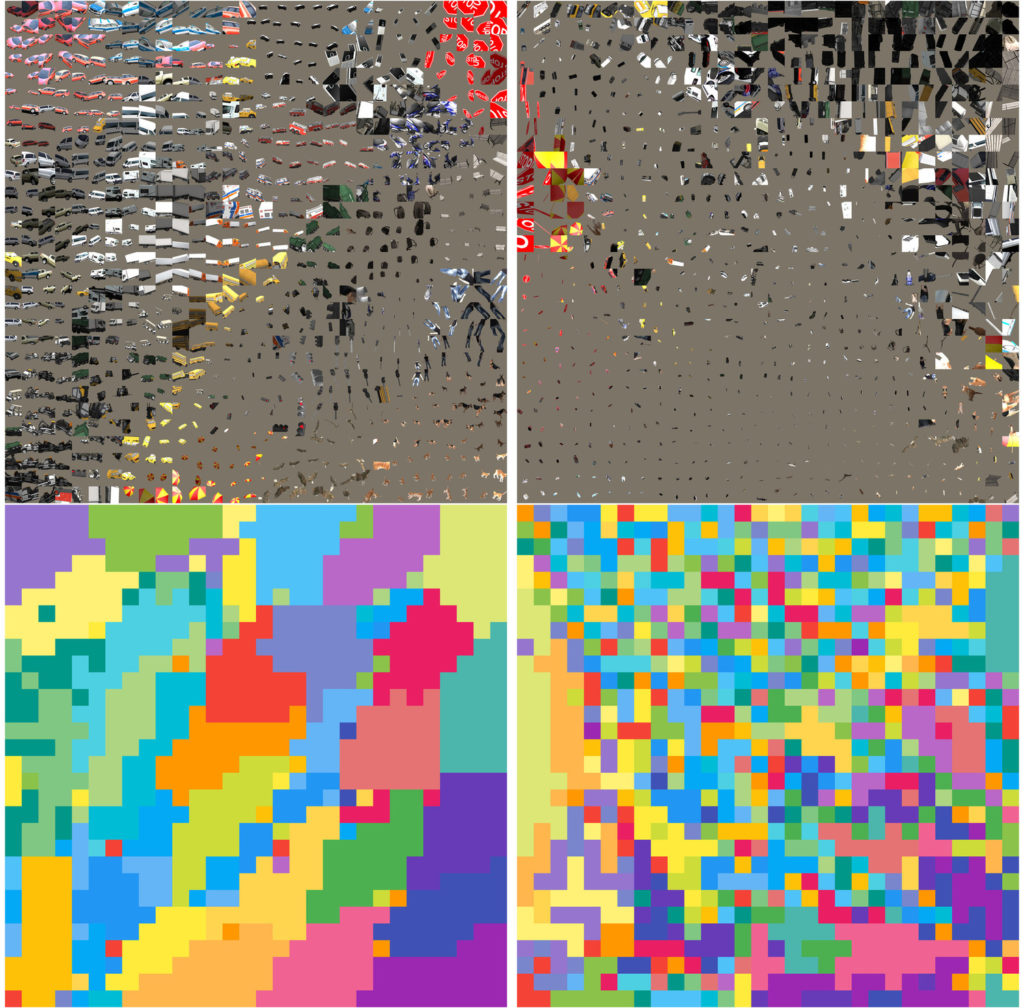

Figure 4: Comparison of the t-SNE embeddings of correctly classified vs misclassified poses in the AlexNet fc7 feature space. Correctly classified poses are similar while incorrect ones are diverse across different objects and renderer parameters.

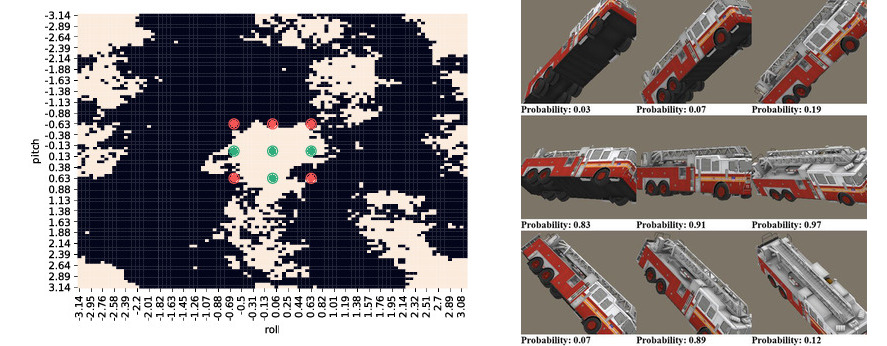

Figure 5: Inception-v3’s ability to correctly classify images is highly localized in the rotation and translation parameter space.

LEFT: The classification landscape for a fire truck object when pitch and roll are varied while all other parameters are held constant (white: correct classifications; black: misclassifications).

Green and red circles indicate correct and incorrect classifications, respectively, corresponding to the fire truck object poses found in the RIGHT panel.
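A landscape like the one in the LEFT panel can be produced by sweeping two pose parameters over a grid and recording whether each rendered pose is classified correctly. The sketch below only shows the sweep itself: `toy_is_correct` is a hypothetical stand-in for rendering the object and querying the classifier, and its 20-degree tolerance and the 10-degree grid step are assumptions, not values from the paper.

```python
def toy_is_correct(pitch_deg, roll_deg, tolerance_deg=20.0):
    # Hypothetical stand-in for "render at this pose, then check the
    # classifier's top-1 label". Correct only near the canonical pose.
    return abs(pitch_deg) <= tolerance_deg and abs(roll_deg) <= tolerance_deg


def sweep_landscape(is_correct, step_deg=10):
    """Evaluate is_correct on a pitch x roll grid covering [-180, 180)."""
    angles = list(range(-180, 180, step_deg))
    grid = [[is_correct(pitch, roll) for roll in angles] for pitch in angles]
    return angles, grid


angles, grid = sweep_landscape(toy_is_correct)

# Fraction of grid cells classified correctly (the "white" area).
num_correct = sum(cell for row in grid for cell in row)
correct_fraction = num_correct / (len(angles) ** 2)
```

Plotting `grid` as a binary image, with pitch on one axis and roll on the other, gives the white-and-black map described in the caption.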

Figure 6: We release a simple GUI tool that lets users load a 3D object and a background image, transform the object and the lighting, and watch the DNN's responses.

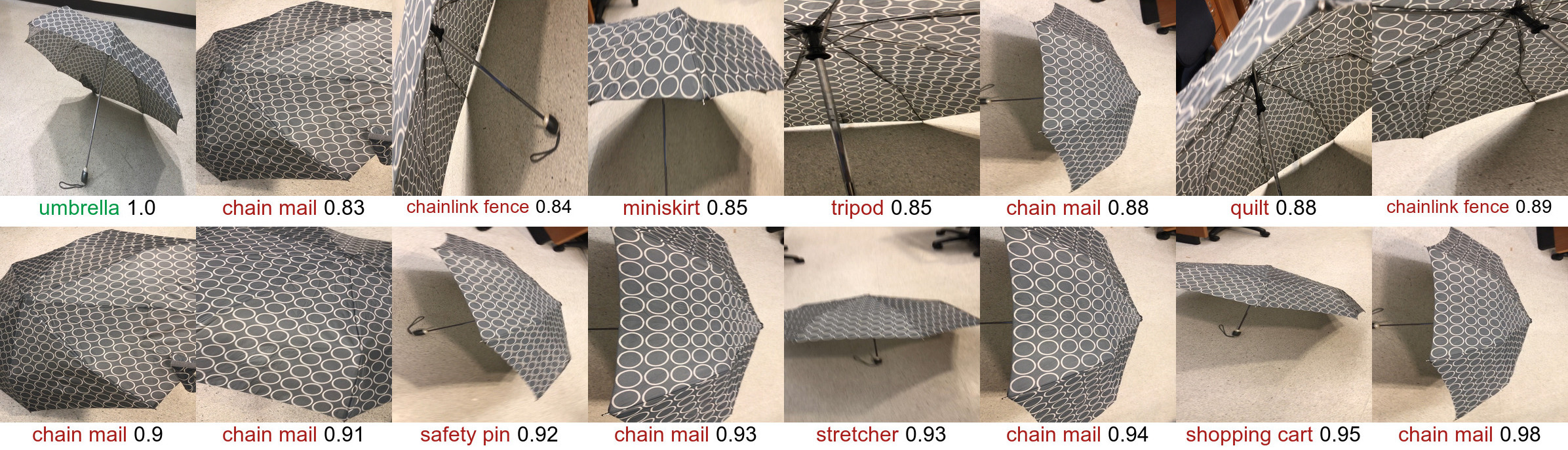

Figure 7: Real-world, high-confidence adversarial poses can be found by simply taking photos from strange angles of a familiar object, here, an umbrella. While Inception-v3 correctly predicts the object in canonical poses (the top-left image in each panel), it misclassifies the same object in unusual poses. Below each image are its top-1 predicted label and confidence score.

Figure 8: Real-world, high-confidence adversarial poses can be found by simply taking photos from strange angles of a familiar object, here, a stop sign.