Out of Order: How important is the sequential order of words in a sentence in Natural Language Understanding tasks?

Thang M. Pham, Trung Bui, Long Mai, Anh Nguyen

Links: pdf | code | project page

Do state-of-the-art natural language understanding models care about word order – one of the most important characteristics of a sequence? Not always! We found that 75% to 90% of the correct predictions of BERT-based classifiers, trained on many GLUE tasks, remain unchanged after input words are randomly shuffled. Although BERT embeddings are famously contextual, the contribution of each individual word to downstream tasks is almost unchanged even after the word’s context is shuffled. BERT-based models are able to exploit superficial cues (e.g. the sentiment of keywords in sentiment analysis, or the word-wise similarity between sequence-pair inputs in natural language inference) to make correct decisions when tokens are arranged in random orders. Encouraging classifiers to capture word order information improves performance on most GLUE tasks, SQuAD 2.0, and out-of-sample data. Our work suggests that many GLUE tasks do not challenge machines to understand the meaning of a sentence.
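
As a rough illustration of the measurement described above, the sketch below computes the fraction of originally correct predictions that stay correct after the input words are shuffled. Everything in it is an assumption made for illustration: `toy_classifier` is a hypothetical keyword counter standing in for a BERT-based model, and the keyword sets and examples are invented. It is not the authors' evaluation code.

```python
import random

# Hypothetical keyword lists; a real study would use a trained classifier.
POSITIVE = {"thrilling", "great", "wonderful"}
NEGATIVE = {"boring", "awful", "dull"}


def toy_classifier(sentence: str) -> str:
    """Order-blind stand-in model: counts sentiment keywords."""
    words = sentence.lower().split()
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    return "positive" if score >= 0 else "negative"


def shuffle_words(sentence: str, rng: random.Random) -> str:
    """Return the sentence with its whitespace-separated words in random order."""
    words = sentence.split()
    rng.shuffle(words)
    return " ".join(words)


def fraction_unchanged(examples, classify, seed=0):
    """Among correctly classified examples, the share still correct after shuffling."""
    rng = random.Random(seed)
    correct = [(s, y) for s, y in examples if classify(s) == y]
    if not correct:
        return 0.0
    kept = sum(classify(shuffle_words(s, rng)) == y for s, y in correct)
    return kept / len(correct)


examples = [
    ("a thrilling and wonderful ride", "positive"),
    ("a dull and boring film", "negative"),
    ("not great at all", "negative"),  # the toy model gets this one wrong
]
print(fraction_unchanged(examples, toy_classifier))  # prints 1.0
```

Because the toy model ignores word order entirely, every correct prediction survives shuffling. For a real classifier, the same ratio indicates how much of its accuracy depends on order.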

Acknowledgment: This work is supported by the National Science Foundation under Grant No. 1850117, and the NaphCare Charitable Foundation.

Conference: Findings of ACL at ACL 2021 (acceptance rate: 36.2% of 3350 submissions)

Press coverage:

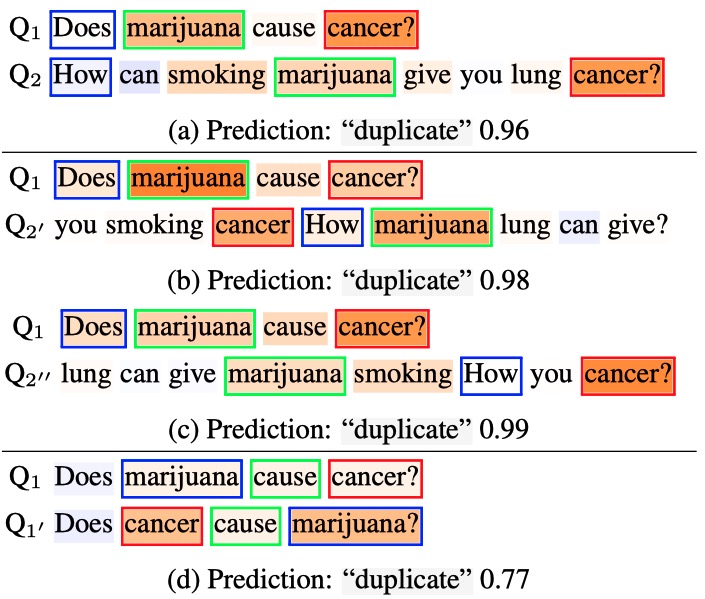

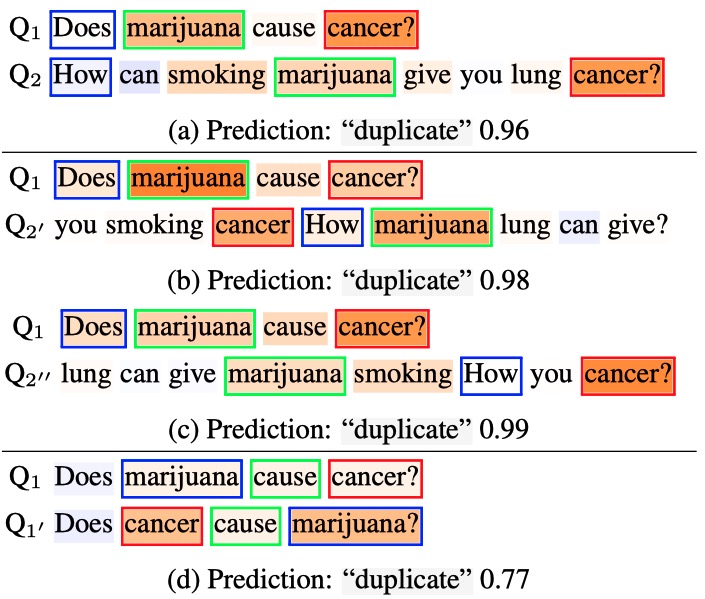

Figure 1: A RoBERTa-based model that achieves 91.12% accuracy on QQP correctly labels a pair of Quora questions “duplicate” (a). Interestingly, the predictions remain unchanged when all words in question Q$_2$ are randomly shuffled (b–c). QQP models also often incorrectly label a real sentence and its shuffled version as “duplicate” (d). We found evidence that GLUE models rely heavily on individual words to make decisions, e.g. here, “marijuana” and “cancer” (more important words are highlighted by LIME). Also, there exist self-attention matrices tasked explicitly with extracting word correspondence between two input sentences regardless of the order of those words. Here, the top-3 pairs of words assigned the highest self-attention weights at (layer 0, head 7) are inside red, green, and blue rectangles, respectively.

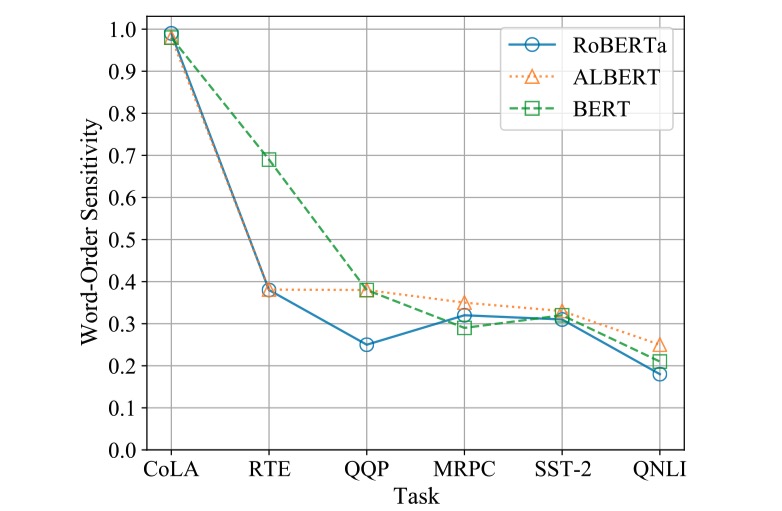

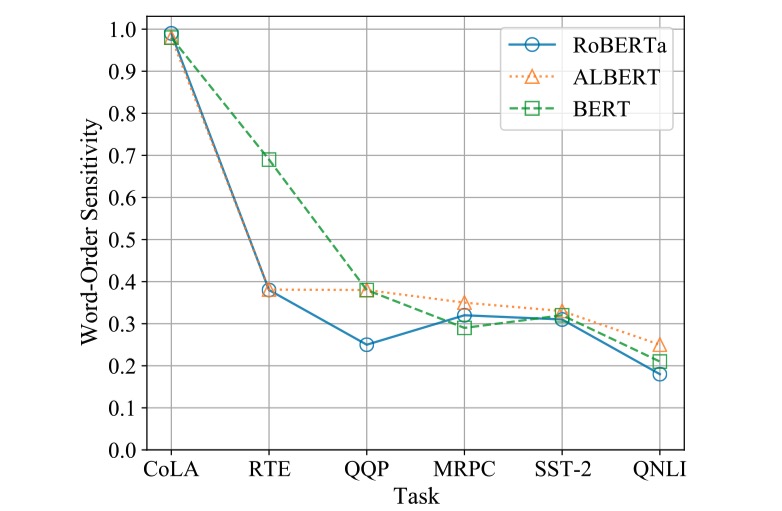

Figure 2: Across three BERT variants (RoBERTa, ALBERT, and BERT), CoLA models are almost always sensitive to word order (average WOS score of 0.99) and are at least 2 times more sensitive to 1-gram shuffling than models for the other tasks. The trends remain consistent for the 2-gram and 3-gram experiments.
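
The n-gram shuffling mentioned in this caption can be sketched as follows: split the sentence into consecutive chunks of n words and permute the chunks, so that word order inside each chunk is preserved. This is a minimal sketch under stated assumptions, not the authors' code: the function name `shuffle_ngrams`, the whitespace tokenization, and the retry loop that avoids returning the original order are all choices made here for illustration.

```python
import random


def shuffle_ngrams(sentence: str, n: int, rng: random.Random, max_tries: int = 10) -> str:
    """Shuffle consecutive n-word chunks; n=1 shuffles individual words."""
    words = sentence.split()
    original = " ".join(words)
    # Consecutive, non-overlapping chunks; the last one may be shorter than n.
    chunks = [words[i:i + n] for i in range(0, len(words), n)]
    if len(chunks) < 2:
        return original  # nothing to permute
    for _ in range(max_tries):
        shuffled = chunks[:]
        rng.shuffle(shuffled)
        candidate = " ".join(w for chunk in shuffled for w in chunk)
        if candidate != original:  # assumption: reject the identity permutation
            return candidate
    return original


rng = random.Random(0)
sentence = "does marijuana cause cancer in heavy smokers today"
for n in (1, 2, 3):
    print(n, shuffle_ngrams(sentence, n, rng))
```

With larger n, more of the local word order survives, which is why the 2-gram and 3-gram settings are milder perturbations than 1-gram shuffling.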

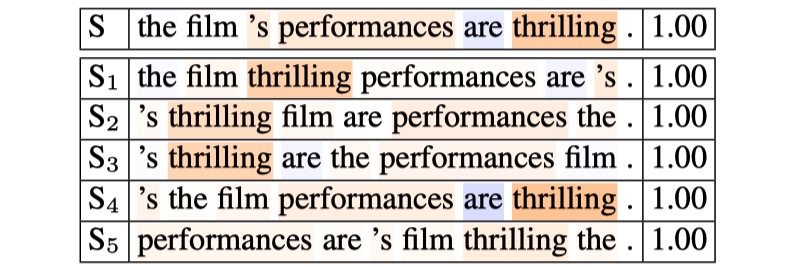

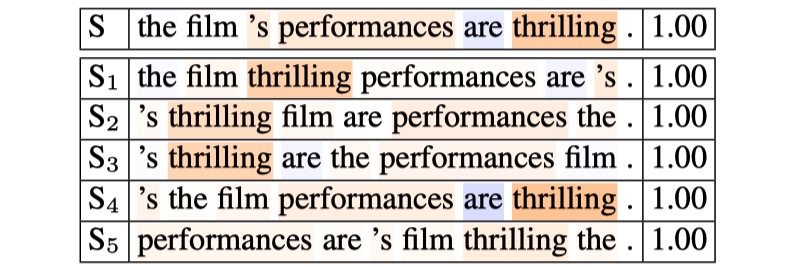

Figure 3: An original SST-2 dev-set example (S) and its five shuffled versions (S$_1$ to S$_5$) were all correctly labeled “positive” by a state-of-the-art RoBERTa-based classifier with near-certain confidence scores (right column). We found that ~60% of the sentence-level SST-2 labels can be predicted correctly using the polarity of a single most-important word (e.g. “thrilling” correlates with a positive movie review).
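
The single-word heuristic in this caption can be illustrated with a short sketch: pick the word with the largest importance score and predict the sentence label from that word's polarity alone. The importance scores and the polarity lexicon below are hypothetical values invented for the example; how the scores and polarities are obtained in practice is outside this sketch.

```python
def predict_from_top_word(words, importance, polarity):
    """Predict a sentence label from the polarity of its most important word.

    words:      list of tokens
    importance: one score per token (hypothetical attribution values)
    polarity:   dict mapping a word to a signed polarity (hypothetical lexicon)
    """
    top = max(range(len(words)), key=lambda i: abs(importance[i]))
    label = "positive" if polarity.get(words[top], 0.0) >= 0 else "negative"
    return words[top], label


words = "a thrilling ride despite a slow start".split()
importance = [0.01, 0.62, 0.10, 0.05, 0.01, 0.20, 0.04]  # invented scores
polarity = {"thrilling": 1.0, "slow": -1.0}               # invented lexicon

print(predict_from_top_word(words, importance, polarity))  # ('thrilling', 'positive')
```

The prediction never looks at word positions, so it is identical for every shuffled version of the sentence as long as each word keeps its score.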

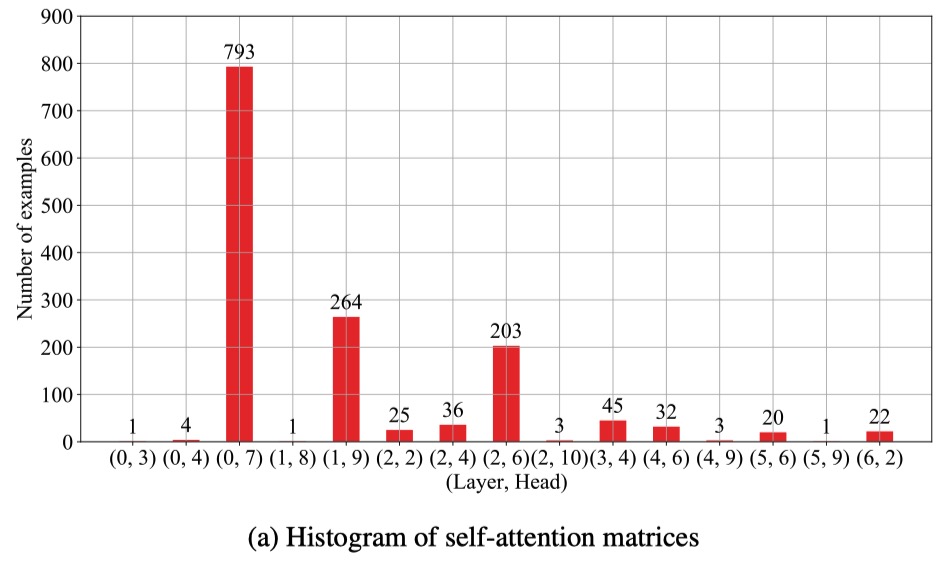

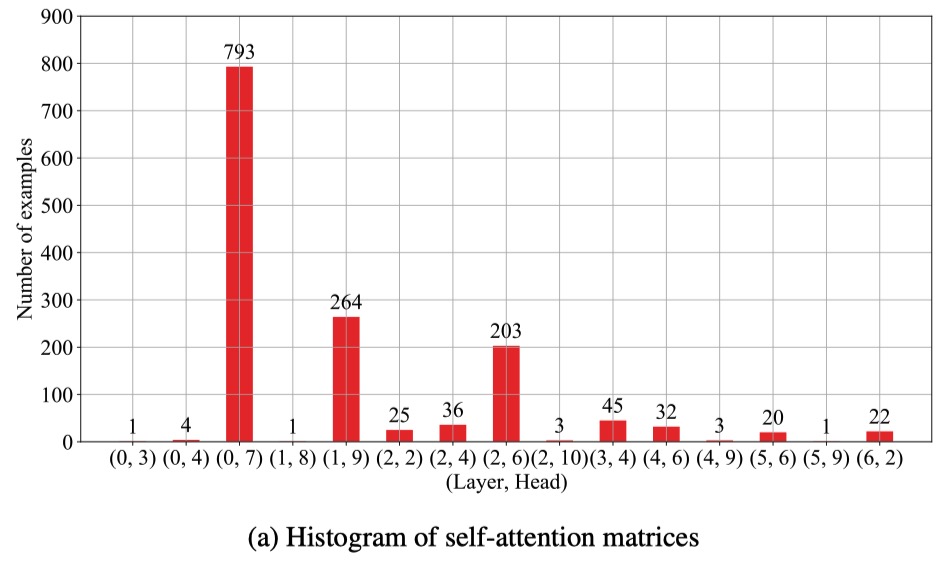

Figure 4: Among 144 self-attention matrices in the RoBERTa-based classifier finetuned for QNLI, there are 15 “word-matching” matrices (a) that explicitly attend to duplicate words that appear in both questions and answers regardless of the order of words in the question. That is, among the weights that connect question tokens to answer tokens, each of those matrices assigns its highest weights to three pairs of duplicate words (see example pairs in Fig. 6). For each QNLI example, we identified the one such matrix that exhibits the matching behavior most strongly (a). The task of attending to duplicate words is mostly handled in the first three layers (92%).
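
One way to read the "word-matching" criterion in this caption is sketched below: restrict an attention matrix to question-to-answer weights, take the three highest-weighted pairs, and check that each pair consists of the same word. This is an interpretation for illustration only. The function names, the toy attention values, and the exact-match test are assumptions; the authors' criterion may differ in detail.

```python
def top_cross_pairs(attn, q_tokens, a_tokens, k=3):
    """Return the k (question word, answer word) pairs with the highest weights.

    attn[i][j] is the weight from question token i to answer token j.
    """
    scored = [
        (attn[i][j], q_tokens[i], a_tokens[j])
        for i in range(len(q_tokens))
        for j in range(len(a_tokens))
    ]
    scored.sort(key=lambda t: t[0], reverse=True)
    return [(q, a) for _, q, a in scored[:k]]


def is_word_matching(attn, q_tokens, a_tokens, k=3):
    """True if all top-k cross pairs connect identical words (case-insensitive)."""
    pairs = top_cross_pairs(attn, q_tokens, a_tokens, k)
    return all(q.lower() == a.lower() for q, a in pairs)


q_tokens = ["when", "was", "apple", "founded"]
a_tokens = ["apple", "was", "founded", "in", "1976"]
attn = [  # invented weights, rows follow q_tokens
    [0.02, 0.03, 0.02, 0.05, 0.04],  # when
    [0.03, 0.60, 0.04, 0.02, 0.01],  # was
    [0.70, 0.02, 0.03, 0.01, 0.02],  # apple
    [0.02, 0.03, 0.65, 0.02, 0.03],  # founded
]
print(top_cross_pairs(attn, q_tokens, a_tokens))
print(is_word_matching(attn, q_tokens, a_tokens))  # True
```

The check depends only on which words are paired, not on their positions. In a real model the attention weights would be recomputed for a shuffled question, so the sketch shows the test applied to one matrix, not the model's behavior under shuffling.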

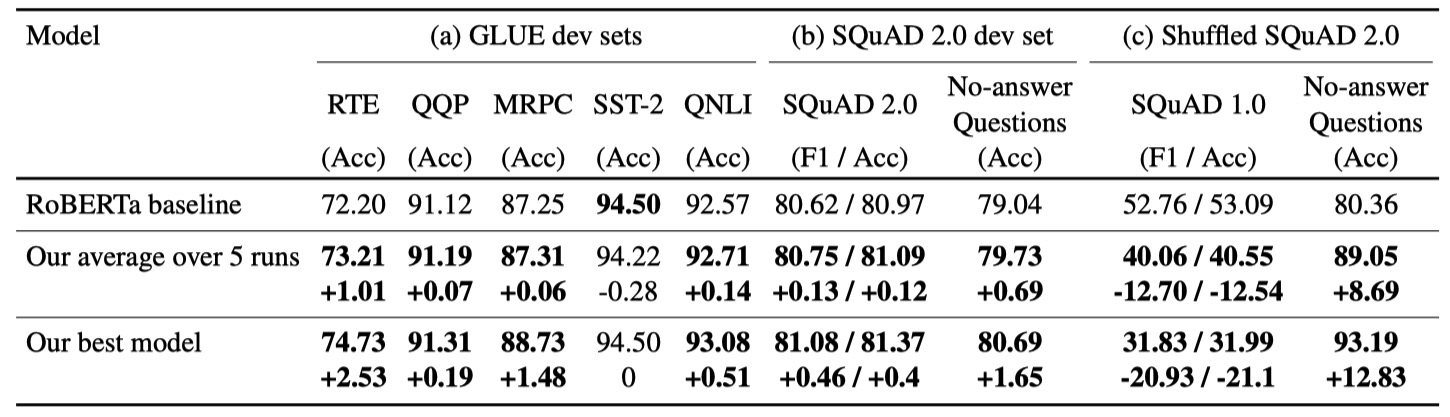

Table 1: An additional round of finetuning the pretrained RoBERTa on synthetic tasks (before finetuning on the downstream tasks) improved dev-set performance on all the tested tasks in GLUE (except for SST-2), SQuAD 2.0 (b), and out-of-sample data (c). Shuffled SQuAD 2.0 is the same as SQuAD 2.0 except that the words in each question are randomly shuffled.