A cost-effective method for improving and re-purposing large, pre-trained GANs by fine-tuning their class-embeddings

Qi Li, Long Mai, Michael A. Alcorn, Anh Nguyen


Large, pre-trained generative models have become increasingly popular and useful to both the research community and the wider public. In particular, BigGANs, class-conditional Generative Adversarial Networks trained on ImageNet, achieved state-of-the-art realism in generated photos. However, fine-tuning BigGANs or training them from scratch is practically impossible for most researchers and engineers because (1) GAN training is often unstable and suffers from mode collapse; and (2) the training requires a significant amount of computation, e.g., 256 Google TPUs for 2 days or 8 V100 GPUs for 15 days. Importantly, many pre-trained generative models in both the NLP and image domains were found to contain biases that are harmful to society. Thus, we need computationally feasible methods for modifying and re-purposing these huge, pre-trained models for downstream tasks. In this paper, we propose a cost-effective optimization method for improving and re-purposing BigGANs by fine-tuning only the class-embedding layer. We show the effectiveness of our model-editing approach in three tasks: (1) significantly improving the realism and diversity of samples for classes that suffer from complete mode collapse; (2) re-purposing ImageNet BigGANs to generate images for Places365; and (3) de-biasing or improving the sample diversity for selected ImageNet classes.

Conference: ACCV 2020. Oral presentation (acceptance rate: 63/768 = ~8%). Huawei Best Application Paper Honorable Mention (acceptance rate: 6/768 = ~0.8%)

Acknowledgment: This work is supported by the National Science Foundation under Grant No. 1850117 and a donation from Adobe Inc.


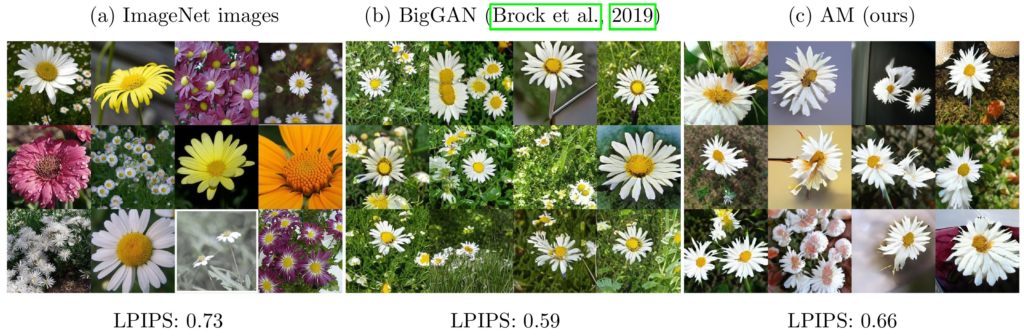

Figure 1: For some classes (here, daisy), the $256\times 256$ BigGAN samples (middle) are far less diverse than the real data (left). By updating only the class embeddings of BigGAN, our AM method (right) substantially improved the diversity, here reducing the LPIPS diversity gap by 50%. Interestingly, this result shows that the BigGAN generator itself was already capable of synthesizing such diverse images, but the original embeddings limited the diversity.

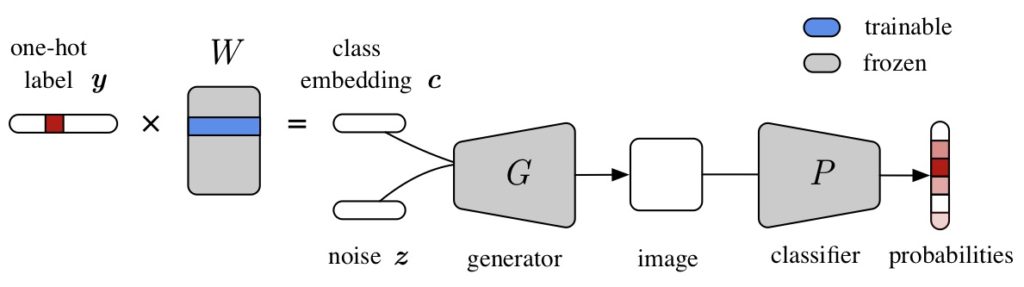

Figure 2: To improve the samples for a target class represented by a one-hot vector $y$, we iteratively take steps to find an embedding $c$ (i.e., a row in the embedding matrix $W$) such that all the generated images $\{G(c, z^i)\}$, for different random noise vectors $z^i \sim \mathcal{N}(0, I)$, would be (1) classified as the target class $y$; and (2) diverse, i.e., yielding different softmax probability distributions. We backpropagate through both the frozen, pre-trained generator $G$ and classifier $P$ and perform gradient descent over batches of random latent vectors $\{z^i\}$.
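The loop in Figure 2 can be sketched on a toy problem. The following is a minimal, illustrative sketch and not the authors' implementation: the generator and classifier are hypothetical frozen linear stand-ins ($G(c, z) = Ac + Bz$ and $P(x) = \mathrm{softmax}(Vx)$, with made-up matrices `A`, `B`, `V`), all dimensions are arbitrary, and only the classification term (1) is optimized; the diversity term (2) is omitted for brevity. Only the embedding `c` is updated.

```python
import math
import random

random.seed(0)

# Arbitrary toy sizes (assumptions, unrelated to BigGAN's real dimensions).
EMB, NOISE, IMG, CLASSES = 3, 2, 4, 3

def rand_matrix(rows, cols, scale=0.5):
    return [[random.gauss(0, scale) for _ in range(cols)] for _ in range(rows)]

def matvec(m, v):
    return [sum(a * b for a, b in zip(row, v)) for row in m]

def transpose(m):
    return [list(col) for col in zip(*m)]

def softmax(logits):
    top = max(logits)
    exps = [math.exp(l - top) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]

# Frozen, "pre-trained" stand-ins: never updated below.
A = rand_matrix(IMG, EMB)      # generator weights applied to the embedding c
B = rand_matrix(IMG, NOISE)    # generator weights applied to the noise z
V = rand_matrix(CLASSES, IMG)  # classifier weights

def generate(c, z):
    """Toy generator G(c, z) = A c + B z."""
    return [a + b for a, b in zip(matvec(A, c), matvec(B, z))]

def class_probs(c, z):
    """Toy classifier applied to a generated sample: P(G(c, z))."""
    return softmax(matvec(V, generate(c, z)))

def mean_target_prob(c, target, noises):
    return sum(class_probs(c, z)[target] for z in noises) / len(noises)

def am_step(c, target, batch, lr):
    """One gradient-descent step on the batch-mean cross-entropy w.r.t. c only."""
    grad = [0.0] * EMB
    for z in batch:
        p = class_probs(c, z)
        # d(cross-entropy)/d(logits) = softmax probabilities minus one-hot target
        d_logits = [pk - (1.0 if k == target else 0.0) for k, pk in enumerate(p)]
        d_image = matvec(transpose(V), d_logits)  # backprop through the classifier
        d_c = matvec(transpose(A), d_image)       # backprop through the generator
        grad = [g + d / len(batch) for g, d in zip(grad, d_c)]
    return [ci - lr * g for ci, g in zip(c, grad)]

def optimize_embedding(target, steps=200, batch_size=8, lr=0.5):
    c = [0.0] * EMB  # in practice one would start from the existing class embedding
    for _ in range(steps):
        batch = [[random.gauss(0, 1) for _ in range(NOISE)] for _ in range(batch_size)]
        c = am_step(c, target, batch, lr)
    return c
```

Comparing `mean_target_prob` on a held-out set of noise vectors before and after `optimize_embedding` shows whether the edited embedding steers more samples toward the target class. A fuller version would add a diversity term to the loss and replace the linear stand-ins with the real frozen networks and automatic differentiation.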

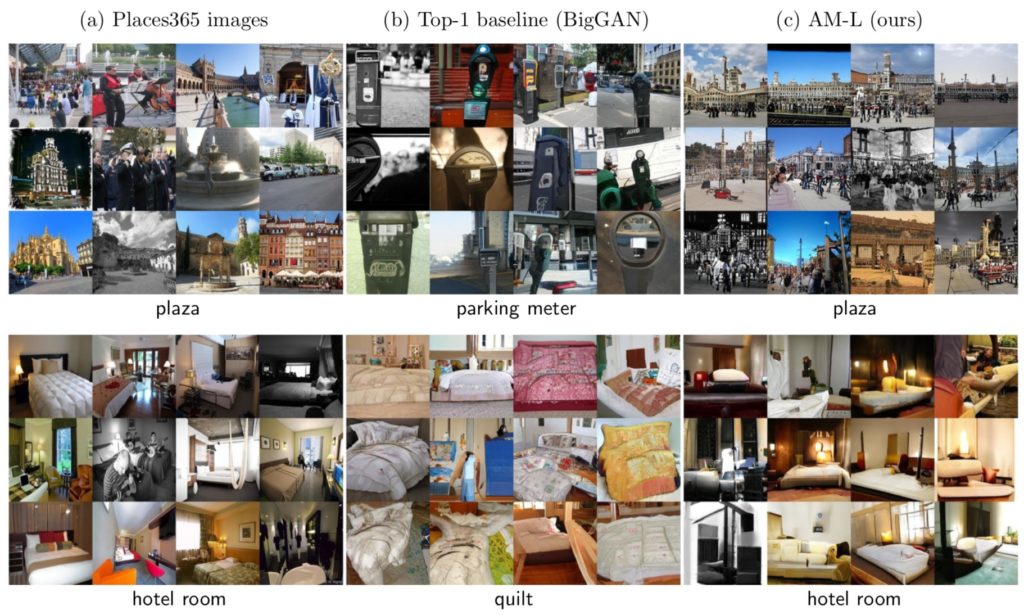

Figure 3: AM-L generated plausible images for two Places365 classes, hotel room (top) and plaza (bottom), which do not exist in the ImageNet training set of the generator. For example, AM-L synthesizes images of squares with buildings and people in the background for the plaza class (c), while the samples from the top-1 ImageNet class (here, parking meter) show parking meters on the street (b). Similarly, AM-L samples for the hotel room class have the distinctive touches of lighting, lamps, and windows (c) that do not exist in the BigGAN samples for the quilt class (b).

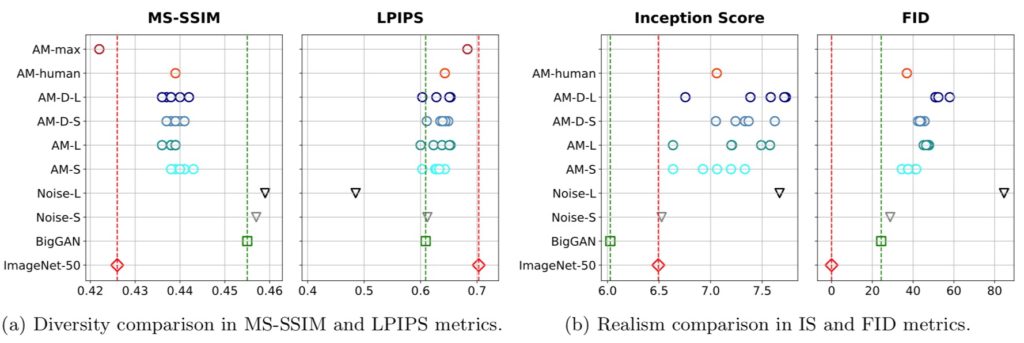

Figure 4: Each point in the four plots is a mean score across 50 classes from one AM optimization trial or one BigGAN model. The ultimate goal here is to close the gap between the BigGAN samples (green dashed line) and the ImageNet-50 distribution (red dashed line) in all four metrics. Naively adding noise degraded the embeddings in both diversity (MS-SSIM and LPIPS) and quality (IS and FID) scores, i.e., the black and gray triangles ($\nabla$) actually moved away from the red lines. Our optimization trials, on average, closed the *diversity* gap by about 50%, i.e., the AM circles are halfway between the green and red dashed lines (a). However, there was a trade-off between diversity and quality, i.e., on the IS and FID metrics, the AM circles moved further away from the red line (b).
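To make the "gap closed" reading of Figure 4 concrete, here is a small sketch of the arithmetic. The function name `gap_closed` and every score below are hypothetical placeholders chosen for illustration; they are not values reported in the paper.

```python
def gap_closed(baseline, edited, reference):
    """Fraction of the baseline-to-reference gap closed by the edited model.

    1.0 means the edited score matches the reference, 0.0 means no change,
    and a negative value means the edited score moved away from the reference.
    """
    gap = reference - baseline
    if gap == 0:
        raise ValueError("baseline already matches the reference; no gap to close")
    return (edited - baseline) / gap

# Hypothetical diversity scores (higher = more diverse):
# the edited model sits halfway between the baseline and the real data.
diversity = gap_closed(baseline=0.5, edited=0.625, reference=0.75)

# Hypothetical quality scores where lower is better (FID-like):
# the edited model moved away from the reference, so the result is negative.
quality = gap_closed(baseline=20.0, edited=30.0, reference=10.0)

print(diversity, quality)
```

Because the gap is signed, the same formula works for metrics where higher is better and for metrics where lower is better, which matches how the caption describes circles moving toward or away from the red line.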

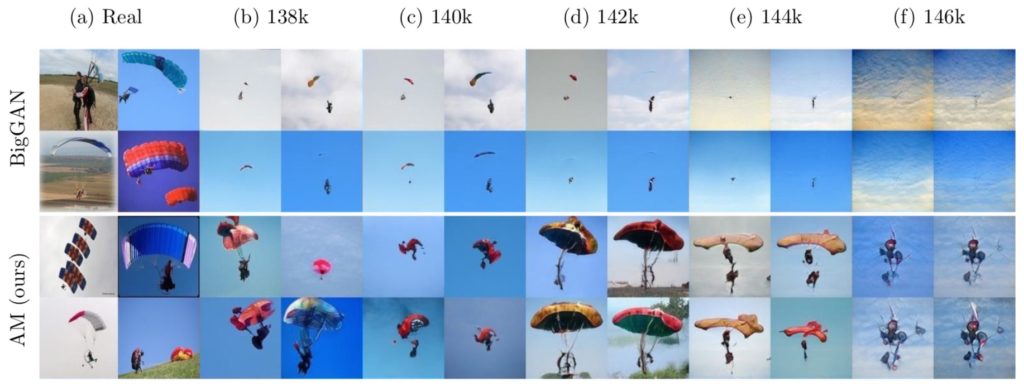

Figure 5: For the parachute class, the original $128\times128$ BigGAN samples (top panel) mostly contained tiny parachutes in the sky (b) and gradually degraded into images of blue sky only (c–f). AM (bottom panel) instead produced a more diverse set of close-up and far-away parachutes (b) and managed to paint the parachutes for nearly collapsed models (e–f). The samples in this figure correspond to the five snapshots (138k–146k).