Multi-agent spatiotemporal modeling is a challenging task from both an algorithmic design and computational complexity perspective. Recent work has explored the efficacy of traditional deep sequential models in this domain, but these architectures are slow and cumbersome to train, particularly as model size increases. Further, prior attempts to model interactions between agents across time have limitations, such as imposing an order on the agents or making assumptions about their relationships. In this paper, we introduce baller2vec, a multi-entity generalization of the standard Transformer that, with minimal assumptions, can simultaneously and efficiently integrate information across entities and time. We test the effectiveness of baller2vec for multi-agent spatiotemporal modeling by training it to perform two different basketball-related tasks: (1) simultaneously forecasting the trajectories of all players on the court and (2) forecasting the trajectory of the ball. Not only does baller2vec learn to perform these tasks well, it also appears to “understand” the game of basketball, encoding idiosyncratic qualities of players in its embeddings, and performing basketball-relevant functions with its attention heads.

Acknowledgment: This work is supported by the National Science Foundation under Grant No. 1850117.

Follow-ups:

- baller2vec++: A Look-Ahead Multi-Entity Transformer For Modeling Coordinated Agents (pdf | code)

- DEformer: An Order-Agnostic Distribution Estimating Transformer (pdf | code)

- Talk by Michael Alcorn on baller2vec

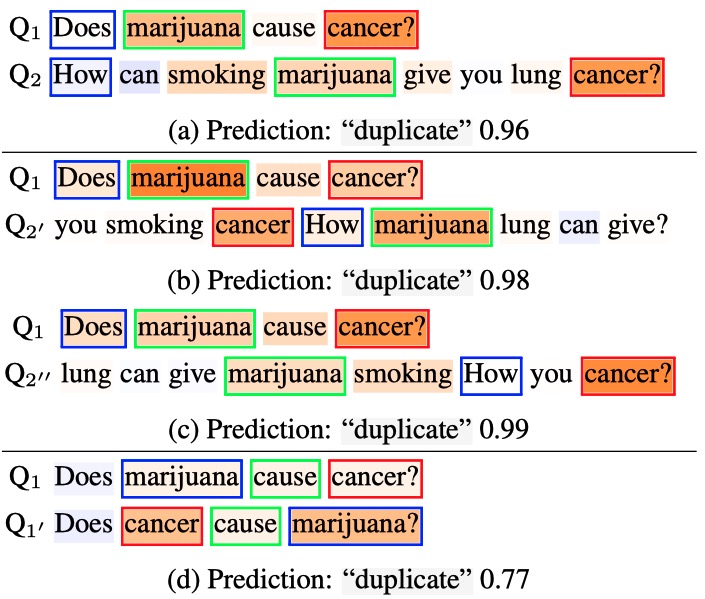

Figure 1: After solely being trained to predict the trajectory of the ball, given the locations of the players and the ball on the court through time, a self-attention (SA) head in baller2vec learned to anticipate passes (to the white, square player). See the paper for more details.

Figure 2: Nearest neighbors in baller2vec's embedding space are plausible doppelgängers. Credit for the images: Erik Drost, Keith Allison, Jose Garcia, Keith Allison, Verse Photography, and Joe Glorioso.

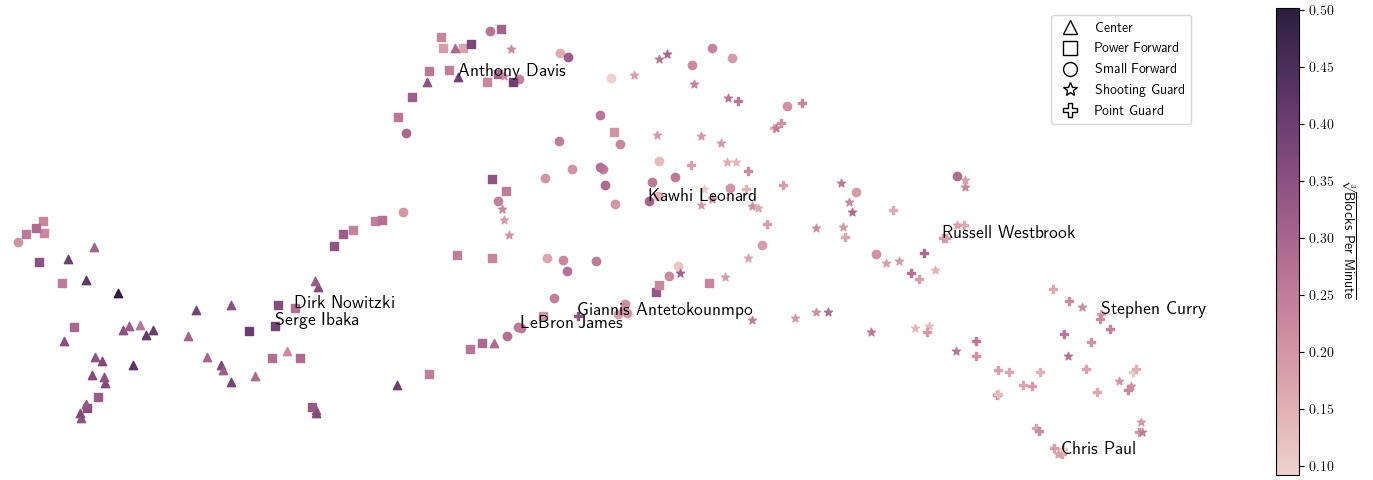

Figure 3: By exclusively learning to predict the trajectory of the ball, baller2vec was able to infer idiosyncratic player attributes (as can be seen in this 2D UMAP of the player embeddings). The left-hand side of the plot contains tall post players (triangles, squares), e.g., Serge Ibaka, while the right-hand side of the plot contains shorter shooting guards (stars) and point guards (+), e.g., Stephen Curry. The connecting transition region contains forwards (squares, circles) and other “hybrid” players, i.e., individuals possessing both guard and post skills, e.g., LeBron James. Further, players with similar defensive abilities, measured here by the cube root of the players’ blocks per minute in the 2015-2016 season (Basketball-Reference.com, 2021), cluster together.

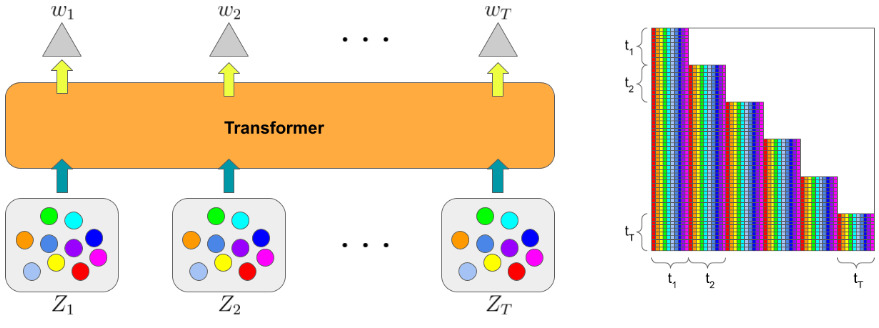

Figure 4: Left: the input for baller2vec at each time step t is an unordered set of feature vectors containing information about the identities and locations of NBA players on the court. Right: baller2vec generalizes the standard Transformer to the multi-entity setting by employing a novel self-attention mask tensor. The mask is then reshaped into a matrix for compatibility with typical Transformer implementations.
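The mask construction described in Figure 4 can be sketched in a few lines of NumPy. This is an illustrative sketch, not the authors' implementation. It assumes that the mask is causal in time only, so an entity at time step t may attend to every entity at time steps up to and including t, and that `True` marks a blocked pair. The function name `multi_entity_mask` and its parameters are hypothetical.

```python
import numpy as np


def multi_entity_mask(num_steps: int, num_entities: int) -> np.ndarray:
    """Return a (T*K, T*K) boolean matrix; True marks a blocked attention pair.

    Assumption: attention is causal over time and unrestricted across entities.
    """
    # Causal structure over time only: blocked[t_q, t_k] is True when t_k > t_q.
    time_blocked = np.triu(np.ones((num_steps, num_steps), dtype=bool), k=1)
    # Broadcast to a (T, K, T, K) tensor: every entity at query step t_q has the
    # same visibility over every entity at key step t_k.
    tensor = np.broadcast_to(
        time_blocked[:, None, :, None],
        (num_steps, num_entities, num_steps, num_entities),
    )
    # Flatten each (time, entity) pair into one sequence position, time-major,
    # so the result has the 2D shape that typical Transformer code expects.
    size = num_steps * num_entities
    return tensor.reshape(size, size)


mask = multi_entity_mask(num_steps=3, num_entities=2)
print(mask.astype(int))
```

With three time steps and two entities, the result is a 6 x 6 matrix made of 2 x 2 blocks: blocks on and below the block diagonal are all zeros (visible), and blocks above it are all ones (blocked). Whether `True` means "blocked" or "visible" differs between attention implementations, so the matrix may need to be inverted before use.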