Our group is focused on Trustworthy and Explainable Artificial Intelligence (AI).

- Understanding the weaknesses of AIs and making them more robust & accurate in rare, unseen scenarios.

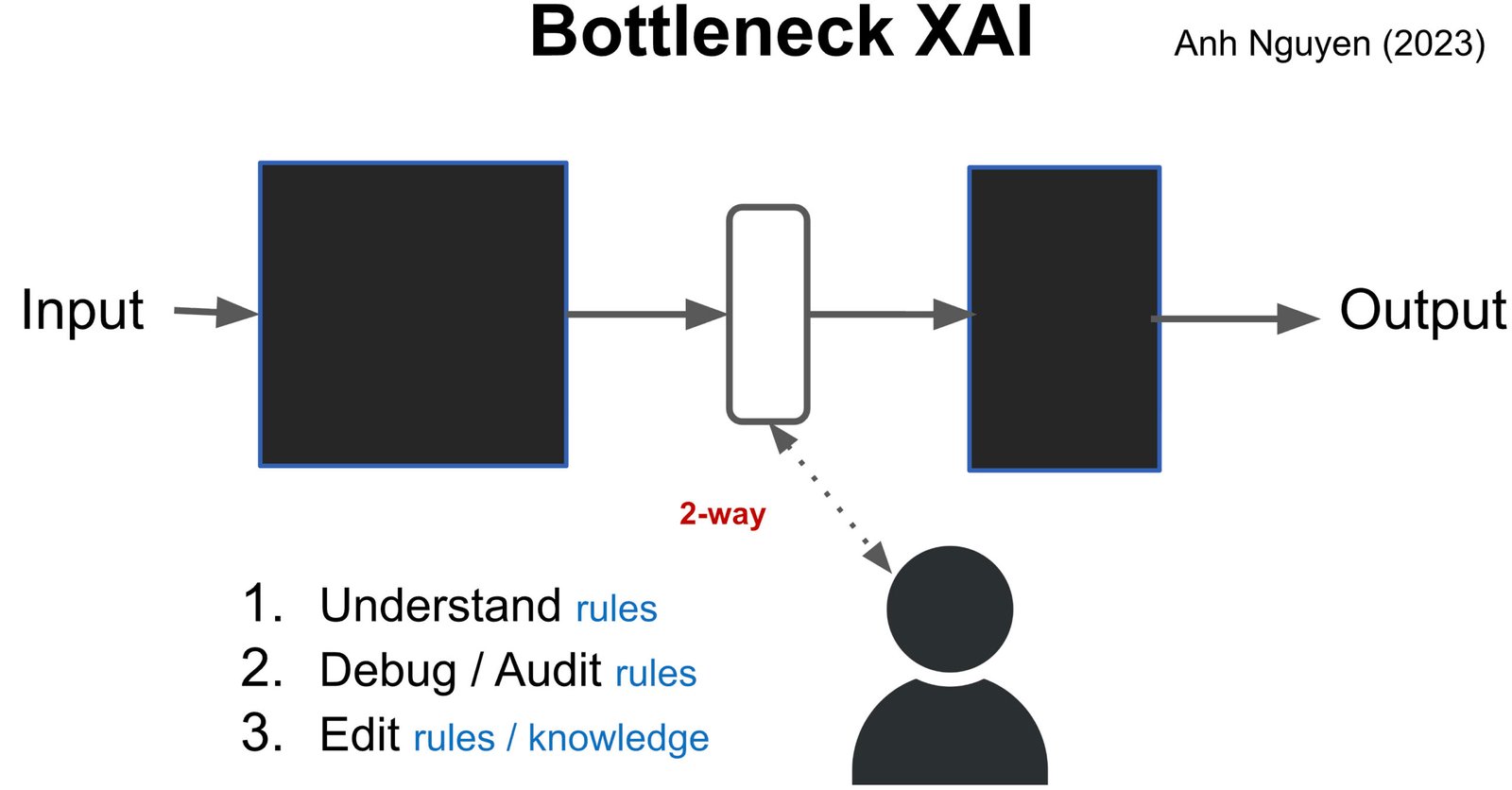

- We currently believe that one path to better accuracy in rare cases is to have AIs explain themselves through an explainable bottleneck interface, with the exact explanation format depending on the application. This bottleneck may sit (a) inside a network, (b) within a multi-network system, or (c) at the output of a network. A key desideratum: explanations should be editable, and edits should causally change the AI's output.
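As a toy illustration of case (c), a bottleneck at the output, the sketch below routes every prediction through a human-readable explanation, so editing the explanation changes the label. It is only a minimal sketch under stated assumptions and not our actual implementation: the class `BottleneckModel`, the attribute names, the scores, and the threshold are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class BottleneckModel:
    """Toy two-stage classifier: input scores -> explanation -> label."""

    threshold: float = 0.5  # hypothetical cut-off for calling an attribute "present"

    def explain(self, scores: dict[str, float]) -> dict[str, bool]:
        # Stage 1: turn raw (hypothetical) attribute scores into a readable explanation.
        return {name: score >= self.threshold for name, score in scores.items()}

    def predict(self, explanation: dict[str, bool]) -> str:
        # Stage 2: the label is computed ONLY from the explanation,
        # so any edit to the explanation can causally change the output.
        if explanation.get("has_wings") and explanation.get("has_beak"):
            return "bird"
        return "not bird"


model = BottleneckModel()
scores = {"has_wings": 0.9, "has_beak": 0.3}  # made-up detector outputs

explanation = model.explain(scores)
original_label = model.predict(explanation)

# A user disagrees with the explanation and corrects one attribute.
edited = dict(explanation, has_beak=True)
edited_label = model.predict(edited)

print(explanation, "->", original_label)
print(edited, "->", edited_label)
```

Because `predict` never sees the raw scores, the explanation is the sole interface between the two stages. That is what makes the edit causal rather than cosmetic.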

To test and explain AIs, we have explored many techniques:

- Making LLMs synthesize an interactive web interface (2026I) that explains their answers to a given question, instead of a raw block of text that is hard for users to read.

- Making LLMs explain their answers to Excel/spreadsheet questions by tracing the intermediate table transformations (2025T), and to QA questions by referencing facts in the input question (2025H)

- Testing the capabilities of VLMs on out-of-distribution images that invite biased responses (2025B) or require basic vision skills (2024A)

- Building Siamese, change detection networks (2025A) for comparing images to explain semi-parametric models (e.g. between input and training-set images in k-NN) (2024P)

- Building Transformers with an attention bottleneck (2025)

- Evaluating CNN and Transformer image classifiers (2015, 2019, 2023), NLP text classifiers (2021), and vision-language models (2024C, 2024A)

- Building image classifiers with explainable, editable visual-correspondence bottlenecks (2022C, 2022N, 2024E)

- Testing the effect of explanations on human decision-making accuracy (2021, 2022, 2024)

- Leveraging image similarity models and training-set examples to improve image classification and explainability (2024)

- Inverting CNNs for explanation and synthesis (2017, 2022)

- Visualizing neural activations (2015) and counterfactual generations (2021)

- Feature visualization a.k.a. activation maximization (2016I, 2016N, 2020)

- Feature importance/attribution (2020C, 2020A, 2022AA, 2022A)

- Quantifying CNN preferences/biases (2020) and failures in natural (2019, 2021, 2024) and adversarial settings (2015, 2018)

🎖️ Sponsors

Our lab is grateful to have received funding from:

- National Science Foundation CAREER (#2145767)

- NaphCare Charitable Foundation (gift)

- National Science Foundation CRII (#1850117)

- Adobe Research, Amazon, Google, Nvidia

The following prestigious fellowships have supported our students:

- AU Presidential Graduate Research Fellowships (PGRF): Giang Nguyen (2021), Huy Hung Nguyen (2023), Thang Truong (2025)

- Woltosz Fellowship: Michael Alcorn (2018), Hai Phan (2021)

- AL EPSCoR Graduate Research Scholar: Hai Phan (2022)

- AU Charles Gavin Research Fellowship: Pooyan Rahmanzadehgervi (2022)

Our industry partners have recruited our students for summer internships:

- Adobe Research: Michael Alcorn (2019), Thang Pham (2021), Hai Phan (2022), Thang Pham (2023, 2024), Mohammad Taesiri (2024)

- Bosch: Qi Li (2020), Renan Gomez (2021)

- Noteworthy AI: Peijie Chen (2023, 2024)

🧔🏻 Members

| Name | Status | Before Auburn | Internships during study |
|---|---|---|---|
| Thang Truong | AU PGRF Fellow Ph.D. student (2025 – present) | BS @ UT Austin (TX, USA) | |
| Pooyan Rahmanzadehgervi | AU Charles Gavin Research Fellow Ph.D. student (2022 – present) | MS @ Ferdowsi U of Mashhad (Iran) | |
| Tin Nguyen | Ph.D. student (2022 – present) | BS @ VNUHCM (Vietnam); MS @ Sejong U (Korea) | |
| Hung Huy Nguyen | AU PGRF Fellow Ph.D. student (2023 – present) | BS @ HUST (Vietnam); MS @ Seoul National U (Korea) | |
| Logan Bolton | Undergraduate student (2024 – present) | BS @ Auburn U | |
| Brandon Collins | Undergraduate student (2025 – present) | BS @ Auburn U; ACM President | |

🎓 Alumni

| Name | Status | Before Auburn | Internships during Ph.D. | After PhD | Latest |
|---|---|---|---|---|---|
| Giang Nguyen | AU PGRF Fellow Ph.D. student (2021 – 2025) | BS @ HUST (Hanoi); MS @ KAIST (Korea) | JP Morgan 2024 | Research Scientist at Guides Lab | (2025) Research Scientist at Guides Lab |
| Thang Pham | Ph.D. student (2020 – 2024) | ML engineer @ AIST (Tokyo); BS @ VNUHCM (Vietnam) | 2021 Adobe, 2023 Adobe, 2024 Adobe | Sr. ML Engineer at Adobe Research, San Jose, CA. | (2025) Sr. ML Engineer at Adobe Research |
| Hai Phan | Woltosz Fellow, EPSCoR GRSF Fellow Ph.D. student (2021 – 2024) | MS @ Carnegie Mellon U (Pittsburgh); BS @ VNUHCM | 2022 Adobe; 2022 Meta | Research Engineer at Blue Marble Geographics | (2024) Research Engineer at Blue Marble Geographics |
| Peijie Chen | Ph.D. student (2019 – 2024) | BS @ Shenzhen U | 2022 Noteworthy AI; 2024 Noteworthy AI | Senior ML Engineer at Noteworthy AI | (2025) Senior ML Engineer at Noteworthy AI |
| Mohammad Reza Taesiri | Remote collaborator (2020 – 2024) | M.S. Sharif U | 2023 Ubisoft, 2024 Adobe | Ph.D. student at U Alberta | (2025) Research Scientist at EA Sports |
| Renan Alfredo Rojas Gomez | Ph.D. student (2021-2023) co-advised with Prof. Minh Do at UIUC | | 2021 Bosch, 2024 DeepMind | ML Engineer at Apple, Cupertino, CA. | (2025) ML Engineer at Apple, Cupertino, CA. |
| Michael Alcorn | 2018 Woltosz Fellow; Ph.D. student (2018-2021) | ML engineer @ Red Hat; MS @ UT Dallas; BS @ Auburn | 2019 Adobe; 2020 Cleveland Indians | Postdoc at USDA | (2025) Senior ML Engineer Bear Flag Robotics |
| Qi Li | M.S. student (2018-2021) | BS @ Southeast U (Nanjing) | 2020 Bosch intern | | (2025) Assistant Professor, Fisk University |
| Chirag Agarwal | Ph.D. student (2018-2020) | MS @ U Illinois at Chicago | 2017 Kitware intern; 2018 Tempus labs intern; 2018 Bosch intern | Postdoc at Harvard, Research Scientist at Adobe Research | (2025) Assistant Professor at UVA |
| Naman Bansal | M.S. student (2018-2020) | BS @ IIT-G | 2022 Facebook/Meta intern | | (2025) Software Engineer at Google |
| Zhitao Gong | Ph.D. student (2017 – 2019) co-advised with Prof. Wei-shinn Ku | BS @ Nanjing U | 2017 Google intern; 2018 Facebook intern | Research Engineer at DeepMind | (2025) Research Engineer at DeepMind |
| Nader Akoury | Remote collaborator (2017 – 2018) | BS @ Duke; Software Engineer at Yelp | | Ph.D. student at U Mass Amherst | |
| Vishaal Kabilan | Research Intern (2017 – 2018) | MS @ Worcester Polytechnic | | Data scientist at IBM Bangalore | |
| Brandon Morris | B.S. student (2017 – 2018) | | | DoD SMART Ph.D. student at ASU | |